Raising Scientific Experts

By Nancy L. Jones

Competing interests threaten the scientific record, but courage and sound judgment can help

DOI: 10.1511/2011.93.458

I would be a wealthy woman if I had a dollar for each time a student, a postdoctoral fellow, one of my colleagues—or even I—moaned and groaned about the capriciousness of scientific peer review. Some newbies are stymied in front of their computer keyboards for months as they write their first manuscript, trying to organize their meandering paths of research and messy, gray data into logical experimental designs and strong conclusions. Others, demoralized by pithy, anonymous critiques (surely from their toughest competitors), have to muster all their restraint to keep from writing scathing, retaliatory responses to their reviewers. I remember my own qualms on one of the first occasions that I evaluated grant proposals with a panel of reviewers. I felt certain that my lack of gamesmanship was the reason a few outstanding applications were not funded. While I reviewed my assignments using the criteria given, other reviewers adamantly championed—and got more attention for—the best of the proposals they evaluated. No one had prepped me for how the review committee would operate.

Illustration by Tom Dunne.

Peer review is one of the central activities of science, but students and trainees are often ill prepared to assume their duties as authors and reviewers. Clearly, there is more to peer review and publication than factual knowledge and technical skills. Science is a culture. To succeed, we need to nimbly navigate within our professional culture. There is much that training programs can do to instill professionalism in the next generation of scientists, and I will outline some of the approaches that my colleagues and I used to develop an ethics and professionalism curriculum at Wake Forest University School of Medicine (WFUSM). But first, if we are to aspire to excellence in scientific publication—and train young scientists to do the same—it is important to understand the purpose of scientific publishing and the competing interests that may compromise it.

The central role of publication is to create a record that advances collective knowledge. When research and scholarship are published in a peer-reviewed journal, it means that the scientific community has judged them to be worthwhile contributions to the collective knowledge. That is not to say a publication represents objective truth: All observations are made in the context of the observer’s own theories and perceptions. Research findings must, therefore, be reported in an accurate and accessible way that allows other scientists to draw their own conclusions. Readers should be able to reinterpret the work in light of new knowledge and to repeat experiments themselves, rather than rely solely on the authors’ interpretations.

The nature of scientific progress also requires that the scientific record include negative results and repetitions of previous studies. Reporting both positive and negative results informs future work, prevents others from retracing wrong avenues and demonstrates good stewardship of limited resources. Out of respect for the contributions of research subjects, especially humans and other primates, some argue that there is a moral imperative to publish negative results. Doing so can prevent unnecessary repetition of experiments. On the other hand, reproducibility itself is a cornerstone of science. There must be a place to report follow-up studies that confirm or refute previous findings.

Finally, scientific discourse should embrace the principle of questioning certitude—reevaluating the resident authoritative views and dogmas in order to advance science. The scientific record should challenge the current entrenched ideas within a field by including contributions from new investigators and other disciplines. Examining novel ideas and allowing them to flourish helps the scientific community uncover assumptions, biases and flaws in its current understanding.

In an ideal world, peer review is the fulcrum that ensures the veracity of each research report before it enters the scientific record. The prima facie principle for the practice of science is objectivity, but we all know that true objectivity is impossible. Therefore, science relies on evaluation by subject-matter experts, the peer reviewers, who assess the work of other researchers. They critique the experimental design, models and methods and judge whether the results truly justify the conclusions. Reviewers also evaluate the significance of each piece of research for advancing scientific knowledge. This neutral critique improves the objectivity of the published record and ensures that each study meets the standards of its field.

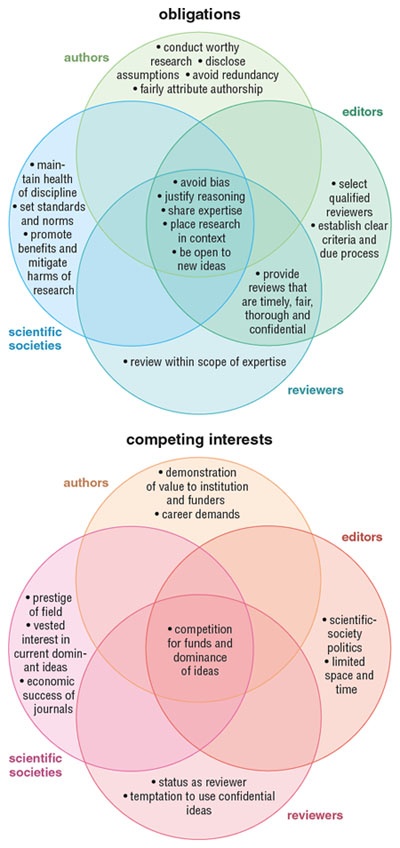

For peer review to serve its intended function, authors, reviewers, editors and scientific societies must uphold certain ethical obligations, detailed in the accompanying figure. In short, authors must do their best to conduct sound, worthwhile research and openly share the results. Reviewers must be open about potential conflicts of interest, and they must provide critiques that are fair, thorough and timely. And scientific societies, as guardians and gatekeepers of their specific spheres of knowledge, must provide a normative process that ensures the rigor and validity of published results.

In fact, the scientific record serves other purposes besides advancing collective knowledge. As a result, highly charged ethical conundrums emerge throughout the publication process. Science is an interactive process conducted by humans who have their own aspirations and ambitions, which give rise to competing interests—some of which are listed in the figure below. The inescapable conflict in science is each individual’s underlying self-interest and commitment to promoting his or her own ideas. Furthermore, authorship is the primary currency for professional standing. It is necessary for credence and promotion within one’s home institution and the scientific community, and is essential to securing research funds.

Illustration by Tom Dunne.

Indeed, the requirement that scientists obtain grants to support their research and salaries, coupled with funders’ accountability to the public for its investment in science, puts intense strain on the system. Increasingly, the publication record is used to weigh whether public funding for science is worthwhile. U.S. investment in science and technology has long been tied to the idea that science will give our society progress and improve our prosperity, health and security. That perspective was famously articulated in 1945 by Vannevar Bush, who was then director of the U.S. Office of Scientific Research and Development, and it continues to shape funding for science today.

Although public investment in research has sped the progress of science, it has also placed scientific communities in an advocacy role. They are no longer just the guardians of knowledge; they compete for public resources and champion their specific fields. Their advocacy cases are often based heavily on promoting the potential outcomes of research—such as cures, solutions and new economic streams—rather than justifying support for the research itself. The scientific record is not immune to this pressure. Scientific societies that publish journals can be tempted to boost the prestige of their fields by prioritizing highly speculative, sexy articles and by egregiously overpromoting the potential impact of the research.

Such overpromising is particularly problematic because of the pervasiveness in our society of scientism and scientific optimism, which hold that scientific knowledge is truth. According to the philosophy of scientism, science is universal and above any cultural differences. It is immune to influences from an investigator’s psychological and social milieu or gender, and even to the scientific community’s own assumptions and politics. Under the influence of scientism, the public, media and policy makers can be tempted to apply research results without exercising the judgment needed to put them in context. Individuals who are deeply vested in scientific optimism can have difficulty seeing any potential harm as science “moves us toward utopia.” They may even become confused about science’s ability to make metaphysical claims about what life means.

But the epistemology of science (how science knows what it knows) cannot support these unreasonably optimistic conclusions. Scientific knowledge is tentative. It forms through an ongoing process of consensus making as the scientific community draws upon empirical evidence as well as its own assumptions and values. And scientific models—classification schemes, hypotheses, theories and laws—are conceptual inventions that can only represent our current best understanding of reality. Although these models are essential tools in science, we must continually remind ourselves, our students and the public that conceptual models are not reality. Nor is a research article—even a peer-reviewed publication—the truth.

Authors, reviewers and editors must take care to accurately communicate the kind of scientific knowledge addressed in any given publication, as well as its limitations. Authors should pay careful attention to inherent biases in their work and tone down overly optimistic conclusions. Reviewers and editors must correct any remaining inflation of the interpretations and conclusions. And scientific societies need to provide an adequate understanding of the process of science. They must convey levelheaded expectations about the speculative nature of any individual study and about the time and resources that will be needed to realize the public’s investment in a field of research. Otherwise, the continued projection of scientism—science is always progress—will erode trust in science at a much more fundamental level than will the few flaws and misconduct cases that surface in the scientific record itself.

More than ever before, acquiring technical skills does not assure success as a scientist. Survival depends on operating with finesse, using what are often called soft skills. Of course scientific communities have an obligation to train their future scientists in the conceptual and methodological tools for conducting research. But they must also train students to function within the scientific culture, based on a thorough understanding of the norms, standards and best practices in the student’s specific discipline.

At WFUSM, several of my colleagues and I pioneered a curriculum to promote professionalism and social responsibility within science. Our goal was to equip our students with the tools to navigate the challenging research culture with high integrity. These included soft skills such as the ability to recognize ethical issues within the practice of science, solve problems, work in groups, articulate and defend one’s professional judgment and critique the judgment of one’s peers. We also wanted to develop within each student an identity as a scientific professional, acculturated to the standards of the discipline through open communication with peers and role models.

To work toward these goals, we chose a problem-based learning format, to which my colleagues later added some didactic lectures. Problem-based learning is structured around authentic, engaging case studies and requires that students gain new knowledge to solve problems in the cases. After a scenario is introduced in one class session, students seek out relevant information on their own, then apply that knowledge to the case during the next class session. Students work actively in groups, with guidance from facilitators (faculty and postdoctoral fellows) who serve as cognitive coaches rather than content experts.

In our curriculum, the scenarios were designed to provide a realistic understanding of the practice of science and to prompt discussion of the norms and best practices within the profession. They also required students to identify ways that the various stakeholders—principal investigators, postdoctoral fellows, graduate students, technicians, peer reviewers and others—could manage their competing interests. We constructed activities and discussion questions so that different cases stressed distinct types of moral reflection. For example, we introduced two moral-reasoning tools, each one a set of questions that students could use to systematically sift through the principles, values and consequences in the cases. (Questions included, for instance, “What are the issues or points in conflict?” and “Can I explain openly to the public, my superiors or my peers my reasons for acting as I propose?”) Some sessions focused on moral character and competence by requiring students to solve problems and defend their decisions. Others called for students to take the perspective of a professional scientist, thereby building a sense of moral motivation and commitment. Finally, some cases cultivated moral sensitivity by presenting the perspectives of multiple stakeholders and promoting awareness of legal, institutional and societal concerns. Facilitators gave students feedback on their reasoning, moral reflection, group skills and ability to analyze problems. During a debriefing activity at the end of each case, students identified which concrete learning objectives they had accomplished. They also discussed how they were functioning as a group and what they could do to improve their team dynamic.

The curriculum addressed a range of issues in ethics and professionalism, among which peer review and authorship were important themes. Cases on scientific authorship required students to investigate, between class meetings, the criteria by which their own laboratory groups, departments, institution and professional networks assigned authorship credit. Back in class, each small group collectively assembled a standard operating procedure for assigning credit, and applied it to resolve the authorship problem in the scenario. Cases on peer review called attention to the various roles of the author, the reviewer and the editor in evaluating a manuscript. Students identified essential elements of a well-done review, the greatest ethical risks for a reviewer and strategies to mitigate those risks. Students then applied this information in their discussions of a case study in which an up-and-coming researcher was asked to review grant proposals that could influence her own research or affect a friend’s career.

Although my colleagues and I are confident that students benefited from our curriculum, it takes more than a professionalism course to really nurture a scientific expert. Opportunities to improve and test one’s understanding of scientific culture and epistemology should be pervasive throughout the training experience. To refine one’s judgment requires extensive practice, a supportive climate and constructive feedback. This means that mentors, graduate programs, societies and funders must value time away from producing data. Fortunately, scientific knowledge is not the data; it is how we use the data to form and refine conceptual models of how the world works. So activities that improve scientific reasoning and judgment are worth the investment of time.

More attention must be paid to the epistemology of science and the underlying assumptions of the tools of the trade. As methods and experimental approaches become entrenched in a field, rarely do students return to the rich debates that established the current methodology. This lack of understanding comes to light when prepackaged test kits, fancy electronic dashboard controls and computer-generated data tables fail to deliver the expected results. To interpret their own research and critique that of their peers, scientists need to understand the basis of the key conceptual models in their discipline.

The best way to develop sound scientific judgment is to engage with the scientific community—friend and foe alike—to articulate, explain and defend one’s positions and to be challenged by one’s peers. This learning process can take place in laboratory discussions, journal clubs, department seminars and courses, as well as during professional-society functions and peer-review activities. As my colleagues and I learned from the evaluations of our problem-based learning course, students (and faculty) need explicit instruction on the goals, expectations and skills of these non-didactic activities. After a laboratory discussion, for example, time could be spent reviewing what was learned, giving feedback on how to develop soft skills, and providing an opportunity to collectively improve the group process. Students should be evaluated on their meaningful participation in these community activities.

Professional societies also have an important role to play in fostering professionalism among young scientists. As Michael Zigmond argued in “Making Ethical Guidelines Matter” (July–August), societies are uniquely positioned to develop effective, discipline-specific codes of conduct to guide the standards of their professions. Criteria and practical guides to authorship and peer review are important—but they’re not enough. We must open the veil and show how seasoned reviewers apply those criteria. Discussions among reviewers and editors about how they put the guidelines into practice are the best way to move forward ethically. Indeed, such rich exchanges should be modeled in front of the entire research community, especially for students, showing how different individuals apply the criteria to critique a paper or proposal and then respectfully challenge each other’s conclusions. Societies and funders could also provide sample reviews with commentary on their strengths and weaknesses, and how they would be used to make decisions about publication or funding.

As graduate programs and societies implement such programs, they also need to ask themselves if their activities are actually conducive to open scientific dialogue. Sometimes, the cultural climate stifles true engagement by tolerating uncollegial exchange or by allowing participants to float in unprepared for substantive discussion. There must be a spirit of collective learning that allows students to examine the assumptions and conceptual models that are under the surface of every method and technique. No question should be too elementary. Arrogant, denigrating attitudes should not be tolerated.

Finally, scientists should foster commitment to their profession and its aspirations and norms. This goal is best accomplished through frank discussions about how science really works and about the various competing interests that pull on a scientist’s obligations as author, peer reviewer and, sometimes, editor. Many students enter the community vested in scientism—living above the ice-cream parlor, if you will. Viewing life through those idealistic, optimistic lenses causes them to stumble into an epistemological nightmare the first time they try to make black-and-white truth out of their confusing data. Or even worse, they become sorely pessimistic after naively smacking headfirst into the wall of disillusionment when trying to publish in a prestigious journal or competing, for the first time, for an independent research grant. We must provide opportunities that afford socialization around the principles, virtues and obligations of science. Our faculty and trainees should freely discuss how they have dealt with their own competing interests and managed conflicts within peer review and authorship. All participants need to enter these conversations with a willingness to learn from others and address how to improve the culture.

I’ll end by positing a new definition of professionalism: A scientist, in the face of intense competing interests, aspires to apply the principles of his or her discipline to support the higher goal of science—to ethically advance knowledge for the good of humankind. Professionalism takes courage, but when leaders display this courage, the journey for those who follow is better.

This article does not represent NIH views, nor was it part of the author's professional NIH activities.
