Foolproof

By Brian Hayes

Mathematical proof is foolproof, it seems, only in the absence of fools

Mathematical proof is foolproof, it seems, only in the absence of fools

DOI: 10.1511/2007.63.10

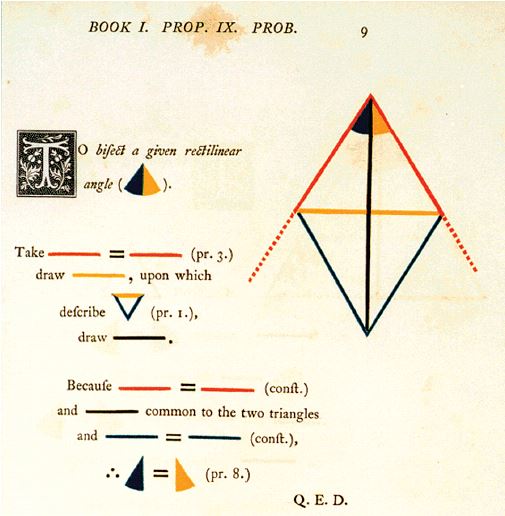

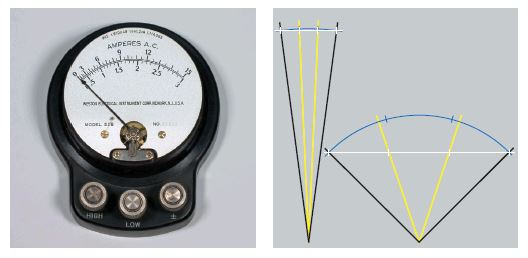

I was a teenage angle trisector. In my first full-time job, fresh out of high school, I trisected angles all day long for $1.75 an hour. My employer was a maker of volt-meters, ammeters and other electrical instruments. This was back in the analog age, when a meter had a slender pointer swinging in an arc across a scale. My job was drawing the scale. A technician would calibrate the meter, recording the pointer's angular deflection at a few key intervals—say 3, 6, 9, 12 and 15 volts. When I drew the scale, using ruler and compass and a fine pen, I would fill in the intermediate divisions by interpolation. That's where the trisection of angles came in. I was also called upon to perform quintisections and various other impossible feats.

Brian Hayes

I joked about this with my coworker and supervisor, Dmytro, who had been drawing meter scales for some years. We should get extra pay, I said, for solving one of the famous unsolvable problems of antiquity. But Dmytro was a skeptic, and he challenged me to prove that trisection is impossible. This was beyond my ability. I did my best to present an outline of a proof (after rereading a Martin Gardner column on the topic), but my grasp of the mathematics was tenuous, my argument was incoherent, and my audience remained unconvinced.

On the other hand, Dmytro himself quickly produced visible evidence that the specific method of trisection we employed—drawing a chord across the angle and dividing it into three equal segments—gave incorrect results when applied to large angles. After that, we made sure all the angles we trisected were small ones. And we agreed that the whole matter was something we needn't discuss with the boss. Our circumspect silence was a little like the Pythagorean conspiracy to conceal the irrationality of √(2).

Looking back on this episode, I am left with vague misgivings about the place of proof in mathematical discourse and in everyday life. Admittedly, my failure to persuade Dmytro was entirely a fault of the prover, not of the proof. Still, if proof is a magic wand that works only in the hands of wizards, what is its utility to the rest of us?

Here is how proof is supposed to work, as illustrated by an anecdote in John Aubrey's Brief Lives about the 17th century philosopher Thomas Hobbes:

He was 40 yeares old before he looked on geometry; which happened accidentally. Being in a gentleman's library in..., Euclid's Elements lay open, and 'twas the 47 El. libri I. He read the proposition. "By G—," sayd he (he would now and then sweare, by way of emphasis), "this is impossible!" So he reads the demonstration of it, which referred him back to such a proposition; which proposition he read. That referred him back to another, which he also read. Et sic deinceps, that at last he was demonstratively convinced of that trueth. This made him in love with geometry.

What's most remarkable about this tale—whether or not there's any trueth in it—is the way Hobbes is persuaded against his own will. He starts out incredulous, but he can't resist the force of deductive logic. From proposition 47 (which happens to be the Pythagorean theorem), he is swept backward through the book, from conclusions to their premises and eventually to axioms. Though he searches for a flaw, each step of the argument compels assent. This is the power of pure reason.

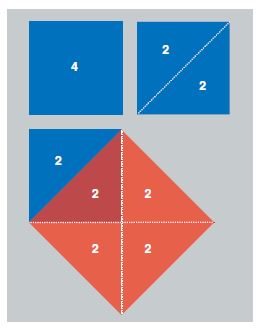

For many of us, the first exposure to mathematical proof—typically in a geometry class—is rather different from Hobbes's middle-age epiphany. A nearer model comes from another well-worn story, found in Plato's dialogue Meno. Socrates, drawing figures in the sand, undertakes to coach an untutored slave boy, helping him to prove a special case of the Pythagorean theorem. I paraphrase very loosely:

Socrates:Here is a square with sides of length 2 and area equal to 4. If we double the area, to 8 units, what will the length of a side be?

Boy: Umm, 4?

Socrates: Does 4*4=8?

Boy: Okay, maybe it's 3.

Socrates: Does 3*3=8?

Boy: I give up.

Socrates:Observe this line from corner to corner, which the erudite among us call a diagonal. If we erect a new square on the diagonal, note that one-half of the original square makes up one-fourth of the new square, and so the total area of the new square must be double that of the original square. Therefore the length of the diagonal is the length we were seeking, is it not?

Boy: Whatever.

At this point I trust we are all rooting for the kid. I would like to be able to report that the dialogue continues with the boy taking the initiative, saying something like, "Okay, dude, so what's the length of your erudite diagonal? It's not 4 and it's not 3, so what is it, exactly?" Alas, Plato reports no such challenge from the slave boy.

The problem with the Meno proof is exactly the opposite of the one I faced when I was an untutored wage slave. Whereas I was too inept and intellectually ill-equipped to craft a proof that would persuade my colleague (or even myself, for that matter), Socrates is a figure of such potent authority that the poor kid would surely go along with anything the master said. He would put up no resistance even if Socrates were proving that 1=2. It's hard to believe that the boy will go on to prove theorems of his own.

Sadly, Hobbes didn't get much more benefit from his own geometry lesson. He became a notorious mathematical crank, claiming to have solved all the most famous problems of classical geometry, including the trisection of the angle, the squaring of the circle and the doubling of the cube. These claims were a little less foolish in the 17th century than they would be now, since the impossibility of the tasks had not yet been firmly established. Nevertheless, Hobbes's contemporaries had no trouble spotting the gaffes in his proofs.

In recent years proof has become a surprisingly contentious topic. One thread of discord began with the 1976 proof of the four-color-map theorem by Kenneth Appel, Wolfgang Haken and John Koch of the University of Illinois at Urbana-Champaign. They showed that if you want to color a map so that no two adjacent countries share a color, four crayons are all you'll ever need. The proof relied on computer programs to check thousands of map configurations. This intrusion of the computer into pure mathematics was greeted with suspicion and even disgust. Haken and Appel reported a friend's comment: "God would never permit the best proof of such a beautiful theorem to be so ugly." Apart from such emotional and aesthetic reactions, there was the nagging question of verification: How can we ever be sure the computer didn't make a mistake?

Some of the same issues have come up again with the proof of the Kepler conjecture by Thomas C. Hales of the University of Pittsburgh (with contributions by his student Samuel P. Ferguson). The Kepler conjecture—or is it now the Hales-Ferguson theorem?—says that the pyramid of oranges on a grocer's shelf is packed as densely as possible. Computations play a major part in the proof. Although this reliance on technology has not evoked the same kind of revulsion expressed three decades ago, worries about correctness have not gone away.

Hales announced his proof in 1998, submitting six papers for publication in Annals of Mathematics. The journal enlisted a dozen referees to examine the papers and their supporting computer programs, but in the end the reviewers were defeated by the task. They found nothing wrong, but the computations were so vast and formless that exhaustive checking was impractical, and the referees felt they could not certify the entire proof to be error-free. This was a troubling impasse. Eventually the Annalspublished "the human part of the proof," excluding some of the computational work; the full proof was published last summer in Discrete and Computational Geometry. Interestingly, Hales has turned his attention to computer-assisted methods of checking proofs.

Brian Hayes

In the case of another famously problematic proof, we can't put the blame on computers. An effort to classify the mathematical objects known as finite simple groups began in the 1950s; the classification amounts to a proof that no such groups exist outside of five known categories. By the early 1980s the organizers of the project believed the proof was essentially complete, but it was scattered across 500 publications totaling at least 10,000 pages. Three senior authors undertook to revise and simplify the proof, bringing together the major ideas in one series of publications. The process, still unfinished, is testing the limits of the human attention span—and lifespan. (One of the leaders of the revision program died in 1992.)

If some proofs are too long to comprehend, others are too terse and cryptic. Four years ago the Russian mathematician Grigory Perelman announced a proof of the Poincaré conjecture. This result says—here I paraphrase Christina Sormani of Lehman College—that if a blob of alien goo can ooze its way out of any lasso you throw around it, then the blob must be nothing more than a deformed sphere, without holes or handles. Everyday experience testifies to this fact for two-dimensional surfaces embedded in three-dimensional space, and the conjecture was proved some time ago for surfaces (or "manifolds") of four or more dimensions. The hard case was the three-dimensional manifold, which Perelman solved by proving a more-general result called the geometrization conjecture.

Perelman's proof is not easy reading. Sormani explains its strategy as "heating the blob up, making it sing, stretching it like hot mozzarella and chopping it into a million pieces." I applaud the vividness of this description, and yet it has not helped me follow Perelman's logic. Given the difficulty of the work, others stepped in to explicate and elaborate, publishing versions of the proof considerably longer than the original. These were not popularizations aimed at the general public; they were meant to explain the mathematics to mathematicians. Controversy ensued. Were the explicators trying to claim a share of the glory? Did they deserve a share? In August Perelman was awarded a Fields Medal, the biggest prize in mathematics, which may put an end to the controversy. (Perelman refused the prize.)

These incidents and others like them have led to talk of a crisis in mathematics, and to fears that proof cannot be trusted to lead us to eternal and indubitable truth. Already in 1972 Philip J. Davis of Brown University was writing:

The authenticity of a mathematical proof is not absolute but only probabilistic.... Proofs cannot be too long, else their probabilities go down, and they baffle the checking process. To put it another way: all really deep theorems are false (or at best unproved or unprovable). All true theorems are trivial.

A few years later, in Mathematics: The Loss of Certainty, Morris Kline portrayed mathematics as a teetering superstructure with flimsy timbers and a crumbling foundation; continuing with this architectural conceit, he argued that proofs are "a façade rather than the supporting columns of the mathematical structure."

Davis and Kline both wrote as mathematical insiders—as members of the club, albeit iconoclastic ones. In contrast, John Horgan positioned himself as a defiant outsider when he wrote a Scientific American essay titled "The Death of Proof" in 1993. "The doubts riddling modern human thought have finally infected mathematics," he said. "Mathematicians may at last be forced to accept what many scientists and philosophers already have admitted: their assertions are, at best, only provisionally true, true until proved false."

My own position as an observer of these events is somewhere in the awkward middle ground, neither inside nor outside. I am certainly not a mathematician, and yet I have been an embedded journalist in the math corps for so long that I cannot claim detachment or impartiality. I report from the no man's land between the fences.

I do believe there is a kind of crisis going on—but only because the entire history of mathematics is just one crisis after another. The foundations are always crumbling, and the barbarians are always at the gate. When Haken and Appel published their computer-aided proof, it was hardly the first time that a technical innovation had stirred up controversy. In the 17th century, when algebraic methods began intruding into geometry, the heirs of the Euclidean tradition cried foul. (Hobbes was one of them.) At the end of the 19th century, when David Hilbert introduced nonconstructive proofs—saying, in effect, "I know x exists, but I can't tell you where to look for it"—there was another rebellion. Said one critic: "This is not mathematics. This is theology."

Brian Hayes

All in all, the crisis of the present moment seems mild compared with that of a century ago, when paradoxes in set theory led to Gottlob Frege's lament, "Alas, arithmetic totters." In response to that crisis, a rescue party of ambitious mathematicians, led by Hilbert, set out to rebuild the edifice of mathematics on a new foundation. Hilbert's plan was to apply the process of proof to proof itself, showing that the axioms and theorems of mathematics can never lead to a contradiction—that you can never prove both "x" and "not x." The outcome is well known: Kurt Gödel proved instead that if you insist on consistency, there are true statements you can't prove at all. You might think that such a Tower of Babel catastrophe would scatter the tribes of mathematics for generations, but mathematicians have carried on.

That some of the latest proofs from the frontiers of mathematical research are difficult and rely on novel tools seems to me utterly unexceptional. Of course the proofs are hard to digest; they were hard to create. These are solutions to problems that have stumped strong minds for decades or centuries. When Perelman's proof of the Poincaré conjecture defeats my attempts at understanding, this is a disappointment but not a surprise. (I can't keep pace with Olympic marathoners either.) If I have a worry about the state of mathematics, it's not the forbidding inaccessibility of the deepest thinkers; rather it's my own clumsiness when I tackle perfectly humdrum problems, far from the frontiers of knowledge.

Donald E. Knuth of Stanford University once appended a note to a computer program: "Beware of bugs in the above code; I have only proved it correct, not tried it." Coming from Knuth, the warning is a joke, but if you hear it from me, you should take it seriously.

Allow me to bring back Socrates and the slave boy for a little exercise in probability theory. They are arguing about sports: In a best-of-seven tournament (such as the baseball World Series), what is the probability that the contest will be decided in a four-game sweep? Assume that the teams are evenly matched, so that each team has a 50-50 chance of winning any single game.

Socrates: In a best-of-seven series, how many ways can a team score a clean sweep?

Boy: Just one way. You've got to win four in a row, with no losses.

Socrates: And how many ways could we form a five-game series, with four wins and a single loss?

Boy: Well, you could lose either the first, the second, the third or the fourth game, and win all the rest.

Socrates: And what about losing the fifth game?

Boy: If you win the first four, you don't play a fifth.

Socrates: So to build a five-game series, we take a four-game series and insert an additional loss at each position except the last, is that right?

Boy: I guess.

Socrates: Therefore we can create a six-game tournament by taking each of the five-game series and inserting an additional loss in each of five positions. Thus we have 4*5, or 20, six-game series.

Boy: If you say so.

Socrates: Then each of the 20 six-game series can be expanded in six different ways to make a seven-game series, and so there are 120 seven-game variations. Adding it all up, we have 1+4+20+120 cases, for a total of 145. Exactly one of these cases is a clean sweep, and so the probability is 1/145.

At this point the boy, who knows something about baseball, points out that of 98 best-of-seven World Series, 19 have been won in a clean sweep, suggesting an empirical probability nearer to 1?5 than to 1?145. Socrates is not swayed by this fact.

Socrates: Do not be distracted by mere appearances; here we study ideal baseball. Let me demonstrate by another method.

Boy: Go for it.

Socrates: Suppose for the moment that the teams always play a full schedule of seven games. Since each game can have either of two outcomes, there are 27, or 128, possible sequences. Now go through the list of 128 patterns and remove all of those in which play continues after a team has already achieved four victories. I find that there are 70 distinct patterns remaining. Two of those patterns are clean sweeps—one for each team—and so the probability is 1?35.

Boy: You're getting warmer. Try this: Winning four games in a row has a probability of 1/2 * 1/2 * 1/2 * 1/2, or 1/16. Either team can have the clean sweep, so the combined probability is 1/16 + 1/16=1/8.

Deductive logic has led us to three different answers, at least two of which must be wrong. Although I have made Socrates the fool in this comedy of errors, I cannot conceal that the blunders are actually my own. A few years ago I had occasion to perform this calculation, and I got a wrong answer. How did I know it was wrong? It disagreed with a simple computer experiment: a program that simulated a million random World Series and produced 124,711 four-game clean sweeps. (That's almost exactly 1?8.)

Brian Hayes

What does it mean that I put greater trust in the output of a computer program than in my own reasoning? Well, I am not arguing that computer simulations are superior to proofs or more reliable than deductive methods. It's not that there's something wrong with classical mathematics. All three of the approaches discussed in my pseudo-Socratic dialogue can be made to yield the right answer, if they are applied with care. But proof is a tool that can also prove you a fool.

For those who believe they could never possibly commit such an error, I offer my congratulations, along with a reminder of the infamous Monty Hall affair. In 1990 Marilyn vos Savant, a columnist for Parade magazine, discussed a hypothetical situation on the television game show "Let's Make a Deal," hosted by Monty Hall. A prize is hidden behind one of three doors. When a contestant chooses door 1, Hall opens door 3, showing that the prize is not there, and offers the player the option of switching to door 2. Vos Savant argued (correctly, given certain assumptions) that switching improves the odds from 1?3 to 2?3. Thousands disagreed, including more than a few mathematicians. Even Paul Erd?os, a formidable probabilist, got it wrong. It was a computer simulation that ultimately persuaded him.

The law seeks proof beyond a reasonable doubt, but mathematics sets a higher standard. In a tradition that goes back to Euclid, proof is taken as a guarantee of infallibility. It is the flaming sword of a sentry standing guard over the published literature of mathematics, barring all falsehoods. And the literature may need guarding. If you view mathematics as a formal system of axioms and theorems, then the structure is dangerously brittle. Admit just one false theorem and you can prove any absurdity you please.

The special status of mathematical truth, setting the discipline apart from other arts and sciences, is a notion still cherished by many mathematicians, but proof has other roles as well; it's not just a seal of approval. David Bressoud's book Proofs and Confirmations gives what I believe is the best-ever insider's account of what it's like to do mathematics. Bressoud emphasizes that the most important function of proof is not to establish that a proposition is true but to explain why it's true. "The search for proof is the first step in the search for understanding."

Brian Hayes

And of course there's more to mathematics than theorems and proofs. A genre calling itself experimental mathematics is thriving today. There are journals and conferences devoted to the theme, and a pair of books by Jonathan Borwein and David Bailey serve as a manifesto for the field. Not that practitioners of experimental math want to abandon or abolish proof, but they give greater scope to other activities: playing with examples, making conjectures, computation.

Still, there are ideas that never could have entered the human mind except through the reasoning process we call proof. Which brings me back to the trisection of angles.

The fact that trisection is impossible is common knowledge, but the reason it's impossible—the content of the proof—is not so widely known. Many authors mention it but few explain it. Even Underwood Dudley's splendid send-up of mathematical cranks, A Budget of Trisections, does not go through the proof step by step.

The origin and history of the proof are also somewhat shadowy. There is a lesson here for those who seek immortality by solving some trophy problem in mathematics. Trisection had been near the top of the most-wanted list for two millennia, and yet the author of the first published proof of impossibility has not earned a place in the pantheon.

That author was the French mathematician Pierre Laurent Wantzel (1814-1848), who is hardly a household name, even in mathematical households. His proof appeared in 1837. As far as I know, it has never been reprinted and has never been published in English translation. (I have posted a crude attempt at a translation on the American Scientist Web site.) Many citations of the paper give the wrong volume number, suggesting that even some of those who refer to the proof have not read it. And to pile on one further indignity, the paper itself gets the author's name wrong: He is listed as "M. L. Wantzel."

One reason for Wantzel's obscurity may be that his proof is almost unintelligible for modern readers. Later expositors offer more lucid accounts. Felix Klein, L. E. Dickson and Robert C. Yates all published versions of the proof; so did Willard Van Orman Quine, who wrote his version in response to a $100 challenge. For a thorough and accessible book-length exposition I highly recommend Abstract Algebra and Famous Impossibilities, by Arthur Jones, Sidney A. Morris and Kenneth R. Pearson.

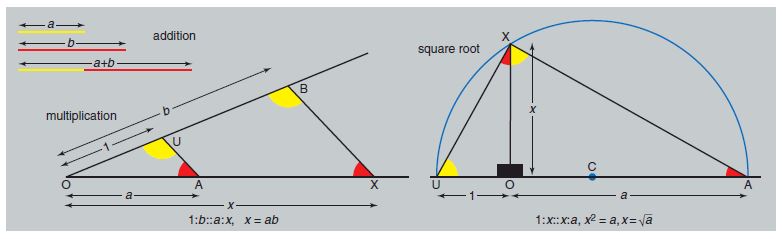

As penance for my youthful career as a trisector, I would now like to try giving a brief sketch of the impossibility proof. The basic question is: What can you do with a straightedge and compass? You can draw lines and circles, obviously, but it turns out you can also do arithmetic. If the length of a line segment represents a number, then ruler-and-compass manipulations can add, subtract, multiply and divide such numbers, and also extract square roots. Suppose you are given a segment of length 1 to start with; what further numbers can you generate? All the integers are easy to reach; you can also get to any rational number (a ratio of integers). Square roots give access to certain irrationals; by taking square roots of square roots, you can also do fourth roots, eighth roots, and so on. But that's all you can do. There is no way to extract cube roots, fifth roots or any other roots not a power of 2—which is the crucial issue for trisection.

A purported trisection procedure is required to take an angle qand produce q/3. Since the procedure has to work with any angle, we can refute it by exhibiting just one angle that cannot be trisected. The standard example is 60 degrees. Suppose the vertex of a 60-degree angle is at the origin, and one side corresponds to the positive x axis. Then to trisect the angle you must draw a line inclined by 20 degrees to the x axis and passing through the origin.

To draw any line, all you need is two points lying on the line. In this case you already have one point, namely the origin. Thus the entire task of trisection reduces to finding one more point lying somewhere along the 20-degree line. Surely that must be easy! After all, there are infinitely many points on the line and you only need one of them. But the proof says it can't be done.

To see the source of the difficulty we can turn to trigonometry. If we knew the sine and cosine of 20 degrees, the problem would be solved; we could simply construct the point x=cos20, y=sin20. (Of course we need the exact values; approximations from a calculator or a trig table won't help.) We do know the sine and cosine of 60 degrees: The values are √(3)/2 and 1/2. Both of these numbers can be constructed with ruler and compass. Furthermore, formulas relate the sine and cosine of any angle Θ the corresponding values for Θ/3. The formulas yield the following equation (where for brevity the symbol ureplaces the expression cosΘ/3):

cosΘ=4u 3-3u.

For the 60-degree angle, with cosΘ=1/2,the equation becomes 8u 3-6u=1.Note that this is a cubic equation. That's the nub of the problem: No process of adding, subtracting, multiplying, dividing and taking square roots will ever solve the equation for the value of u. (The hard part of the proof, which I'm not brave enough to attempt here, shows that the cubic equation cannot be reduced to one of lower degree.)

This proof, with its excursions into trigonometry and algebra, would have been alien to Euclid, but the conclusion is easily translated back into the language of geometry: Not a single point along the 20-degree line (except the origin) can be reached from the 60-degree line by ruler-and-compass methods. There is something hauntingly counterintuitive about this fact. The two lines live on the same plane; they even intersect, and yet they don't communicate. You can't get there from here. Like Hobbes, I wouldn't believe it, except the proof compels belief. I wonder if my old friend Dmytro would be convinced.

© Brian Hayes

Click "American Scientist" to access home page

American Scientist Comments and Discussion

To discuss our articles or comment on them, please share them and tag American Scientist on social media platforms. Here are links to our profiles on Twitter, Facebook, and LinkedIn.

If we re-share your post, we will moderate comments/discussion following our comments policy.