Magazine

November-December 2000

Volume: 88 Number: 6

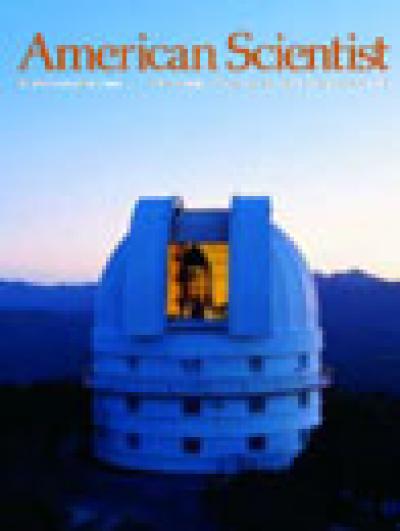

Sunset marks the beginning of an evening's observing session for the 2.1-meter Struve Telescope at the McDonald Observatory at the University of Texas. The Struve is part of a global network of modest-aperture instruments that make up the Whole Earth Telescope collaboration, which studies the remnants of Sun-like stars, known as white dwarfs. In "White Dwarf Stars," Steven Kawaler and Michael Dahlstrom discuss what astronomers have learned about the structure and internal composition of these stellar remnants through the new field of stellar seismology. (Photograph courtesy of McDonald Observatory/Martin Harris.)

In This Issue

- Agriculture

- Art

- Astronomy

- Biology

- Communications

- Computer

- Economics

- Engineering

- Environment

- Evolution

- Mathematics

- Physics

- Policy

- Psychology

- Technology

Scientific Publication Trends and the Developing World

Mercedes Maqueda, Eva Valdivia, Antonio Galvez

Communications

What can the volume and authorship of scientific articles tell us about scientific progress in various regions?

Gene Chips and Functional Genomics

Hisham Hamadeh, Cynthia Afshari

Biology Environment Technology

A new technology will allow environmental health scientists to track the expression of thousands of genes in a single, fast and easy test.

White Dwarf Stars

Steven Daniel Kawaler, Michael Dahlstrom

Astronomy

The remnants of Sun-like stars, white dwarfs offer clues to the identity of dark matter and the age of our Galaxy.

The Case of Agent Gorbachev

Kristie Macrakis

Anthropology Engineering Technology

East Germany acquired technology the old-fashioned way: by stealing it. But did it do its industrial enterprise any good?

Scientists' Nightstand

Symmetries Obeyed and Broken

Peter Pesic

Art Physics Review Scientists' Nightstand

A review of Lucifer's Legacy: The Meaning of Asymmetry, by Frank Close, and Antimatter: The Ultimate Mirror, by Gordon Fraser.