Flowers and Ribbons of Ice

By James Richard Carter

Beautiful, gravity-defying structures can form when water freezes under the right conditions.

DOI: 10.1511/2013.104.360

Dragging yourself out of a warm bed in the early hours of a wintry morning to go for a hike in the woods isn’t easy for everyone, but the visual treasures that await could be well worth the effort. If the weather conditions and the local flora are just right, you might come across fleeting, delicate frozen formations sprouting from certain plant stems: a veritable garden of ice.

Photograph courtesy of the author.

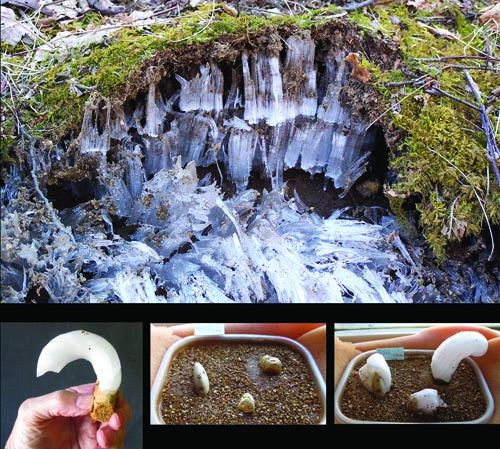

I first came across such natural ice sculptures in December 2003 while hiking in Tennessee. I couldn’t explain what I saw until I did a Web search. A year later I found similar frozen formations in a different area, associated with another plant. In November 2005 I drove through northern Kentucky on a frosty morning, alert to the possibility of additional sightings. My first one turned out to be a misperception (just plastic garbage bags), but the second proved to be genuine ice on plant stems. This time I saw it along the side of the road, where the plants had been mowed. Swirling formations of ice radiated out in all directions from the cut vertical stems. I continued to see these features for the next few hours as I drove south through Kentucky, indicating that the whole area had the necessary plant species and the right combination of temperature and moisture.

I kept exploring to learn more about the exact factors needed to create these visual wonders. A few days later in central Virginia, on a subfreezing morning following a day of rain, I ventured out to look for more of the ice blooms. Along a dirt road I found them in three forms: ribbons of ice at the base of small plant stems, needles of ice pushing up a thin layer of soil, and a rod of ice extending about 5 centimeters up from the ground. The next year I returned to the site of my first sighting in Tennessee and gathered seeds from one of the ice-producing plants, white crownbeard (Verbesina virginica), to take back to my yard in Illinois. The following winter I had ice flowers of my own, which made it much easier to observe the formation process and begin decoding the conditions behind it.

As I attempted to crack the secrets of the ice flowers, I had some historical records to draw on. Several of the plants that produce ice flowers are known by common names such as frostweed or frost-plant, indicating that their unusual winter “blooms” have long been known. Published accounts date back to the early 1800s.

One notable report was written by Sir John F. W. Herschel, son of the astronomer who discovered the planet Uranus and an accomplished scientist in his own right. In 1833 he published an illustrated paper in the London and Edinburgh Philosophical Magazine, in which he wrote that the ice “seemed to emanate in a kind of riband- or frill-shaped wavy excrescence—as if protruded in a soft state from the interior of the stem, from longitudinal fissures in its sides, . . . the structure of the ribands was fibrous, like that of the fibrous variety of gypsum, presenting a glossy silky surface.”

Photographs courtesy of the author.

Herschel’s observations seemed to set off a series of related studies. Among them, in an 1850 issue of the same magazine, physician and naturalist John LeConte of the University of Georgia made many insightful observations about whole and cut-off stems, both of which grew ice. He noted that many of the stems were dead and dry at the time of year when he made his study, although the roots might still have been alive; the ice formation therefore didn’t seem to be connected to the plant’s physiological functions. He observed, “At a distance they present an appearance resembling locks of cotton-wool, varying from four to five inches in diameter, placed around the roots of plants; and when numerous the effect is striking and beautiful.”

Photograph courtesy of the author.

In 1892, American naturalist William Hamilton Gibson wrote about ice “glistening like specks of white quartz down among the blown herbage close to the base of the stem. It is a flower of ice crystal of purest white which shoots from the stem, bursting the bark asunder, and fashioned into all sorts of whimsical feathery curls and flanges and ridges.” He noted that the formations seemed to contain more water than a plant could supply as sap, indicating that moisture from the soil must be involved.

A number of other researchers from that era looked into the aspects of plant physiology related to the production of ice flowers. One of the more thorough investigations came from William Coblentz, a physicist at the National Bureau of Standards in Washington, DC. In 1914 Coblentz cut off stems of plants and inserted them in moist soil in his lab to prove that roots weren’t necessary to form the ice curls, and that the ice didn’t form from water vapor condensing out of the air (as is the case for frost).

A century later, ice flowers remain something of an enigma. There isn’t even a consensus about what to call them. Ice flowers are also commonly known as ice fringes, ice filaments, rabbit ice, and frost flowers. That last name is a bit of a misnomer, because they do not form in the same way as frost. Bob Harms of the University of Texas at Austin’s Plant Resources Center has proposed the name crystallofolia (Latin for “ice leaves”) to describe these unusual formations. (Similarly odd ice structures can sometimes occur on rocks or from pipes; see more about these phenomena below.)

Photograph courtesy of bobbi fabellano.

Once I began growing my own ice flowers, I wanted to learn all I could about the phenomenon. There has been little formal study of the process, but by gathering together what is known, a picture is starting to emerge. Not all plants will produce ice. I’ve compiled verifiable reports of about 40 species worldwide that are known ice-flower producers. All appear to be herbaceous species, meaning they lack woody stems and their leaves and shoots die back at the end of their growing season. Although most ice flowers are associated with dead stems, some can occur on green stems near the end of the growing season when the temperatures are right. In addition, another ice formation, called hair ice, grows only from dead wood (see figure above).

To add some clarity, Harms made detailed observations of plants that produce ice flowers. He notes that their stems all contain pronounced xylem rays, vessels that transport sap from the center to the periphery of the stalk. Because ice flowers grow perpendicular to the stem, it seems likely that these vessels are their fluid source. Harms suggests that plants that do not produce ice may have less developed xylem rays. But he has struggled to identify the exact range of pore spacing and water permeability that enables certain plant stems to turn water into these specific shapes of ice.

Photographs courtesy of the author.

Once the water emerges from the stem, on the other hand, the process that produces the ice growth is now known: ice segregation, whereby water moves through a medium (be it a plant stem, piece of wood, soil, or rock) and freezes at a colder surface. In 1989, Hisashi Ozawa and Seiiti Kinosita of Hokkaido University demonstrated this process by placing an ice crystal on top of a microporous filter, which in turn was overlying a pool of water that was supercooled. (A supercooled liquid is one in which the temperature is below freezing but the liquid cannot solidify without some kind of imperfection, or nucleation site, such as a speck of dust or an ice crystal, to seed the process.) The filter pores were so small that ice could not move through them, but water could. As it flowed to the surface, the water froze to the base of the ice, added to it and pushed it upward, and in the process released latent heat from crystallization. That warmth kept the water below from freezing, allowing the cycle to continue for as long as the water supply was available.
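A back-of-the-envelope estimate shows why that latent heat matters. It uses standard textbook values, not anything measured in Ozawa and Kinosita’s experiment: freezing water releases about 334 joules per gram (the latent heat of fusion, $L_f$), while warming liquid water by 1 kelvin takes about 4.18 joules per gram (its specific heat, $c_w$).

$$
Q = m\,L_f \approx (1\ \mathrm{g})(334\ \mathrm{J\,g^{-1}}) = 334\ \mathrm{J},
\qquad
\Delta T = \frac{Q}{m_w\,c_w} \approx \frac{334\ \mathrm{J}}{(80\ \mathrm{g})(4.18\ \mathrm{J\,g^{-1}\,K^{-1}})} \approx 1\ \mathrm{K}.
$$

Each gram of water that freezes onto the ice thus releases enough heat to warm roughly 80 grams of water by about 1 kelvin, which helps explain how the growing ice can keep the supply beneath it liquid. The estimate ignores heat lost to the cold air and surroundings, so it is an upper bound on the warming, not a prediction.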

In the case of ice flowers, similar conditions most commonly emerge overnight, as the air temperature falls below freezing while the moisture in the ground remains unfrozen. But I have seen ice start to grow on plant stems in mid-afternoon when cold air flows into the area. Once the soil freezes to some depth these processes cease, but when thawing occurs they may be renewed. In the middle latitudes, the right conditions for ice flowers can happen any time between mid-fall and early spring.

I am still pleasantly surprised that something so common as ice can have forms that remain so rarely seen and incompletely understood. Everyday water takes on many strange shapes. Now you have more to look for when it gets cold.

In addition to ice flowers, I’ve spotted small needles and rods of ice. Scientific publications on needle ice, which appears on the ground pushing up thin layers of soil, also date back to the mid-1800s. Again, ice segregation is at work here, so the underlying medium must have pores large enough to conduct water upward to supply the growing ice, yet small enough that capillary action can overcome gravity and wick water toward the surface. A 1981 study by Vernon Meentemeyer at the University of Georgia and Jeffrey Zippin of the U.S. Army Corps of Engineers noted that the soil must contain significant clay and silt to have the right porosity for needles to form. The image at top right shows needle ice that developed below moss-covered sod in Virginia. It appears that the needle ice grew over two days, with a visible boundary separating the growth of one night from the next.
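The requirement that pores be small enough for capillary action to overcome gravity can be illustrated with Jurin’s law. This is an idealized sketch: it assumes straight cylindrical pores and water that fully wets the pore walls ($\theta \approx 0$, so $\cos\theta \approx 1$), whereas real soils, stems, and rocks are far messier. Here $h$ is the height to which water rises in a pore of radius $r$, $\gamma \approx 0.076\ \mathrm{N\,m^{-1}}$ is the surface tension of water near 0 degrees Celsius, $\rho$ is the density of water, and $g$ is gravitational acceleration.

$$
h = \frac{2\gamma\cos\theta}{\rho\,g\,r},
\qquad
h\big|_{r = 1\ \mathrm{mm}} \approx \frac{2\,(0.076\ \mathrm{N\,m^{-1}})}{(1000\ \mathrm{kg\,m^{-3}})(9.81\ \mathrm{m\,s^{-2}})(10^{-3}\ \mathrm{m})} \approx 1.5\ \mathrm{cm}.
$$

Shrinking the radius to 1 micrometer raises the estimate a thousandfold, to roughly 15 meters. Finer pores therefore lift water far higher, but they also conduct it more slowly, which is why the medium needs a balance between the two.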

Photograph at top courtesy of Susan Wells Rollinson. Photographs at bottom courtesy of the author.

Rods of ice grow from pebbles instead of soil. They can reach several centimeters in length and sometimes pick up other small pebbles as they extend. Ice enthusiasts sent me photos of ice growing from small rocks, and later they mailed me representative pebbles. I placed the pebbles on an area of bare soil that had previously produced needle ice. A cap of ice soon grew on one rock. The next day it partially melted; then it grew new ice the following night, pushing the old ice up and demonstrating that the growth can be interrupted and resume over multiple days.

These rocks are sedimentary, including some cherts, which are composed mostly of silica. Some pieces of brick and pottery can also grow ice caps. Pebbles that produce sizable growths likely need sufficient porosity and permeability. I test pebbles by how much water they wick up when placed on a wet sponge for 15 seconds: If a pebble gains significant weight in that time, I’ve found it will likely produce ice. Very few rocks exhibit this wicking.

Based on these experiences, I next tried to create pebble ice in a freezer, using a setup that anyone could re-create at home. I used a small picnic ice chest with an adjustable light bulb as a heat source at the base, overlaid by an aluminum sheet to disperse the warmth. Above the bulb I placed a plastic bucket filled with wet sand, surrounded by insulation, and set wet pebbles in the sand. I adjusted the light bulb to balance temperatures so that the top of the sand stayed unfrozen, while the rocks extending above the surface, exposed to the freezing air, were likely to form ice.

If I get all the conditions just right, ice may grow on the sides or top of a rock for as long as moisture is available (before and after freezing images above, at bottom middle and right). Sometimes the rods are quite spectacular (see image above at bottom left). Ice may grow from the sides of rocks, even forming a collar or tube, if the freezing plane (the location where temperatures are optimal for ice growth) is below the top of the rock. When I replace the sand with heavier soil that has a high clay content, the same setup grows needle ice from the soil that pushes the pebbles upward.

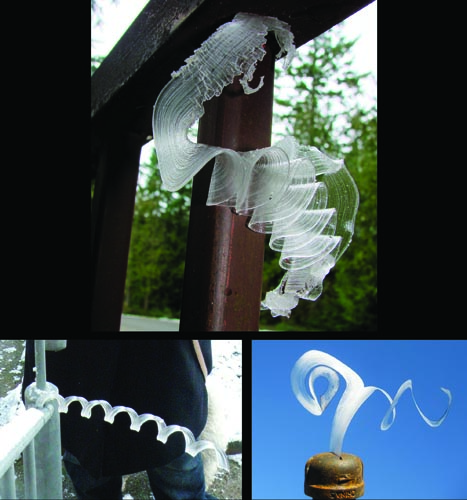

Curious observers from around the world have sent me photographs of spectacular spirals and ribbons of ice that have naturally extruded from metal pipes in various locations (as shown at right, top and bottom left). To explain how these shapes occur, I took up the task of trying to replicate the process and growing my own ice spirals, and I’ve developed a procedure that others can follow.

Ice occupies about nine percent more volume than the water it forms from, so water expands as it freezes. My first attempt at deliberately reproducing this phenomenon used plastic pipes that I had on hand, but they soon shattered. Black iron pipes, however, are sometimes able to withstand the pressure.
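The figure of about nine percent follows from the densities of liquid water and ice at 0 degrees Celsius, roughly 999.8 and 916.7 kilograms per cubic meter (standard reference values). Because the mass does not change on freezing, the volumes scale inversely with density:

$$
\frac{V_{\mathrm{ice}}}{V_{\mathrm{water}}} = \frac{\rho_{\mathrm{water}}}{\rho_{\mathrm{ice}}} \approx \frac{999.8\ \mathrm{kg\,m^{-3}}}{916.7\ \mathrm{kg\,m^{-3}}} \approx 1.09.
$$

A pipe holding 1 liter of water would therefore need room for roughly 90 extra milliliters once the water froze solid. If the walls cannot stretch and the ends are sealed, that growth has to go somewhere: either the pipe splits or ice is forced out of an opening.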

Photograph at top courtesy of Sheryl Terris. Photograph at bottom left courtesy of Raul Baz. Photograph at bottom right courtesy of the author.

My tests showed that one end of the pipe must be flattened, or capped and cut with a small hole or slit, to force the ice out in dynamic shapes. The most successful method I’ve developed is to temporarily close off this narrowed end of the pipe and hold it upside down, then fill that end with a small amount of water and allow it to freeze, which forms a plug of ice. I then fill the rest of the pipe with chilled water (but not supercooled water, or it will freeze on contact) and cap the other end. I flip the pipe upright, so that the slit and ice plug are at the top, and set it outside on a freezing night. With luck, as the water in the pipe freezes and expands, it will force the plug of ice out through the narrowed end or slit and create long curls (as shown above on bottom right).

These experiments suggest that natural ice ribbons from pipes and fences develop over two or more days. On the first night when temperatures drop below 0 degrees Celsius, water in the pipe freezes. The next day, some of the ice melts while the remainder floats to the top, creating a plug of ice. When temperatures drop again the next night, the water freezes and expands, pushing the ice plug out of any available holes. I wonder how many other dramatic ice extrusions have gone unseen because they appeared in out-of-the-way places on cold mornings.
