Trust and Bias in Robots

By Ayanna Howard, Jason Borenstein

These elements of artificial intelligence present ethical challenges, which scientists are trying to solve.

Suppose you are walking on a sidewalk and are nearing an intersection. A flashing sign indicates that you can cross the street. To cross safely, you have to rely on nearby cars to respect the rules of the road. Yet what should happen when pedestrians (and others) are interacting with a self-driving car? Should we, as pedestrians, trust that this car, without a human behind the wheel, will stop when we enter a crosswalk? Are we willing to stake our lives on it?

Robots (whether on the road, as with self-driving cars, or working in buildings, as with emergency evacuation robots) are influencing human decision-making processes. Yet whether placing trust in the technology is warranted, especially when it can mean life or death, is an open question. For example, are a self-driving car’s sensors trained on enough representative data to be able to evaluate when an adult versus a child walks out between two cars into the street?

As robots more fully interact with humans, the role of human-robot trust and of biases integrated into the technology must be carefully investigated. The focus on trust emerges because research shows that humans tend to trust robots similarly to the way they trust other humans; thus, the concern is that people may underappreciate or misunderstand the risk associated with handing over decisions to a robot. For example, if a robotic hospital assistant is supposed to serve a drink but it cannot pick up a particular type of cup, the result may be an inconvenience for the patient receiving the drink. Or in the case of an autonomous vehicle, misplaced trust (assuming the car will stop) could be deadly for those inside and outside of the vehicle.

Inappropriate calibration of trust in intelligent agents is a serious problem, and when conjoined with bias, the potential for harm greatly intensifies. Bias involves the disposition to allow our thoughts and behaviors, often implicitly or subconsciously, to be affected by our generalizations or stereotypes about others. For example, depending on the cultural biases of the algorithm, self-driving cars might be more inclined to save younger rather than older pedestrians in cases where an accident cannot be avoided. While bias is not always problematic, it can frequently lead to unfairness or other negative consequences. As the artificial intelligence (AI) and robotics community builds its technologies, algorithmic bias is an emerging ethical challenge.

These biases can influence our relationships with social robots based on our own gender, ethnicity, and age stereotypes. For example, research has shown there are differences in accuracy when these systems try to recognize darker skin tones or different dialects. Arguably, an occasional mistake when interacting with a customer-service chatbot might not pose a major harm. Yet the harms may amplify when dealing with medical transcription chatbots or border surveillance applications. Many entities, including government agencies, are talking about placing profound trust in computing technology. Yet biases built into the technology have a propensity to shape perceptions of the truth.

In our own research, we frame issues of trust and bias in robotic technology through the lens of two human-robot interaction domains: healthcare and autonomous vehicles. Because these domains reflect common threads of building on norms found in human–human interpersonal interaction and represent market areas of widespread adoption, they can shed light on ethical concerns that may emerge in scenarios where robots interact with humans more broadly. We also explore measures, such as AI that explains its decision-making processes, that seek to address concerns about overtrust and bias.

Many different types of robots are making their way into healthcare environments. Telepresence robots are also starting to be used for patient care. In recent years, socially assistive robots for improving the rehabilitation outcomes of individuals with disabilities or impairments have been gaining acceptance as a treatment option. For example, through interactive play, socially interactive humanoid robots have been used to help children with cerebral palsy perform physical exercises. The effectiveness of such interventions depends largely on designing robot behaviors that increase a patient’s engagement, and thus compliance with therapeutic objectives.

Although patients are experiencing important benefits from robotic technology, the healthcare realm can highlight the phenomenon of intertwined trust and bias. When patients interact with a physician, they may be reluctant to question the physician’s authority; similarly, patients may be averse to questioning the guidance of their assistive robot. Children and older adults with disabilities might be particularly susceptible to aspects of overtrust in social bonding situations with robots, because these populations may look to their robots to produce viable solutions that enhance their quality of life.

Our own research shows that trust and compliance increase when participants interact with robot therapy partners rather than with a human therapist. In these therapy exercise scenarios, participants not only comply with the exercise guidance provided by their robot therapy partners but also tend to report higher trust. Healthcare professionals and other adults also tend to overtrust robotic systems. Because of the human inclination to rely on computing devices, even trained professionals may defer to a robot when they should not, for instance when deciding whether a rehabilitation exercise should continue.

The algorithms encoded into robots are often designed based on learned data from human experts, such as from a collection of physical therapists. Data, such as what may be used as a basis to classify the emotional state of a child or to make recommendations based on an individual’s gender, might be a well-intended foundation for a robot’s decision-making. Yet the data set could be misinterpreted or not truly representative of reality. For example, if a rehabilitative robot is interacting with a female child, its training data set might indicate that female patients do not like video games and thus remove from consideration the use of such therapy. The robot’s decision is especially problematic given that an unintended, and potentially harmful, consequence could be adherence to robot guidance that is based on historical biases.

A robot might perpetuate a misguided stereotype about a patient merely because that person resembles other patients in some ways. For instance, a system trained on such data might conclude that robots shouldn’t be emotionally engaging with boys because some studies show that girls respond better to emotional stimuli from robots than boys do. Yet rather than categorically deciding that video game therapy or emotional stimuli should not be tried when interacting with a specific gender, the robot could instead be programmed to evaluate which type of video games or emotional behaviors to deliver.

Many factors can compromise the quality of data on which robots rely. Suppose the training data set comprises the decisions made by radiologists about how to read an MRI; the relevant diagnoses can be infused with bias because radiologists are human beings and have biases like the rest of us. Confirmation bias can, for instance, interfere with the evaluation of whether a patient has a brain tumor if it is assumed that the condition exists prior to looking at the patient’s MRI.

The propensity for trust and the potential of bias may have a direct impact on the overall quality of healthcare provided to each patient. As a result, measures need to be put in place to address the issue; such approaches could include developing large data sets that represent various diverse populations and having the AI systems monitor their own outputs and analyze various “what-if scenarios” to ensure the accuracy of results across these populations. Moreover, regulators and other entities could refine data quality standards, and revisit the tenets of the informed consent process with the goal of making AI technologies less opaque to patients.
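As one illustration of what checking “the accuracy of results across these populations” could look like in practice, the following minimal Python sketch computes a classifier’s accuracy separately for each group and flags a large gap between groups. The function names, the record format, the toy data, and the 0.05 tolerance are all hypothetical choices made for this illustration; they are not drawn from any particular healthcare system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for an iterable of (group, predicted, actual)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def audit(records, tolerance=0.05):
    """Flag the system if accuracy differs across groups by more than `tolerance`.

    The tolerance is an assumed, illustrative threshold, not a standard.
    """
    per_group = accuracy_by_group(records)
    gap = max(per_group.values()) - min(per_group.values())
    return {"per_group": per_group, "gap": gap, "flagged": gap > tolerance}


# Toy data (made up): the system is right for every group_a case
# but for only half of the group_b cases.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
report = audit(records)
print(report)
```

A check of this kind only reveals a disparity; deciding what counts as an acceptable gap, and which groups to compare, remains a judgment for designers, clinicians, and regulators.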

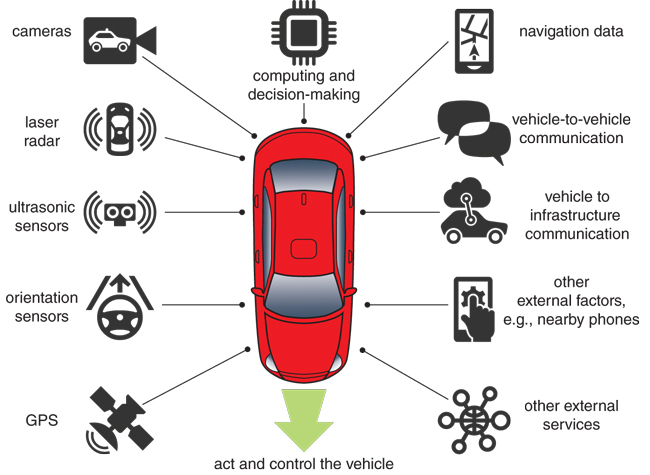

Another realm that highlights the phenomenon of intertwined trust and bias is self-driving cars. These vehicles, with different levels of driving autonomy, are being brought to market by a variety of companies, including traditional automobile manufacturers and those new to the vehicle space. Given that a significant percentage of those who report difficulties accessing transportation are individuals with disabilities, many envision a range of potential benefits emerging from the autonomous vehicle technology, which will increase mobility for those with physical disabilities or impairments. Yet for the near future, humans will not just be passengers in self-driving cars; the technology will require that humans are ready to take over driving tasks and intervene during at least some critical situations where safety is in jeopardy.

At least for certain types of autonomous vehicles, the design of the autopilot and its interface assumes that the humans riding in the cars are paying sufficient attention, have enough time, and know how to act when it is required of them. Unfortunately, tragic cases have already occurred where human drivers were not fully prepared to take over an autonomous vehicle’s operation. In two reported cases, the drivers were fatally injured; in another case, a pedestrian was killed when struck by an autonomous vehicle. Research in the autonomous vehicle domain has shown that when humans are situated in a simulated driving scenario, they tend to defer to an automated system.

Couple these findings with the algorithms in autonomous vehicles that are designed to brake for hazards. The automated systems must be capable of deciphering vague or ambiguous data: Is that sunlight reflecting from the road, sensor noise, or a small child? Again, determining how best to construct these algorithms and weigh the resulting decisions gives rise to another aspect of algorithmic bias. Briefly stated, the algorithms might incorrectly interpret a situation because their decisions are based on flawed or omitted data. One of the primary information sources that feeds the intelligence of many autonomous vehicles is computer vision algorithms. They allow the system to see and interpret its surroundings, whether that includes the road, other cars, or even pedestrians. Computer vision algorithms have their own inherent biases, though, especially when it comes to interpretation. For example, various facial recognition systems struggle to identify non-Caucasian faces with the same rate of accuracy as Caucasian faces. Vision systems can also be “tricked” into seeing things that are not there.

Concerns about trust and bias become more salient when we remind ourselves that autonomous vehicles might be given the responsibility to make life-and-death decisions; such decisions affect not only those in the cars but also pedestrians and others around the vehicles. Part of the solution is making an autonomous vehicle’s decision-making process more transparent and less of a “black box.” AI systems should express their decisions in a way that is meaningful to users, similar to how robots can learn from naturally expressed human instruction. For instance, if a self-driving car has difficulty interpreting ambiguous data (is that sunlight or a person?), the system could inform the user of its uncertainty. Currently, even designers can sometimes struggle to predict an autonomous vehicle’s behaviors. Also, driving is, by definition, a social activity as it involves a range of communication signals from hand gestures and honking to unspoken rules, which can vary by country, state, and even by city. Along with the ability to interpret such signals, achieving the goal of transparency may entail that autonomous vehicles have the ability to communicate their intent effectively to passengers and others on the road. Otherwise, the technology’s use may pose a troubling level of risk.

Preventing overtrust of robots—the complete faith put in self-driving cars, for example—or other computing devices may require that people using these intelligent agents have a fuller understanding of how the technology functions and what its associated limitations are. This mitigation could entail the use of warnings that resonate with users, such as a rehabilitative robot saying “please contact your physician; injury may result if you continue.”

As is already well known, overly legalistic and technical jargon is not a guaranteed means for ensuring that someone adequately comprehends the associated risks of using a technology. Transparency and clarity are important goals to uphold, especially when people may be interacting with a device that can affect their safety. For example, research has shown that having an AI system say it “doesn’t know” when, for instance, information is incomplete can enhance user safety in safety-critical settings.
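One simple way a system could be made to say it “doesn’t know” is to have it abstain whenever its most likely interpretation falls below a confidence threshold. The short Python sketch below illustrates the idea; the function name, the labels, and the 0.8 threshold are hypothetical, and a real system would need carefully calibrated probabilities for such a rule to be meaningful.

```python
def decide(probabilities, threshold=0.8):
    """Return the most likely label, or abstain when confidence is too low.

    `probabilities` maps each candidate label to the system's estimated
    probability. The 0.8 threshold is an assumed value for illustration.
    """
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < threshold:
        return "I don't know"
    return label


# Ambiguous sensor reading: the system declines to commit.
ambiguous = decide({"pedestrian": 0.55, "sunlight": 0.45})

# Clear reading: the system reports its interpretation.
clear = decide({"pedestrian": 0.95, "sunlight": 0.05})

print(ambiguous)
print(clear)
```

In a safety-critical setting, an abstention would presumably trigger a cautious fallback, such as slowing down or alerting the user, rather than simply reporting uncertainty.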

Regarding the issue of bias, generally accepted rules or standards need to be put in place to improve the quality of the data used to “teach” algorithms. For example, the Toronto Declaration is a document created by Amnesty International and Access Now that seeks to influence the design of machine-learning algorithms. It provides the general recommendation that we avoid “existing patterns of structural discrimination” against, for example, race and gender when AI systems are released into the world. Some companies have indicated that government regulations might be needed, whereas some professional organizations have suggested guidelines for developers (such as those by the Institute of Electrical and Electronics Engineers) to ensure that AI systems are more directly “aligned” with human well-being and perhaps the Universal Declaration of Human Rights. Yet how standards for AI systems should be promulgated and enforced is still an open question.

One promising strategy for mitigating overtrust and bias is “explainable AI,” in which there would be some level of transparency about how AI makes a decision. The European Commission, for example, is advocating for increased funding for explainable AI research. Right now, few AI algorithms have been designed to fully explain their decisions to humans in a language that is understandable to them. For example, in healthcare, by explaining the reasoning behind a patient’s likelihood of readmission to the hospital, physicians can have a fuller basis for accepting or rejecting predictions and recommendations. In the self-driving car scenario, though, the process of when and how the system should explain itself must be considered more carefully. If a system provides an explanation a split-second before a decision is made and seeks to hand off control to the user, this approach could actually increase, rather than mitigate, harm. The implementation of a similar “explanation” standard for all AI could be considered. If designers of intelligent agents do not fully know how their creations function, then how would users be able to understand these agents?

The problems of bias and overtrust are interconnected and could exacerbate one another, but research aimed at understanding these challenges is just starting to gain traction. Both individually and collectively, we need to develop strategies for mitigating the potential negative effects that robots and other intelligent agents have on individual persons and strengthen their capacity to enhance everyone’s quality of life. These strategies could include developing data quality standards for robots and encouraging expertise from a broader base of disciplines included in design teams. We, as users and developers of robotic technology, must be more proactive about identifying ethical issues emerging from the design pathways we pursue and also about calibrating the trust that we place in the technology.
