This Article From Issue

November-December 2007

Volume 95, Number 6

Page 544

DOI: 10.1511/2007.68.544

Why Beauty Is Truth: A History of Symmetry. Ian Stewart. xiv + 290 pp. Basic Books, 2007. $26.95.

Symmetry is a fundamental concept pervading both science and culture. In popular terms, symmetry is often viewed as a kind of "balance," as when Doris Day's character in the 1951 movie On Moonlight Bay insists that if her beau kisses her on the right cheek, then he should kiss her on the left cheek too. But in mathematics, symmetry has been given a more precise meaning. In his new history of mathematical symmetry, Why Beauty Is Truth, Ian Stewart gives this definition: "A symmetry of some mathematical object is a transformation that preserves the object's structure." So a symmetrical structure looks the same before and after you do something to it. A butterfly looks the same as its mirror image. The (idealized) wheel of a car may look the same after being rotated on its axle by 90 degrees (or possibly by 72 or 120 degrees, depending on the particular design).
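Stewart's definition can be tried out on the idealized wheel. The following is a minimal sketch, not anything taken from the book: it assumes the wheel is represented only by the tips of equally spaced spokes on a unit circle, and the names `spoke_tips`, `rotate`, `same_shape` and `is_symmetry` are hypothetical helpers invented for this illustration.

```python
import math

def spoke_tips(n_spokes):
    """Tips of n equally spaced spokes on the unit circle (an assumed wheel model)."""
    return [
        (math.cos(2 * math.pi * k / n_spokes), math.sin(2 * math.pi * k / n_spokes))
        for k in range(n_spokes)
    ]

def rotate(points, degrees):
    """Rotate every point about the origin by the given angle."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [(c * x - s * y, s * x + c * y) for x, y in points]

def same_shape(a, b, tol=1e-9):
    """True if each point of a sits on some point of b, and vice versa."""
    def covered(p, q):
        return all(any(math.dist(u, v) < tol for v in q) for u in p)
    return covered(a, b) and covered(b, a)

def is_symmetry(points, degrees):
    """A rotation is a symmetry if the wheel looks the same afterward."""
    return same_shape(points, rotate(points, degrees))

wheel = spoke_tips(5)
print(is_symmetry(wheel, 72))  # True: a five-spoke wheel survives a 72-degree turn
print(is_symmetry(wheel, 90))  # False: a 90-degree turn moves the spokes
```

A four-spoke wheel passes the same check at 90 degrees and a three-spoke wheel at 120 degrees, which matches the "depending on the particular design" caveat above.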

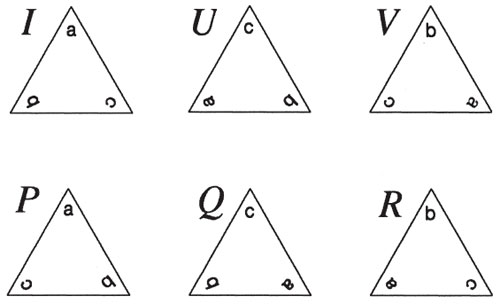

From Why Beauty Is Truth.

Although mathematical symmetry may bring to mind a regular polygon or other geometric pattern, its roots (pun unavoidable) lie in algebra, in the solutions to polynomial equations. Thus Stewart begins his account in ancient Babylon with the solution to quadratic equations. The familiar quadratic formula gives the two roots of the degree-two polynomial equation ax² + bx + c = 0. The Babylonians didn't have the algebraic notation to write down such a formula, but they had a recipe that was equivalent to it.
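For reference, the formula in question, in modern notation and assuming a is not zero, followed by a small worked case chosen only for illustration (it does not come from the book):

$$x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$$

$$x^2 - 3x + 2 = 0 \quad\Longrightarrow\quad x = \frac{3 \pm \sqrt{9 - 8}}{2} = \frac{3 \pm 1}{2}, \quad\text{so } x = 2 \text{ or } x = 1.$$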

By the 18th century, mathematics and mathematical notation had matured to the point that explicit formulas were known for the roots of general polynomials of degree three (cubic) and degree four (quartic). The formulas look complicated, but they are made of simple building blocks: addition, subtraction, multiplication, division and radicals, such as square roots, cube roots, fourth roots and so on. The existence of such formulas is summarized by the statement that all polynomials of degree four or less are solvable by radicals.

For polynomials of degree five (quintic) and higher, no such formula has been found, because none exists: Although some fifth-degree polynomials are solvable by radicals, other fifth-degree polynomials are not. This was proved by Niels Henrik Abel in 1823, although nearly correct proofs were proposed as far back as 1799. These days, the result is not considered surprising: The requirements for an equation to be "solvable by radicals" are very restrictive. With such a small vocabulary of operations, one would expect that most interesting numbers cannot be written in that form.

But Abel's proof was not the end of the story. A mystery remained: What distinguishes those polynomials of degree five that are solvable? The answer was determined in 1832 by Évariste Galois, who invented group theory to find it.

The official mathematical definition of a group does not explicitly mention symmetries; it concerns a set of elements that can be combined in pairs to form another element of the set, and certain axioms have to be satisfied. The precise definition of a group is not important for the purpose of this review; also, Why Beauty Is Truth does not provide one. Suffice it to say that the mathematical concept of a group captures the essence of symmetry in abstract terms. The focus is on the operation that reveals the symmetry. The collection of symmetries of any object is a group, and every group is the collection of symmetries of some object.
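Readers who want to see the axioms in action can check them by brute force on a small case. The sketch below assumes the standard textbook axioms (closure, associativity, identity, inverses), which neither this review nor the book spells out, and tests them on the six rearrangements of three items. The helper name `compose` and the tuple encoding are choices made for this illustration.

```python
from itertools import permutations

# Each rearrangement of three items is a tuple p, where p[i] is the
# position that item i is sent to.
elements = list(permutations(range(3)))
identity = tuple(range(3))

def compose(p, q):
    """Combine two rearrangements: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))

# Closure: combining any pair gives another element of the set.
closed = all(compose(p, q) in elements for p in elements for q in elements)

# Identity: the "do nothing" rearrangement leaves every element unchanged.
has_identity = all(
    compose(identity, p) == p == compose(p, identity) for p in elements
)

# Inverses: every rearrangement can be undone by some other one.
has_inverses = all(
    any(compose(p, q) == identity == compose(q, p) for q in elements)
    for p in elements
)

# Associativity: grouping does not matter when combining three elements.
associative = all(
    compose(compose(p, q), r) == compose(p, compose(q, r))
    for p in elements for q in elements for r in elements
)

print(len(elements), closed, has_identity, has_inverses, associative)
# 6 True True True True
```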

The symmetric objects of interest to mathematicians are not physical objects but mathematical entities. In Galois's study of polynomials, the object was the set of roots of the polynomial. For polynomials of degree five, this is just a list of five numbers. And for Galois, the operation was rearranging the list of roots.
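The operation of "rearranging the list of roots" is easy to try on a toy case. The sketch below uses a cubic with roots 1, 2 and 3, picked purely for convenience, and shows that the polynomial rebuilt from the roots is the same for every ordering, while an expression such as the difference of the first two roots is not. It only illustrates the rearranging operation; it does not compute a Galois group, and `coefficients_from_roots` is a hypothetical helper.

```python
from itertools import permutations

# Roots of x^3 - 6x^2 + 11x - 6, an example chosen for this illustration.
roots = (1, 2, 3)

def coefficients_from_roots(ordered_roots):
    """Expand the product of (x - r) factors; coefficients run from highest degree down."""
    coeffs = [1]
    for r in ordered_roots:
        # Multiply the current polynomial by (x - r).
        coeffs = [hi - r * lo for hi, lo in zip(coeffs + [0], [0] + coeffs)]
    return coeffs

all_orders = list(permutations(roots))

# The polynomial itself does not care about the order of its roots.
unchanged = {tuple(coefficients_from_roots(p)) for p in all_orders}

# An unsymmetrical expression in the roots does change under rearrangement.
changed = {p[0] - p[1] for p in all_orders}

print(unchanged)        # {(1, -6, 11, -6)}
print(sorted(changed))  # [-2, -1, 1, 2]
```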

Galois's great achievement was discovering that there are several different possibilities for which rearrangements of the set of roots can occur and that the specific collection of rearrangements determines whether or not the roots of the polynomial can be expressed as a simple formula involving square roots, cube roots and so on. Every algebraic equation has a symmetry group, called its Galois group, with an abstract structure that determines whether the roots of the polynomial can be expressed in terms of radicals. The Galois group is able to tell you that the roots can be expressed as a finite formula involving radicals, but it does not actually provide the formula. Today there are computer programs that can compute the Galois group of a polynomial; they can also tell you a formula for the roots, if the polynomial is solvable by radicals.

The first half of Stewart's book takes us up to the time of Galois's work. After Galois, group theory became its own separate mathematical subject, and today only a small part of group theory concerns the Galois groups of polynomials. But there are still some major unsolved problems. It is not known, for example, whether every finite group can occur as the Galois group of a polynomial with integer coefficients.

Group theory is diverse. Stewart makes the case that symmetry, as captured by group theory, is all-pervasive and that much of our physical reality has group theory as its underlying explanation. Because he focuses on the subject as it applies to relativity and string theory, Stewart misses an opportunity to explain this connection in those areas of physics that most of his readers are likely to understand.

In 1915, Emmy Noether proved that all conservation laws arise from a symmetry of the physical system. For example, conservation of momentum is a consequence of the fact that the laws of physics are the same everywhere. That is, Newton's laws are invariant under translation in space. Physics students use this principle every time they analyze a collision by switching to the center-of-mass reference frame. Another example is conservation of energy, which is a consequence of the fact that the laws of physics do not change from one moment to the next: Newton's laws are invariant under translation in time. Stewart gives Noether's theorem short shrift: one sentence on page 165.
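The link between translation symmetry and momentum can be seen in a small numerical experiment. This is a rough sketch under assumptions of my own, not a statement of Noether's theorem: two particles on a line joined by a spring, with invented masses and spring constants, stepped forward with a simple explicit scheme. When the force depends only on the separation of the particles, total momentum stays put; tying one particle to the origin (the hypothetical `anchor_stiffness` parameter) makes the physics depend on position, and the momentum drifts.

```python
def total_momentum_drift(anchor_stiffness, steps=1000, dt=1e-3):
    """Return |P_final - P_initial| for two particles joined by a spring.

    anchor_stiffness > 0 adds a spring tying particle 1 to the origin,
    which breaks invariance under translation. All constants are
    illustrative assumptions.
    """
    m1, m2 = 1.0, 2.0          # masses
    k, rest = 4.0, 1.0         # spring constant and rest length
    x1, x2 = 0.0, 1.5          # initial positions
    p1, p2 = 0.3, -0.1         # initial momenta
    start = p1 + p2
    for _ in range(steps):
        stretch = (x2 - x1) - rest
        f1 = k * stretch - anchor_stiffness * x1  # force on particle 1
        f2 = -k * stretch                         # equal and opposite spring force
        p1 += f1 * dt
        p2 += f2 * dt
        x1 += p1 / m1 * dt
        x2 += p2 / m2 * dt
    return abs((p1 + p2) - start)

print(total_momentum_drift(anchor_stiffness=0.0))  # rounding error only
print(total_momentum_drift(anchor_stiffness=1.0))  # clearly nonzero
```

With no anchor, shifting both starting positions by the same amount leaves every force unchanged, which is exactly the symmetry at work.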

Of course, modern physics provides wonderful examples of the power of symmetry. Special relativity is founded on the postulates that the laws of physics are the same in all inertial reference frames and the speed of light is the same for all observers. Both of those assumptions are symmetries in the mathematical sense of showing "invariance under an operation." Elementary particle physics and string theory rely on group theory more explicitly, with gauge groups such as SO(2) and SU(3). Stewart does not fully describe those groups in his book, although he does try to give a sense of the subject.

Why Beauty Is Truth is more about history than about mathematics, with a focus on the people who did the math. Stewart weaves in short biographies, which often begin with the mathematician's parents. We learn of their occupations and the career they planned for their child (usually not mathematics), and we also read about the mathematician's foibles and the quirks of his or her spouse. The various formulas, graphs and diagrams make it clear that this is a book about mathematics—not a math book. And that is exactly right: You won't really learn much math by reading it. It does, however, tell a reasonably entertaining story about the history of symmetry.
