Moonshot Computing

By Brian Hayes

Getting to the Moon required daring programmers as well as daring astronauts.


Fifty years ago, three astronauts and two digital computers took off for the Moon. A few days later, half a billion earthlings watched murky television images of Neil Armstrong and Buzz Aldrin clambering out of the Apollo 11 lunar module and leaving the first human bootprints in the powdery soil of the Sea of Tranquility. (Michael Collins, the command module pilot, remained in lunar orbit.) The astronauts became instant celebrities. The computers that helped guide and control the spacecraft earned fame only in a smaller community of technophiles. Yet Armstrong’s small step for a man also marked a giant leap for digital computing technology.


Looking back from the 21st century, when everything is computer controlled, it’s hard to appreciate the audacity of NASA’s decision to put a computer aboard the Apollo spacecraft. Computers then were bulky, balky, and power hungry. The Apollo Guidance Computer (AGC) had to fit in a compartment smaller than a carry-on bag and could draw no more power than a light bulb. And it had to be utterly reliable; a malfunction could put lives in jeopardy.

Although the AGC is not as famous as the astronauts, its role in the Apollo project has been thoroughly documented. At least five books tell the story, and there is more information on the web. Among all the available resources, one trove of historical documents offers a particularly direct and intimate look inside this novel computer. Working from rare surviving printouts, volunteer enthusiasts have transcribed several versions of the AGC software and published them online. You can read through the programs that guided Apollo 11 to its lunar touchdown. You can even run those programs on a “virtual AGC.”

Admittedly, long lists of machine instructions, written in an esoteric and antiquated programming language, do not make easy reading. Deciphering even small fragments of the programs can be quite an arduous task. The reward is seeing firsthand how the designers worked through some tricky problems that even today remain a challenge in software engineering. Furthermore, although the documents are technical, they have a powerful human resonance, offering glimpses of the cultural milieu of a high-profile, high-risk, high-stress engineering project.

Each Apollo mission to the Moon carried two AGCs, one in the command module and the other in the lunar module. In their hardware the two machines were nearly identical; software tailored them to their distinctive functions.

For a taste of what the computers were asked to accomplish, consider the workload of the lunar module’s AGC during a critical phase of the flight—the powered descent to the Moon’s surface. The first task was navigation: measuring the craft’s position, velocity, and orientation, then plotting a trajectory to the target landing site. Data came from the gyroscopes and accelerometers of an inertial guidance system, supplemented in the later stages of the descent by readings from a radar altimeter that bounced signals off the Moon’s surface.

After calculating the desired trajectory, the AGC had to swivel the nozzle of the rocket engine to keep the capsule on course. At the same time it had to adjust the magnitude of the thrust to maintain the proper descent velocity. These guidance and control tasks were particularly challenging because the module’s mass and center of gravity changed as fuel was consumed and because a spacecraft sitting atop a plume of rocket exhaust is fundamentally unstable—like a broomstick balanced upright on the palm of your hand.

Along with the primary tasks of navigation, guidance, and control, the AGC also had to update instrument displays in the cockpit, respond to commands from the astronauts, and manage data communications with ground stations. Such multitasking is routine in computer systems today. Your laptop runs dozens of programs at once. In the early 1960s, however, the tools and techniques for creating an interactive, “real-time” computing environment were in a primitive state.

The AGC was created at the Instrumentation Laboratory of the Massachusetts Institute of Technology (MIT), founded by Charles Stark Draper, a pioneer of inertial guidance. Although the Draper lab had designed digital electronics for ballistic missiles, the AGC was its first fully programmable digital computer.

For the hardware engineers, the challenge was to build a machine of adequate performance while staying within a tight budget for weight, volume, and power consumption. They adopted a novel technology: the silicon integrated circuit. Each lunar-mission computer had some 2,800 silicon chips, with six transistors per chip.

For memory, the designers turned to magnetic cores—tiny ferrite toroids that can be magnetized in either of two directions to represent a binary 1 or 0. Most of the information to be stored consisted of programs that would never be changed during a mission, so many of the cores were wired in a read-only configuration, with the memory’s content fixed at the time of manufacture.

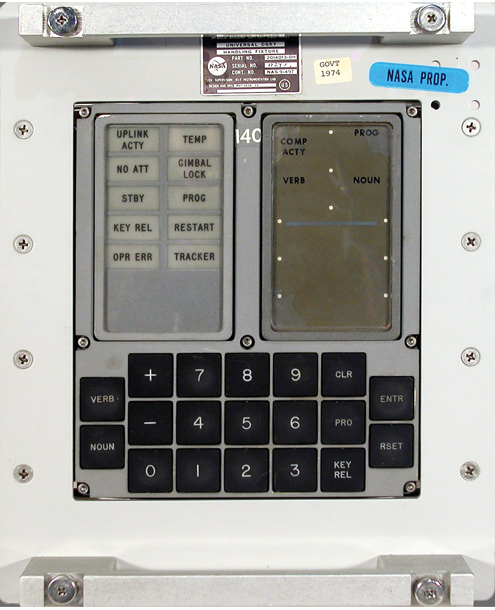

The logic circuits and memory cores were sealed in a metal case tucked away in an equipment bay. The astronauts interacted with the computer through a device called the DSKY (short for “display keyboard” and pronounced dis-key), which looked something like the control panel of a microwave oven. It had a numeric keypad, several other buttons, and room to display 21 bright green decimal digits.

A critical early decision in the design of the computer was setting the number of bits making up a single “word” of information. Wider words allow more varied program instructions and greater mathematical precision, but they also require more space, weight, and power. The AGC designers chose a width of 16 bits, with one bit dedicated to error checking, so only 15 bits were available to represent data, addresses, or instructions. (Modern computers have 32- or 64-bit words.)
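The error-checking bit is a parity bit. The sketch below assumes odd parity: the extra bit is chosen so that every stored 16-bit word has an odd number of 1s. Where the parity bit sits within the word is an arbitrary choice made here, and the function names are hypothetical.

```python
def parity_bit(data15: int) -> int:
    """Return the bit that makes the total count of 1s in the 16-bit word odd."""
    if not 0 <= data15 < 1 << 15:
        raise ValueError("data must fit in 15 bits")
    return 0 if bin(data15).count("1") % 2 == 1 else 1


def make_word(data15: int) -> int:
    """Append the parity bit (placed in the low-order position, an arbitrary choice)."""
    return (data15 << 1) | parity_bit(data15)


def check_word(word16: int) -> bool:
    """Accept a stored word only if its count of 1 bits is odd."""
    return bin(word16).count("1") % 2 == 1


word = make_word(0o12345)
print(check_word(word))            # True
print(check_word(word ^ 0b100))    # False: one flipped bit is caught
print(check_word(word ^ 0b110))    # True: two flipped bits slip through
```

A single parity bit is cheap, but it can only detect errors. It cannot say which bit went bad, and an even number of flipped bits goes unnoticed.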

A 15-bit word can accommodate $2^{15} = 32{,}768$ distinct bit patterns. In the case of numeric data, the AGC generally interpreted these patterns as numbers in the range ±16,383 (one bit holds the sign, leaving 14 bits of magnitude). Grouping together two words produced a double-precision number with 28 bits of magnitude, in the range ±268,435,455.
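These ranges can be checked with a short sketch. It assumes the ones'-complement convention for single-precision numbers: a negative number is the bitwise complement of its magnitude, so there is both a +0 and a −0. It also assumes that a double-precision value is formed as high × 2¹⁴ + low, with each half carrying its own sign. The helper names are hypothetical.

```python
WORD_BITS = 15
MASK = (1 << WORD_BITS) - 1              # 0o77777, all 15 bits set
SIGN = 1 << (WORD_BITS - 1)              # the sign bit
MAX_SINGLE = SIGN - 1                    # largest magnitude: 16,383


def to_ones(n: int) -> int:
    """Encode n as a 15-bit ones'-complement word."""
    if abs(n) > MAX_SINGLE:
        raise OverflowError(f"{n} does not fit in one word")
    return n if n >= 0 else (~(-n) & MASK)


def from_ones(w: int) -> int:
    """Decode a 15-bit word; 0o00000 (+0) and 0o77777 (-0) both mean zero."""
    return -(~w & MASK) if w & SIGN else w


def double_value(high: int, low: int) -> int:
    """Value of a double-precision pair: high * 2**14 + low, each half signed."""
    return from_ones(high) * (1 << 14) + from_ones(low)


print(1 << WORD_BITS)                                    # 32768 bit patterns
print(MAX_SINGLE)                                        # 16383
print(double_value(to_ones(16383), to_ones(16383)))      # 268435455
print(double_value(to_ones(-16383), to_ones(-16383)))    # -268435455
```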

A word could also represent an instruction in a program. In the original plan for the AGC, the first three bits of an instruction word specified an “opcode,” or command; the remaining 12 bits held an address in the computer’s memory. Depending on the context, the address might point to data needed in a calculation or to the location of the next instruction to be executed.

Allocating just three bits to the opcode meant there could be only eight distinct commands (the eight binary patterns between 000 and 111). The 12-bit addresses limited the number of memory words to 4,096 (or $2^{12}$). As the Apollo mission evolved, these constraints began to pinch, and engineers found ways to evade them. They organized the memory into multiple banks; an address specified position within a bank, and separate registers indicated which bank was active. The designers also scrounged a few extra bits to expand the set of opcodes from 8 to 34.
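The original word layout, and the bank trick used to escape its limits, can be sketched in a simplified model. The flight hardware's real addressing had special cases for fixed, erasable, and switchable regions, and none of them is reproduced here. The 1,024-word bank size is chosen so that 36 banks give the 36,864 words of read-only memory mentioned below. The flat `bank × 1,024 + offset` mapping and the function names are assumptions made for illustration.

```python
OPCODE_BITS = 3
ADDRESS_BITS = 12
BANK_SIZE = 1024  # words per switchable bank (assumed for this sketch)


def decode(word: int) -> tuple[int, int]:
    """Split a 15-bit instruction into a 3-bit opcode and a 12-bit address."""
    opcode = (word >> ADDRESS_BITS) & ((1 << OPCODE_BITS) - 1)
    address = word & ((1 << ADDRESS_BITS) - 1)
    return opcode, address


def physical_address(bank_register: int, offset: int) -> int:
    """Hypothetical flat mapping: the bank register picks a 1,024-word bank,
    and the address field picks a word within it."""
    if not 0 <= offset < BANK_SIZE:
        raise ValueError("offset outside bank")
    return bank_register * BANK_SIZE + offset


print(1 << OPCODE_BITS)             # 8 possible opcodes
print(1 << ADDRESS_BITS)            # 4096 directly addressable words
print(decode(0o60123))              # (6, 83)
print(physical_address(35, 1023))   # 36863, the last word of 36 banks
```

Octal is the natural notation here: each octal digit is exactly three bits, so the leading digit of a word is the opcode.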

The version of the AGC that went to the Moon had 36,864 words of read-only memory for storing programs and 2,048 words of read-write memory for ongoing computations. The total is equivalent to about 70 kilobytes. A modern laptop has 100,000 times as much memory. As for speed, the AGC could execute about 40,000 instructions per second; a laptop might do 10 billion.
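The "about 70 kilobytes" figure follows from the word counts. The exact total depends on whether the parity bit is counted. The speed comparison is a simple ratio:

```latex
\begin{aligned}
36{,}864 + 2{,}048 &= 38{,}912\ \text{words}\\
38{,}912 \times 16\ \text{bits} \div 8 &= 77{,}824\ \text{bytes} \approx 76\ \text{KiB}\quad\text{(parity bit included)}\\
38{,}912 \times 15\ \text{bits} \div 8 &= 72{,}960\ \text{bytes} \approx 71\ \text{KiB}\quad\text{(data bits only)}\\
\frac{10^{10}\ \text{instructions/s}}{4 \times 10^{4}\ \text{instructions/s}} &= 2.5 \times 10^{5}
\end{aligned}
```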

A no-frills architecture, puny memory, and a minimalist instruction set presented a challenge to the programmers. Moreover, the software team at MIT had to create not only the programs that would run during the mission but also a great deal of infrastructure to support the development process.

One vital tool was an assembler, a program that converts symbolic instructions (such as AD for add and TC for transfer control) into the binary codes recognized by the AGC hardware. The assembler’s primary author was Hugh Blair-Smith, an engineer with extensive background in programming the large computers of that era. The assembler ran on such a mainframe machine, not on the AGC itself. All of the flight-control programs were assembled and committed to read-only memory long before launch, so there was no need to have an assembler on the spacecraft.
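What an assembler does can be illustrated with a toy two-pass version. The first pass records the address of every label, and the second translates mnemonics and resolves operands. This is not Blair-Smith's assembler or its input format. The opcode table is hypothetical: TC and AD come from the text, but CA, CS, MASK and all of the numeric codes are assumptions chosen to fit the original three-bit plan.

```python
# Hypothetical opcode table for illustration only.
OPCODES = {"TC": 0, "CA": 3, "CS": 4, "AD": 6, "MASK": 7}


def assemble(source: str) -> list[int]:
    """Toy two-pass assembler producing 15-bit words (3-bit opcode, 12-bit address).

    Each line is 'LABEL OP OPERAND' or 'OP OPERAND'; an operand is either a
    label defined somewhere in the program or an octal number.
    """
    lines = [line.split() for line in source.strip().splitlines() if line.strip()]

    # Pass 1: note the address of every label.
    labels, statements = {}, []
    for address, fields in enumerate(lines):
        if fields[0] not in OPCODES:
            labels[fields[0]] = address
            fields = fields[1:]
        statements.append(fields)

    # Pass 2: translate each mnemonic and resolve each operand.
    words = []
    for op, operand in statements:
        target = labels[operand] if operand in labels else int(operand, 8)
        words.append((OPCODES[op] << 12) | (target & 0o7777))
    return words


src = """
LOOP  CA  0100
      AD  0101
      TC  LOOP
"""
print([oct(w) for w in assemble(src)])   # ['0o30100', '0o60101', '0o0']
```

The two passes are needed because a label can be used before it is defined, as in a forward jump.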

A digital simulation of the AGC also ran on a mainframe computer. It allowed programs to be tested before the AGC hardware was ready. Later a “hybrid” simulator incorporated a real AGC and DSKY, as well as both analog and digital models of the rest of the spacecraft and its environment.

Another tool was an interpreter for a higher-level programming language, designed by J. Halcombe Laning and written mainly by Charles A. Muntz, both on the MIT team. The interpreted language provided access to mathematical concepts beyond basic arithmetic, such as matrices (useful in expressing control laws) and trigonometric functions (essential in navigation). The price paid for these conveniences was a tenfold slowdown. Interpreted commands and assembly language could be freely mixed, however, so the programmer could trade speed for mathematical versatility as needed.

An AGC program called the Executive served as a miniature operating system. Also designed by Laning, it maintained a list of programs waiting their turn to execute, sorted according to their priority. The computer also had a system of interrupts, allowing it to respond to external events. And a few small but urgent tasks were allowed to “steal” a memory cycle without other programs even taking notice. This facility was used to count streams of pulses from the inertial guidance system and from radars.
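The Executive's central rule, that the highest-priority waiting job always runs next, can be modeled with a priority queue. This minimal sketch uses Python's `heapq`, with invented job names and priorities. It leaves out everything else the real Executive did: suspending jobs, managing their working memory, responding to interrupts.

```python
import heapq
import itertools
from typing import Optional


class Executive:
    """Toy scheduler that always dispatches the highest-priority waiting job."""

    def __init__(self) -> None:
        self._waiting = []                    # heap entries: (-priority, arrival, name)
        self._arrivals = itertools.count()    # breaks ties first come, first served

    def add_job(self, name: str, priority: int) -> None:
        heapq.heappush(self._waiting, (-priority, next(self._arrivals), name))

    def run_next(self) -> Optional[str]:
        """Remove and return the job that should run now, or None if idle."""
        if not self._waiting:
            return None
        return heapq.heappop(self._waiting)[2]


ex = Executive()
ex.add_job("UPDATE_DISPLAY", 10)   # hypothetical job names and priorities
ex.add_job("NAVIGATE", 30)
ex.add_job("TELEMETRY", 10)
print([ex.run_next() for _ in range(4)])
# ['NAVIGATE', 'UPDATE_DISPLAY', 'TELEMETRY', None]
```

Nothing in this sketch stops a steady stream of high-priority jobs from starving the low-priority ones indefinitely.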

When I first tried reading some AGC programs, I found them inscrutable. It wasn’t just the terse, opaque opcodes. The greater challenge was learning to follow the narrative thread of a program with its many detours and digressions. Instructions such as TC and BZF create branch points, where the path through the sequence of instructions abruptly jumps to some other location, and may or may not return to where it came from. Following the trail can feel like playing Chutes and Ladders.

The learning curve for the AGC assembly language is steep but not very tall, simply because there are so few opcodes. To make sense of the programs, however, you also need to master the conventions and protocols devised by the MIT team to get the most out of this strange little machine. The fragment of source code reproduced on the opposite page provides some examples of these unwritten rules.

I was particularly confused by the scheme for invoking a subroutine—a block of code that can be called from various places in a program and then returns control to the point where it was called. In the AGC the opcode for calling a subroutine is TC, which not only transfers control to the address of the subroutine but also saves the address of the word following the TC instruction, stashing it in a place called the Q register. When the subroutine finishes its work, it can return to the main program simply by executing the instruction TC Q. This much I understood. But it turns out that a subroutine can alter the content of the Q register and thereby change its own return destination. Many AGC programs take advantage of this facility. In the snippet on the opposite page, one subroutine has three return addresses, one for each of three possible responses to a query. Until I figured this out, the code was incomprehensible.
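The multiple-return trick can be modeled with a tiny simulated machine. In the program below, a call is followed by three return slots, and the subroutine adds the astronaut's answer (0, 1, or 2) to Q before returning, which selects one of the slots. Everything here is hypothetical and mirrors the technique, not the Apollo listings: the tuple encoding, the ASK and ADDQ operations, the addresses, and the outcome names. TCF stands for a plain jump that leaves Q alone.

```python
def run(program: dict, start: int, answer: int, max_steps: int = 100) -> str:
    """Execute a toy program with a single return-address register Q."""
    pc, q, a = start, None, 0
    for _ in range(max_steps):
        op, *args = program[pc]
        if op == "TC":            # call: remember the next address in Q, then jump
            q, pc = pc + 1, args[0]
        elif op == "TCF":         # plain jump; Q untouched
            pc = args[0]
        elif op == "ASK":         # hypothetical input: load the answer into A
            a, pc = answer, pc + 1
        elif op == "ADDQ":        # the trick: shift the return address by A
            q, pc = q + a, pc + 1
        elif op == "RETURN":      # equivalent of TC Q
            pc = q
        elif op == "HALT":
            return args[0]
    raise RuntimeError("step limit exceeded")


PROGRAM = {
    100: ("TC", 200),        # call QUERY; Q now holds 101
    101: ("TCF", 300),       # return slot 0
    102: ("TCF", 301),       # return slot 1
    103: ("TCF", 302),       # return slot 2
    200: ("ASK",),           # QUERY subroutine starts here
    201: ("ADDQ",),
    202: ("RETURN",),
    300: ("HALT", "YES"),
    301: ("HALT", "NO"),
    302: ("HALT", "MAYBE"),
}
print([run(PROGRAM, 100, answer) for answer in (0, 1, 2)])   # ['YES', 'NO', 'MAYBE']
```

Reading address 100 alone, you would never guess that control might come back to 102 or 103. That is what makes such code hard to follow.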

Current norms of software engineering discourage such tricks, because they make code harder to understand and maintain. But software conforming to current standards would not fit in 70 kilobytes of memory.

In contrast to the cryptic opcodes and addresses, another part of the AGC software is much easier to follow. The comments that accompany the code are lucid and even amusing. These annotations were added by the programmers as they created the software. They were meant entirely for human consumption, not for the machine.

Most of the comments are straightforward explanations of what the program does. “Clear bits 7 and 14.” “See if Alt < 35000 ft last cycle.” A few gruff warnings mark code that should not be meddled with. One line is flagged “Don’t Move,” and a table of constants has the imperious heading “Noli Se Tangere” (biblically inspired Latin for “Do Not Touch”). The style of the comments varies from one program to another, presumably reflecting differences in authorship.

Most intriguing are the messages that venture beyond the impersonal, emotionless manner of technical documentation. A nervously apologetic programmer flags two lines of code as “Temporary, I hope hope hope.” A constant is introduced as “Numero mysterioso.” An out-of-memory condition provokes the remark “No room in the inn.” In a few places the tone of voice becomes positively breezy. The passage shown on the opposite page has the following request: “Astronaut: Please crank the silly thing around.” As the program checks to see if the astronaut complied, a comment reads, “See if he’s lying.” One can’t help wondering: Did the astronauts ever delve into the source code? Some of them, most notably Buzz Aldrin, were frequent visitors to the Instrumentation Lab.

Hints of whimsy also turn up in names chosen for subroutines and labels. A section of the software concerned with alarms and failures includes the symbols WHIMPER, BAILOUT, P00DOO, and CURTAINS. Elsewhere we encounter KLEENEX, ERASER, and ENEMA. There are a few Peanuts comic strip references, such as the definition LINUS EQUALS BLANKET. The program that ignites the rocket motor for descent to the Moon is titled BURNBABY, an apparent reference to the slogan “Burn, baby, burn!,” which was associated with the 1965 Watts riots in Los Angeles.

Perhaps I should not be surprised to find these signs of levity and irreverence in the source code. The programmers were mostly very young and clearly very smart; they formed a close-knit group where inside jokes were sure to evolve, no matter how solemn the task. Also, they were working at MIT, where “hacker culture” has a long tradition of tomfoolery. On the other hand, the project was supervised by NASA, and every iteration of the software had to be reviewed and approved at various levels of the federal bureaucracy. The surprise, then, is not that wisecracks were embedded in the programs but that they were not expunged by some humorless functionary.

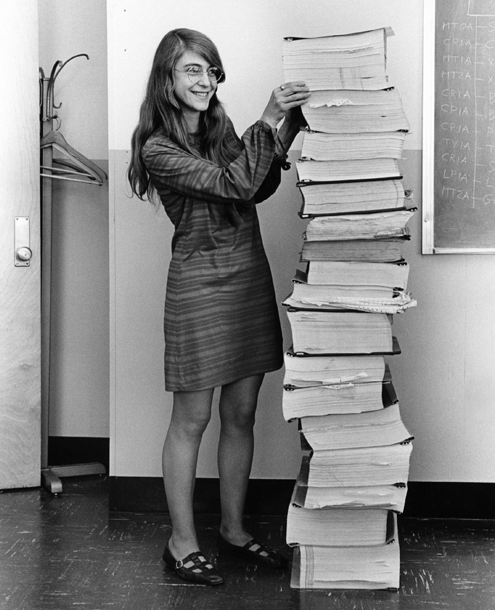

In an email exchange, I asked Margaret H. Hamilton about this issue. A mathematician turned programmer who worked on several other MIT projects before joining the AGC group in 1963, Hamilton later became the lab’s director of software engineering (a term she coined). “People were serious about their work,” she wrote, “but at the same time they had fun with various aspects of comic relief, including things like giving parts of the onboard flight software funny or mysterious names.” She also conceded that NASA vetoed a few of their cheeky inventions.

Hamilton has said that the Apollo project offered “the opportunity to make just about every kind of error humanly possible.” It’s not hard to come up with a long list of things that might have gone wrong but didn’t.

For example, the AGC had two formats for representing signed numbers: ones' complement and twos' complement. Mixing them up would have led to a numerical error. Similarly, spacecraft position and velocity were calculated in metric units but displayed to the astronauts in feet or feet per second. A neglected conversion (or a double conversion) could have caused much mischief. Another ever-present hazard was arithmetic overflow: A number that exceeded the maximum positive value for a 14-bit quantity would “wrap around” to a negative value.
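All three hazards can be demonstrated on raw numbers. In this sketch the overflow is plain modular wraparound of a 15-bit pattern, a simplification that does not model the AGC's own overflow handling. The 1,000-meter altitude is an arbitrary example value, and the helper names are hypothetical.

```python
BITS = 15
MASK = (1 << BITS) - 1
SIGN = 1 << (BITS - 1)


def as_ones(word: int) -> int:
    """Read a 15-bit pattern as a ones'-complement number."""
    return -(~word & MASK) if word & SIGN else word


def as_twos(word: int) -> int:
    """Read the same pattern as a twos'-complement number."""
    return word - (1 << BITS) if word & SIGN else word


pattern = 0o77776
print(as_ones(pattern), as_twos(pattern))                  # -1 -2: same bits, two values

# Overflow: one more than the largest 14-bit magnitude lands on the sign bit.
wrapped = (16383 + 1) & MASK
print(oct(wrapped), as_ones(wrapped), as_twos(wrapped))    # 0o40000 -16383 -16384

# Units: converting meters to feet twice inflates the number by another 3.28x.
FEET_PER_METER = 1 / 0.3048
altitude_m = 1000.0
print(round(altitude_m * FEET_PER_METER))                     # 3281
print(round(altitude_m * FEET_PER_METER * FEET_PER_METER))    # 10764
```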

You might suppose that such blunders would never slip through the rigorous vetting process for a space mission, but history says otherwise. In 1996 an overflow error destroyed an Ariane 5 rocket and its payload of four satellites. In 1999 an error in units of measure—pounds that should have been newtons—led to the loss of the Mars Climate Orbiter.

The cramped quarters of the AGC must have added to the programmers’ cognitive burden. The handling of subroutines again provides an illustration. In larger computers, a data structure called a stack automatically keeps track of return addresses for subroutines, even when the routines are deeply nested, with one calling another, which then calls a third, and so on. The AGC had no stack for return addresses; it had only the Q register, with room for a single address. Whenever a subroutine called another subroutine, the programmer had to find a safe place to keep the return address, then restore it afterward. Any mishap in this process would leave the program lost in space.
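The bookkeeping burden shows up even in a toy model with one Q register and no stack. The `Machine` class, the `SAVEQ` scratch word, and the routine names are invented for illustration. Return addresses are plain strings here, and in real AGC code the programmer would copy Q to an erasable memory word with ordinary instructions.

```python
class Machine:
    """Toy CPU with a single return-address register and no stack."""

    def __init__(self) -> None:
        self.q = None
        self.erasable = {}      # scratch memory words, keyed by hypothetical names
        self.trace = []

    def call(self, return_to: str, routine) -> None:
        """Like TC: overwrite Q with the return address, then run the routine."""
        self.q = return_to
        routine(self)


def inner(m: Machine) -> None:
    m.trace.append("inner returns to " + m.q)


def outer_careless(m: Machine) -> None:
    m.call("outer+2", inner)              # clobbers Q, which held the caller's address
    m.trace.append("outer returns to " + m.q)


def outer_careful(m: Machine) -> None:
    m.erasable["SAVEQ"] = m.q             # save Q before the nested call
    m.call("outer+2", inner)
    m.q = m.erasable["SAVEQ"]             # restore it afterward
    m.trace.append("outer returns to " + m.q)


for outer in (outer_careless, outer_careful):
    m = Machine()
    m.call("main+1", outer)
    print(m.trace[-1])
# outer returns to outer+2   (wrong: control would loop back into outer)
# outer returns to main+1
```

Every level of nesting needs its own save slot, and the programmer, not the hardware, must keep them from colliding.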

When I imagine myself as an outsider writing programs for a machine like this one, the area where I would most fear mistakes is the multitasking mechanism. When multiple jobs needed to be accomplished, the Executive always ran the one with the highest assigned priority. But it also had to ensure that all jobs would eventually get their turn. Those goals are hard to reconcile.

Interrupts were even more insidious. An event in the outside world (such as an astronaut pressing keys on the DSKY) could suspend an ongoing computation at nearly any moment and seize control of the processor. The interrupting routine had to save and later restore the contents of any registers it might disturb, like a burglar who breaks into a house, cooks a meal, and then puts everything back in its place to evade detection.

Some processes must not be interrupted, even with the save-and-restore protocol (for example, the Executive’s routine for switching between jobs). The AGC therefore provided a command to disable interrupts, and another to re-enable them. But this facility created perils of its own: If interrupts were blocked for too long, important events could go unheeded.
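The burglar's discipline and the inhibit switch can be sketched together. In this hypothetical model the registers are a dict, interrupts are Python functions queued and run between steps, and the method names borrow the AGC's INHINT and RELINT mnemonics. The keypress handler and its key code are invented.

```python
class CPU:
    """Toy processor: interrupts are routines queued and run between instructions."""

    def __init__(self) -> None:
        self.regs = {"A": 0, "L": 0, "Q": 0}
        self.key_buffer = []          # where the keypress handler leaves its result
        self.interrupts_enabled = True
        self.pending = []             # interrupt routines waiting to run

    def inhint(self) -> None:         # named after the AGC's INHINT instruction
        self.interrupts_enabled = False

    def relint(self) -> None:         # named after RELINT; runs anything deferred
        self.interrupts_enabled = True
        self._service()

    def raise_interrupt(self, routine) -> None:
        self.pending.append(routine)
        self._service()

    def _service(self) -> None:
        while self.interrupts_enabled and self.pending:
            routine = self.pending.pop(0)
            saved = dict(self.regs)           # the burglar notes where everything was
            self.interrupts_enabled = False   # no nested interrupts in this sketch
            routine(self)
            self.regs = saved                 # ...and puts it all back
            self.interrupts_enabled = True


def keypress(cpu: CPU) -> None:
    """Hypothetical DSKY handler: uses register A as scratch, stores result in memory."""
    cpu.regs["A"] = 21
    cpu.key_buffer.append(cpu.regs["A"])


cpu = CPU()
cpu.regs["A"] = 1234                 # the main program is mid-calculation
cpu.raise_interrupt(keypress)
print(cpu.regs["A"], cpu.key_buffer)          # 1234 [21]

cpu.inhint()                         # e.g. while the Executive switches jobs
cpu.raise_interrupt(keypress)        # deferred, not yet handled
print(len(cpu.pending), cpu.key_buffer)       # 1 [21]
cpu.relint()
print(len(cpu.pending), cpu.key_buffer)       # 0 [21, 21]
```

In this model a deferred interrupt waits politely in a queue. The longer interrupts stay inhibited, the longer that event goes unheeded.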

The proper handling of interrupts and multitasking remains an intellectual challenge today. These mechanisms introduce a measure of random or nondeterministic behavior: Knowing the present state of the system is not enough to predict the future state. They make it hard to reason about a program or to test all possible paths through it. The most annoying, intermittent, hard-to-reproduce bugs can often be traced back to some unanticipated clash between competing processes.
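The nondeterminism can be made concrete by enumerating every possible interleaving of two jobs. Each job increments a shared counter in three separate steps: load, add, store. This is a generic lost-update race, not code from the AGC, and the job structure is an assumption chosen to keep the example small.

```python
from itertools import combinations


def run_interleaving(order: list[int]) -> int:
    """Run two jobs that each do load, add 1, store on a shared counter.

    order lists which job (0 or 1) takes its next step; it must contain each id
    exactly three times.
    """
    shared = 0
    register = [0, 0]          # each job's private working register
    step = [0, 0]              # how far each job has progressed
    for job in order:
        if step[job] == 0:
            register[job] = shared        # load
        elif step[job] == 1:
            register[job] += 1            # add
        else:
            shared = register[job]        # store
        step[job] += 1
    return shared


def all_interleavings(steps: int = 3):
    """Yield every merge of two jobs' steps that keeps each job's own order."""
    total = 2 * steps
    for slots in combinations(range(total), steps):
        order = [1] * total
        for s in slots:
            order[s] = 0
        yield order


results = [run_interleaving(order) for order in all_interleavings()]
print(len(results), results.count(2), results.count(1))   # 20 2 18
```

Only 2 of the 20 orderings produce the intended total of 2. A test suite that happened to exercise only those two would never see the bug.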

None of the AGCs ever failed in space, but there were moments of unwelcome excitement. As the Apollo 11 lander descended toward the lunar surface, the DSKY display suddenly announced a “program alarm” with a code number of 1202. Armstrong and Aldrin didn’t know whether to keep going or to abort the landing. At Mission Control in Houston the decision fell to the guidance officer, Steve Bales, who had a cheat sheet of alarm codes and access to backroom experts from both NASA and MIT. He chose “Go.” He made the same decision following each of four further alarms in the remaining minutes before touchdown.

Back at MIT, members of the AGC team were listening to this exchange and scrambling to confirm what a 1202 alarm meant and what might have caused it. The explanatory comment at the appropriate line of the program listing reads “No more core sets.” Every time the Executive launched a new job, it allocated 11 words of read-write memory for the exclusive use of the new process. The area set aside for such core sets had room for just eight of them. If the Executive was ever asked to supply more than eight core sets, it was programmed to signal a 1202 alarm and jump to a routine named BAILOUT.


During the lunar descent, there were never more than eight jobs eligible to run, so how could they demand more than eight core sets? One of those jobs was a big one: SERVICER did all the computations for navigation, guidance, and control. It was scheduled to run every two seconds and was expected to finish its work within that period, then shut down and surrender its core set. When the two-second interval was up, a new SERVICER process would be launched with a new core set. But for some reason the computation was taking longer than it should have. One instance of SERVICER was still running when the next one was launched, forming a backlog of unfinished jobs, all hanging on to core sets.
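The backlog mechanism can be reproduced in a toy simulation, under some loud assumptions. Each 2-second cycle offers 100 units of CPU work. A SERVICER job needs 90 units (echoing the 10 percent timing margin described below), and radar pulses may cut the available capacity to 85. Three other jobs are assumed to hold core sets throughout, and the pool holds eight. None of these numbers is a measured flight value.

```python
from typing import Optional


def simulate(work_per_job: int, capacity_per_cycle: int, other_jobs: int = 3,
             core_sets: int = 8, cycles: int = 200) -> Optional[int]:
    """Return the cycle at which launching SERVICER finds no free core set, or None.

    Each 2-second cycle launches one new SERVICER, which takes a core set. The CPU
    then spends capacity_per_cycle units of work on unfinished SERVICERs, oldest
    first; a SERVICER releases its core set only when its work is done.
    """
    unfinished = []                      # remaining work of each SERVICER still running
    for cycle in range(cycles):
        if other_jobs + len(unfinished) + 1 > core_sets:
            return cycle                 # "No more core sets": alarm 1202
        unfinished.append(work_per_job)
        budget = capacity_per_cycle
        while unfinished and budget > 0:
            done = min(budget, unfinished[0])
            unfinished[0] -= done
            budget -= done
            if unfinished[0] == 0:
                unfinished.pop(0)        # finished: core set released
    return None


print(simulate(90, 100))   # None: the margin absorbs the load
print(simulate(90, 85))    # 73: a small steady deficit eventually exhausts the pool
```

The particular cycle count means nothing. What matters is the shape of the failure: a small deficit accumulates silently until a fixed resource runs out all at once.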

The cause of this behavior was not a total mystery. It had been seen in test runs of the flight hardware. Two out-of-sync power supplies were driving a radar to emit a torrent of spurious pulses, which the AGC dutifully counted. Each pulse consumed one computer memory cycle, lasting about 12 microseconds. The radar could spew out 12,800 pulses per second, enough to eat up 15 percent of the computer’s capacity. The designers had allowed a 10 percent timing margin.
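The 15 percent figure is straightforward arithmetic on the numbers above, and it exceeds the margin by about 5 percentage points:

```latex
12{,}800\ \tfrac{\text{pulses}}{\text{s}} \times 12\ \mu\text{s per pulse}
  = 0.1536\ \text{s of every second} \approx 15\%
  \;>\; 10\%\ \text{margin}
```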

Much has been written about the causes of this anomaly, with differing opinions on who was to blame and how it could have been avoided. I am more interested in how the computer reacted to it. In many computer systems, exhausting a critical resource is a fatal error. The screen goes blank, the keyboard is dead, and the only thing still working is the power button. The AGC reacted differently. It did its best to cope with the situation and keep running. After each alarm, the BAILOUT routine purged all the jobs running under the Executive, then restarted the most critical ones. The process was much like rebooting a computer, but it took only milliseconds.
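The recovery strategy, purging everything and restarting only what matters, can be sketched in a few lines. This is a hypothetical model and a deliberate simplification of how the AGC's restart machinery actually decided what to relaunch. The `Job` record, the `critical` flag, the job names and priorities, and the one-fresh-instance-per-name rule are all inventions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int
    critical: bool       # relaunch after a BAILOUT? (hypothetical flag)


def bailout(active_jobs: list[Job], core_sets: int = 8) -> list[Job]:
    """Purge all jobs, then relaunch one fresh instance of each critical job,
    highest priority first, up to the number of core sets."""
    fresh = {}
    for job in sorted(active_jobs, key=lambda j: j.priority, reverse=True):
        if job.critical and job.name not in fresh:
            fresh[job.name] = Job(job.name, job.priority, True)  # starts over from scratch
    return list(fresh.values())[:core_sets]


backlog = [Job("SERVICER", 20, True) for _ in range(5)] + [
    Job("DISPLAY_UPDATE", 10, True),     # hypothetical names and priorities
    Job("EXTRA_MONITOR", 5, False),
    Job("EXTRA_MONITOR", 5, False),
]
print([j.name for j in bailout(backlog)])   # ['SERVICER', 'DISPLAY_UPDATE']
```

In this sketch five stale SERVICER instances collapse into one fresh one. The backlog disappears, and the navigation cycle simply starts over.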

Annoying alerts that pop up on the computer screen are now commonplace, but Hamilton points out they were a novelty in the 1960s. The program alarms appearing on the DSKY display were made possible by the priority-driven multitasking at the heart of the AGC software. The alarms took that idea a step further: They had the temerity to interrupt not just other computations but even, when necessary, the astronauts themselves.

Some of the veterans of the AGC project get together for lunch once a month. That they still do so 50 years after the Moon landings suggests how important the Apollo program was in their lives. (It also suggests how young they were at the time.) In 2017 I had an opportunity to attend one of these reunions. I found myself asking the same two questions of everyone I met. First, in that minefield of mistakes-waiting-to-happen, how did you manage to build something that worked so well and so reliably? Second, weren’t you scared witless?

In reply to the latter question, one person at the table described the development of the AGC as “a white-knuckle job.” But others reported they were just too focused on solving technical problems to brood over the consequences of possible mistakes. Blair-Smith pointed out that the informal motto of the group was “We Can Do This,” and it wasn’t just bravado. They had genuine confidence in their ability to get it right.

The question of exactly how they got it right elicited lively discussion, but nothing came of it that I could neatly encapsulate as the secret of their success. They were very careful; they worked very hard; they tested very thoroughly. All this was doubtless true, but many other software projects with talented and diligent workers have run into trouble nonetheless. What makes the difference?

Recalling the episode of the 1202 alarms, I asked if the key might be to seek resilience rather than perfection. If they could not prevent all mistakes, they might at least mitigate their harm. This suggestion was rejected outright. Their aim was always to produce a flawless product.

I asked Hamilton similar questions via email, and she too mentioned a “never-ending focus on making everything as perfect as possible.” She also cited the system of interrupts and priority-based multitasking, which I had been seeing as a potential trouble spot, as ensuring “the flexibility to detect anything unexpected and recover from it in real time.”

In my mind, how they did it remains an open question—and one deserving of scholarly attention. Engineering tradition calls for careful forensic analysis of accidents and failures, but perhaps it would also make sense to investigate the occasional outstanding success.

The Smithsonian Institution’s Air and Space Museum holds some 3,500 artifacts from the Apollo program, but the AGC software is not on exhibit there. A few smaller museums have helped preserve printouts, but the programs are widely available today almost entirely through the efforts of amateur enthusiasts.

In 2003 Ronald Burkey was watching the film Apollo 13, about the mission imperiled by an explosion en route to the Moon. The DSKY appeared in several scenes, and Burkey, who works in embedded computer systems, set out to learn more about the AGC. Casual inquiries gradually transformed into a dogged pursuit of original documents. His aim was to create a simulator that would execute AGC programs.

Burkey learned that the Instrumentation Laboratory had deposited listings of some Apollo 11 software with the MIT Museum, but the terms of the donation did not allow them to be freely distributed. After long negotiations, Deborah Douglas, director of collections at the museum, secured the release of the printouts, and Burkey arranged to have them scanned. Then several volunteers helped with the tedious job of converting 3,500 page images to machine-readable text.

Meanwhile, Burkey was building not only a simulator, called the Virtual AGC, but also a new version of the assembler. (Initially he had no access to the source code for the original assembler, which in any case would not run on modern hardware.) A crucial test of the whole effort was running the transcribed Apollo 11 source code through the new assembler and comparing the binary output with the 1969 original. After a few rounds of proofreading and correcting—some of the scans were barely legible—the old and new binaries matched bit for bit.

In recent years printouts from several other Apollo missions have been made available to Burkey and his collaborators, mostly by members of the MIT team who had retained private copies. Those programs have also been scanned, transcribed, and reassembled. All the scans and the transcribed source code are available at the Virtual AGC website, http://ibiblio.org/apollo. Also posted there are programming manuals, engineering drawings, and roughly 1,400 memos, reports, and other contemporaneous documents.

The Apollo program might be considered the apogee of American technological ascendancy in the 20th century, and the AGC was a critical component of that success. I find it curious and unsettling that major museums and archives have shown so little interest in the AGC software, leaving it to amateurs to preserve, interpret, and disseminate this material. On the other hand, those creative and energetic amateurs have done a brilliant job of bringing the history back to life. Their success is almost as remarkable as that of the original AGC programmers.

Margaret H. Hamilton had no prior experience with computers in 1959 when she took a temporary job as a programmer. She was hired by Edward N. Lorenz, a meteorologist at the Massachusetts Institute of Technology (MIT), who was developing computer simulations of weather patterns. This work would soon lead to Lorenz’s discovery of the “butterfly effect” and the first stirrings of what came to be known as chaos theory. The simulations ran on a primitive computer called the LGP-30, which Hamilton sometimes programmed in raw machine language, the binary or hexadecimal numeric codes representing machine instructions.

Hamilton later worked on a much larger project called SAGE (Semi-Automatic Ground Environment), writing programs for a computer that filled a four-story building. SAGE was the nerve center of a radar network meant to detect long-range bomber attacks. It also became an influential experiment in interactive and real-time computing, where the machine responds to external events as they happen.

By 1963 Hamilton was ready to go back to school. She had a mathematics degree from Earlham College in Indiana and planned to continue her studies in pure mathematics at Brandeis University. But then came the opportunity to work on the Apollo missions. The MIT Instrumentation Laboratory offered her a position programming the onboard computers that would guide astronauts to the Moon and back.

Initially, Hamilton worked mainly on a subset of programs common to both the command module (CM) and the lunar module (LM) of the Apollo spacecraft, each of which had its own guidance computer. She also made a specialty of methods for detecting and coping with errors. Later she took on wider managerial responsibilities. During the later Apollo flights, she was director of software engineering for all onboard software.

Hamilton remained at the Instrumentation Laboratory (which became the quasi-independent Charles Stark Draper Laboratory) until 1976. In the 1980s she founded Hamilton Technologies, a firm that develops methods for creating highly reliable software, based in part on lessons learned in the Apollo program.

In 1986 Hamilton received the Ada Lovelace Award, established by the Association for Women in Computing. In 2016 she was awarded the Presidential Medal of Freedom. Now in her eighties, she remains CEO of Hamilton Technologies.

The interview below focuses on her years in the Apollo program, for the associated Computing Science column “Moonshot Computing” in celebration of the 50th anniversary of the Apollo missions. It was conducted by email in 2017 and has been edited for clarity and brevity.

Brian Hayes: The Apollo Guidance Computer (AGC) source code makes fascinating reading, partly for what it reveals about the nature of programming 50 years ago, but also because the code offers glimpses of the personalities and the social milieu behind the software. I was surprised to discover a certain playful irreverence in some of the subroutine names (POODOO, WHIMPER, SMOOCH, BURNBABY) and in comments (# SEE IF HE'S LYING, # OFF TO SEE THE WIZARD…). Given that Apollo was such a high-profile, high-stakes project, with the whole NASA bureaucracy looking over your shoulder, I had expected a carefully cultivated attitude of sober professionalism. But you folks seem to have had some fun! Can you tell me about that? Was this just MIT hacker culture on exhibit, or was it unique to the AGC group at the Draper laboratory? (I notice that the software for the AGS, the lunar module’s backup Abort Guidance System, written at TRW Inc., seems to be all-business.) Did you get any feedback from higher-ups about it? There must have been an underlying seriousness in your work (you all knew that lives depended on getting it right), which leaves me curious about the comic relief.

Margaret Hamilton: Each software culture has its own unique aspects. And, yes, there was definitely a "certain playful irreverence" that existed within the AGC on-board flight software group. This included the use of "colorful" names in the flight software and in related areas, such as the requirements documents and operational specifications. Also included were things such as "hidden" messages, some so tiny that one needed a magnifying glass to find them within the software and related areas. Other messages contained secrets, each of which was known only by its author, some of which will never be found out.

Having begun in the "field" at MIT in 1959 (with experiences before, during, and after the Apollo on-board flight software project), I worked on, or with, some projects that were not unlike the AGC group in this respect, such as SAGE at Lincoln Labs and the Hackers at Project MAC, both before my Apollo-related experiences; others were more like what you describe within the TRW AGS group. More often than not, the best times have had to do with the projects where the people were serious about their work, but at the same time they had fun with various aspects of comic relief, including things like giving parts of the on-board flight software funny or mysterious names.

Speaking of names, my first assignment on Apollo was to write software for unmanned missions. NASA was worried about the possibility of an abort taking place during one of these missions. The question was "what if it aborted?" And everybody said, "It just won't happen." "Oh, well, good. We'll give this one to Margaret because she's a beginner and it's never going to go there anyway, right." So I wrote this program in the software, which I named "Forget It," where it would go to if there was an abort. And sure enough, an unmanned mission aborted and the software went to "Forget It." All of a sudden I became an overnight expert, because I wrote the only program that mattered at that time! Well, fortunately, “Forget It” worked. But the fact that the mission actually aborted—I mean, these guys never thought they were going to make a mistake. So we could all make mistakes, right? Needless to say, no one who was there at that time forgot “Forget It.” Especially me!

Around the same time, I would hear these guys (all guys) talking. And they'd walk around and one of them would say, "How did you solve that problem?" And somebody else would say, "I used the Augekugel method." And I thought to myself, "I never heard of the Augekugel method. I've got to find out what this is. I can't let them know I don't know the thing that they keep talking about all the time." But I couldn't find out on my own what it was. So finally, I asked them, "What is this Augekugel method that you all talk about using when you solve problems?" Turns out it means "eyeballing" in German. These people were also at MIT/IL, but they were hardware engineers, not programmers. So, this kind of humor was part of the overall culture in general at MIT/IL.

Later in time, during the manned missions, I called our NASA contact at 4 AM just after we had completed one of the on-board flight software programs that we considered to be flight ready and therefore officially ready to deliver to NASA. (Several of us were celebrating the release with a bottle of wine.) Out of the names we had come up with that night, we chose a relatively conservative name to recommend to NASA (which was "Gorilla"). Sadly, NASA thought the name was not serious enough for prime time, so we had to change the name after all.

BH: Several spacecraft have been lost or crippled because of software errors, but the Apollo computers managed to keep going through all sorts of unexpected events. What was the secret of that resilience? I know you’ve continued to work on methods for avoiding errors since leaving the Apollo project, so I’m wondering what you see as the most important factors.

MHH: The software experience itself (designing it, developing it, evolving it, watching it perform and learning from it for future systems) was at least as exciting as the events surrounding the missions. Having been through these experiences, one could not help but do something about learning from them. With initial funding from NASA and DoD, we performed an empirical study of the Apollo effort that resulted in a theory that has continued to evolve, based on lessons learned from Apollo and later projects. From its axioms we derived a universal systems language together with its automation and its preventative systems and software paradigm.

We learned from our ongoing analysis that the root problem with traditional languages and their environments is that they support users in “fixing wrong things up” rather than in “doing things in the right way in the first place,” and that traditional systems are based on a curative paradigm, not a preventative one. It became clear that the characteristics of good design (and development) could be incorporated into a language for defining systems with built-in properties of control. This is how the Universal Systems Language (USL) came about.

Whereas most errors are found (if they are ever found) during the testing phase in traditional developments, with this approach, correct use of the language prevents ("eliminates") errors "before the fact." Much of what seems counterintuitive with traditional approaches becomes intuitive with a preventative paradigm: The more reliable a system, the higher the productivity in its development; less testing becomes necessary with the use of each new preventative capability.

We continue to discover new properties in systems defined with the language. Once we discovered that there are no interface errors in a system defined with it, it became clear that this property was the case with its derivatives as well (its software being one of them)—and integration within a definition, and from systems to software, is inherent. This means, among other things, that software systems can be automatically generated from their definitions (including USL's own automation, called the 001 tool suite), inheriting all of the properties of the definitions from which they came. Properties of preventative systems are especially important from the perspective of a system's real-time, asynchronous, distributed behavior. Such a system inherently lends itself to being able to detect and automatically recover from errors in real time.

For each new property discovered that, in essence, "comes along for the ride," there is the realization of something that will no longer be necessary as part of the system's own development process (see Hamilton, M. H., and W. R. Hackler. 2008. Universal Systems Language: Lessons Learned From Apollo. IEEE Computer: December 34–43. http://www.htius.com/Articles/r12ham.pdf).

Within the language, correctness is accomplished by the way a system is defined, having built-in language properties inherent in the grammar; a definition models both its application (for example, an avionics, banking, or cognitive system) and built-in software engineering properties of control into its own life cycle. Mathematical approaches are often known to be difficult to understand and are limited in their use for nontrivial systems. Unlike other mathematically based formal methods, USL extends traditional mathematics (e.g., mathematical logic) with a unique concept of control: Universal real-world properties—such as those related to time and space—are inherent, enabling it to support the definition and realization of any kind or size of system. The formalism along with its mathematical rigor is “hidden” by language mechanisms derived in terms of that formalism.

One might ask, "How can one build a more reliable system and at the same time increase the productivity in building it?" Take, for example, testing. Correct use of the language prevents ("eliminates") the majority of errors, including all interface errors (more than 75 percent of all errors) within a system model and its derivatives. These errors are typically found (if they are ever found) during the testing phase in traditional developments, and interface errors are usually the most subtle of errors. USL's automation statically hunts down any errors resulting from the incorrect use of USL. When an object type is changed, the status of all of its uses (i.e., the functions/processes interacting with and impacted by objects of that type) is demoted; the processes are then re-analyzed by the analyzer in light of the type changes to reestablish the status of the uses of that type. Testing for integration errors is minimized because of the inherent integration of types-to-types, functions-to-functions, types-to-functions and functions-to-types in the system, resulting in everything inherently working together.
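The demote-and-re-analyze step described here can be pictured with a small dependency-tracking sketch. This is a hypothetical illustration in Python, not the 001 tool suite's analyzer: the `UseAnalyzer` class, its status strings, and the `check` callback (a stand-in for real static analysis of one function) are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Set


class UseAnalyzer:
    """Hypothetical sketch: track which functions use which object types and,
    when a type changes, demote and then re-verify only the affected uses."""

    def __init__(self, uses: Dict[str, Set[str]], check: Callable[[str], bool]):
        self.uses = uses      # type name -> names of functions that use it
        self.check = check    # stand-in for a real static analysis of one function
        every_function = set().union(*uses.values())
        self.status: Dict[str, str] = {f: "verified" for f in every_function}

    def change_type(self, type_name: str) -> List[str]:
        affected = sorted(self.uses.get(type_name, set()))
        for f in affected:    # step 1: demote the status of every use of the type
            self.status[f] = "unverified"
        for f in affected:    # step 2: re-analyze each use in light of the change
            self.status[f] = "verified" if self.check(f) else "error"
        return affected


# Demo with invented names: pretend 'display' no longer conforms to the new Vector.
analyzer = UseAnalyzer(
    {"Vector": {"navigate", "display"}, "Clock": {"schedule"}},
    check=lambda f: f != "display",
)
affected = analyzer.change_type("Vector")
```

Only the functions that use the changed type are demoted and rechecked; `schedule`, which uses only `Clock`, keeps its status untouched.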

Given a USL system definition, the automation, if asked, will automatically allocate resources and generate much of the design, and automatically generate all of the code for a software system (as well as its documentation); or, the request could as well be for commands to run some other kind of resource, such as a robot. Just as with the systems it is used to develop, USL's automation is completely defined within itself (i.e., using USL), and it is completely and automatically generated (and regenerated) by itself. It therefore has the same properties that all USL-defined systems have. The requirements-analysis component automates the process of going from requirements, to design, to code, and back again. Because it has an open architecture, USL's automation can be configured to generate to one of a possible set of implementations for a resource architecture of choice for a given target environment—for example, an operating system, language, communications, or database package; an internet interface; or the user’s own legacy code.

Maintenance shares the same benefits. The developer doesn’t ever need to change the code, because application changes are made to the USL definition—not to the code—and target architecture changes are made to the generator environment configuration—not to the code. Only the changed part of the system is regenerated and integrated with the rest of the application—again, the system is automatically analyzed, generated, compiled, linked, and executed without manual intervention.

For whatever success I have experienced in "methods for avoiding errors," much of the credit goes to the errors I had the opportunity of having some responsibility in making, without which we would not have been able to learn the things we did. Some were made with great drama and fanfare, and often with a large enough audience to not want such a thing to ever happen again! It is safe to say that the errors that have been prevented from happening would have resulted in even greater drama had we not adhered to a philosophy of prevention "before the fact!"

BH: Could you say something about how work was organized within the AGC software project? The organization charts show a division into groups focused on various functions (navigation, guidance, rendezvous, etc.), but how was work divvied up within the groups? Did people work individually on their own little section of code, or was it a more collaborative process? (I notice that a few sections of code list authors, but most don’t.) How were the pieces brought together? Could you run individual modules through the assembler, or did you have to compile the entire program in one big lump?

MHH: Of course, everyone has a unique perspective on how things were organized within the AGC on-board flight software part of the MIT Apollo project, depending on when a person joined the project, how long one was on the project, what one's experiences were within the "field" before joining the project, what one did while on the project, and where this fit into the overall structure within the project. Much "knowledge" was passed down (like limericks) from others who were involved in the project in earlier times under different circumstances than when the project was in its "heyday." In fact, my daughter, when she was about 4 or 5 years old, had her own perspective on how things were in the early days. I remember her asking us why the highest-level managers of the Apollo project (the "grey beards") did not do any work; she said "all they do is talk on the phone."

My own perspective of how things were then is a result of many things: My "software engineering" experiences working within the systems-oriented software areas for both the unmanned and manned missions (for both the Command Module, CM, and the Lunar Module, LM); my taking on added responsibilities as more people came aboard, first becoming responsible for all of the systems software, then adding on the responsibility for the CM; and around the time of Apollo 8 becoming responsible for the on-board flight software for all the manned missions (i.e., the software for the LM and the CM, and the flight software's systems-software shared by, and residing within, both the CM and the LM).

The Apollo on-board flight software, itself, was an asynchronous software system (i.e., a multi-programming environment where higher priority jobs interrupt lower priority jobs). The on-board flight software was developed to execute on an operating system that came with the AGC. It should be noted that the AGC on-board flight software was developed by the software group, but the AGC together with its operating system was developed by the hardware group.
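The rule that higher-priority jobs interrupt lower-priority ones can be illustrated with a toy discrete-time simulation. This is a sketch under stated assumptions, not a model of the AGC's actual operating system: the `schedule` function, the one-unit-of-work-per-tick model, and the job names `NAV` and `ALARM` are hypothetical. Following the practice Hamilton describes of giving every process a unique priority, the sketch rejects duplicate priorities so the choice of which job runs next is never ambiguous.

```python
import heapq
from typing import Dict, List, Tuple

# tick -> list of (priority, job name, units of work); larger priority wins
Arrivals = Dict[int, List[Tuple[int, str, int]]]


def schedule(arrivals: Arrivals, max_ticks: int = 1000) -> List[str]:
    """Run one unit of work per tick, always on the highest-priority ready job.

    A newly arrived job with a higher priority preempts the running job at the
    next tick; the preempted job resumes once nothing more urgent is ready.
    """
    ready: List[list] = []        # min-heap of [-priority, name, remaining work]
    used_priorities = set()
    trace: List[str] = []
    last_arrival = max(arrivals, default=-1)
    for tick in range(max_ticks):
        for priority, name, work in arrivals.get(tick, []):
            if priority in used_priorities:
                raise ValueError(f"priority {priority} is not unique")
            if work < 1:
                raise ValueError(f"job {name} needs at least one unit of work")
            used_priorities.add(priority)
            heapq.heappush(ready, [-priority, name, work])
        if ready:
            job = ready[0]        # heap top = highest priority
            trace.append(job[1])
            job[2] -= 1           # safe: unique priorities mean order depends only on job[0]
            if job[2] == 0:
                heapq.heappop(ready)
        elif tick >= last_arrival:
            break                 # nothing ready and nothing left to arrive
    return trace


trace = schedule({0: [(1, "NAV", 3)], 1: [(5, "ALARM", 2)]})
```

Here `ALARM` arrives at tick 1 and preempts `NAV`, which resumes only after `ALARM` finishes, so the trace is `NAV, ALARM, ALARM, NAV, NAV`.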

As the leader of the team, I was "in charge" of the people within the on-board flight software group (the "software engineering" team) and the on-board flight software itself. In addition to the software developed by our team, there were others whose code fell under our purview. "Outside" code could be submitted to our team from someone in another group to become part of the official on-board flight software program (e.g., from an engineer in the navigation analysis group). Once submitted to our team for approval, code immediately fell under the supervision of our team; it was then "owned by," and updated by, the software engineers to become part of, and integrated with, the rest of the software. As such, it had to go through the strict rules required of all the on-board flight software, enforced by and tested as a system (within a set of integrated systems) by the software engineers who were now in charge of that area of the software and the software areas related to it. This policy was in place to ensure that all the modules within the flight software—including all aspects of the modules such as those related to timing, data and priority—were completely integrated (meaning there would be no interface errors within, between, and among all modules, both during development and in real-time).

The task at hand included developing the CM, the LM, and the systems-software. Updates were continuously being submitted into the software from hundreds of people, over time and over many releases, for each and every mission (when software for one mission was often being worked on concurrently with software for other missions). The task included making sure everything would play together and that the software would successfully interface to, and work together with, all the other systems, including the hardware, peopleware, and missionware for each mission.

The biggest challenge: The astronauts’ lives depended on our systems and software being man-rated. Not only did it have to work, it had to work the first time. Because of the never-ending focus on making everything as perfect as possible, anything to do with the prevention of errors was not only not off the table, but it was top priority both during development and in real-time. Not only did the software itself have to be ultra-reliable, but the software would need to have the flexibility to detect anything unexpected and recover from it in real-time; that is, at any time during the entirety of a real mission. To meet the challenge, the software was developed with an ongoing, overarching focus on finding ways to capitalize on the asynchronous and distributed functionality of the system at large, in order to perfect the more systems-oriented aspects of the flight software.

Our software was designed to be asynchronous to have the flexibility to handle the unpredictable, and so that higher-priority jobs would have the capability to interrupt lower-priority jobs, based on events as they happened, especially in the case of an emergency. This goal was accomplished by our correctly (and wisely) assigning a unique priority to every process in the flight software, to ensure that all the events in the flight software would take place in the correct order and at the right time relative to everything else that was going on. Steps taken earlier within the software to create solutions within an asynchronous software environment became a basis for solutions within a distributed systems-of-systems environment. Although only one process is actively executing at a given time in a multiprogramming environment, other processes in the same system—those sleeping or waiting—exist in parallel with the executing process. With this situation as a backdrop, the priority display mechanisms (called Display Interface Routines) of the flight software were created, essentially changing the man-machine interface between the astronauts and the on-board flight software from synchronous to asynchronous, in which the flight software and the astronauts became parallel processes.

Such was the case with the flight software's error detection and recovery techniques, which included its system-wide “kill and recompute” from a "safe place" restart approach to its snapshot-and-rollback techniques, as well as its priority displays together with its man-in-the-loop capabilities, which allowed the astronauts' normal mission displays to be interrupted with priority displays of critical alarms in case of an emergency. The development and deployment of this functionality would not have been possible without an integrated system-of-systems (and teams) approach to systems reliability, and the innovative contributions made by the other groups to support our systems-software team in making this become a reality. For example, the hardware team at MIT changed their hardware and the mission-planning team in Houston changed their astronaut procedures—both working closely with us—to accommodate the priority displays for both the CM and the LM, for any kind of emergency and throughout any mission. In addition, the people at Mission Control were well prepared to know what to do should the astronauts be interrupted with the priority displays in the case of an emergency.
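The "kill and recompute" idea can be sketched generically as checkpoint-and-rollback: after each completed step the state is saved as a safe place, and if a step fails partway through, its partial work is discarded and the step is recomputed from the saved state. The Python below is a hypothetical illustration of that general pattern, not the Apollo restart logic; `run_with_restarts`, `TransientFault`, and the demo steps are invented for the example.

```python
import copy
from typing import Callable, Dict, List

State = Dict[str, float]
Step = Callable[[State], None]


class TransientFault(Exception):
    """Stands in for an unexpected event that interrupts a step midway."""


def run_with_restarts(state: State, steps: List[Step], max_restarts: int = 10) -> State:
    snapshot = copy.deepcopy(state)       # last known "safe place"
    restarts = 0
    i = 0
    while i < len(steps):
        try:
            steps[i](state)               # may leave state half-updated on failure
        except TransientFault:
            restarts += 1
            if restarts > max_restarts:
                raise
            state.clear()                 # kill the partial work...
            state.update(copy.deepcopy(snapshot))  # ...and roll back to the safe place
            continue                      # recompute the same step
        snapshot = copy.deepcopy(state)   # step finished: new safe place
        i += 1
    return state


# Demo with invented steps: 'flaky' corrupts x and then fails on its first call.
calls = {"flaky": 0}


def advance(s: State) -> None:
    s["x"] += s["v"]


def flaky(s: State) -> None:
    s["x"] += 100                         # bogus partial update
    calls["flaky"] += 1
    if calls["flaky"] == 1:
        raise TransientFault("interrupted")
    s["x"] -= 100
    s["v"] += 1


result = run_with_restarts({"x": 0.0, "v": 2.0}, [advance, flaky, advance])
```

The flaky step corrupts `x` before failing; rolling back to the snapshot means that bogus update never survives, and the final state is `{'x': 5.0, 'v': 3.0}`.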

Because it was not possible (or certainly not practical) on Apollo for us to test the software "before the fact" by "flying" an actual mission, it was necessary for us to test the software by developing a mix of hardware and digital simulations of every (and all aspects of an) Apollo mission—which included man-in-the-loop simulations (with real or simulated human interaction), and variations of real or simulated hardware and their integration—to make sure that a complete mission from start to finish would behave exactly as expected.

It was a system of checks and balances. Regarding the organization of things involved within the on-board flight software effort, at its highest level, there are two kinds that come to mind. One is the organization of the people involved in the development of the software, and the other is the organization of the software itself. A matrix-management approach was used for the organization of the people involved in the project for the manned missions. There were the line managers, each of whom was in charge of the people who were experts within a particular subject, and there were the project-oriented managers (one for the CM and one for the LM for each mission), each of whom served as a go-between for NASA and MIT.

This PDF is an excerpt from a summary of the MIT-IL Apollo project along with the Lab's official upper management "chart" created around the time of Apollo 8 (note the use of words instead of graphics to describe the organization). The write-up was put together by the Lab's financial people, at the request of MIT and NASA, in order for it to become part of NASA's public celebration of Apollo 11. It was written by MIT-IL for MIT from the perspective of MIT. Note that the on-board flight software group is referred to as Guidance Programs in the "chart," because it was the group responsible for the Apollo Guidance Computer on-board flight software. Eventually, the write-up was included as one of the exhibits, prepared by MIT together with NASA, to become part of the celebration of the Apollo 11 mission for the first landing on the Moon, at the Moon Show, Hayden Gallery, MIT, sponsored by the MIT Committee on the Visual Arts, in September 1969.

The responsibilities of the people within the on-board flight software group (the software engineers) included: the development of software algorithms designed by various senior scientists for the Apollo Command Module and Lunar Lander; the overall design of the structure (the "glue") of the software as an integrated system-of-systems; ensuring that all the modules within the flight software—including all aspects of the modules such as those related to timing, data and priority—were completely integrated; the design and development of the "systems-software"; and the design of the "software engineering" techniques, which included rules, methods, tools, and processes for ensuring that the software being developed would result in an ultra-reliable system (i.e., making sure that the software would have no errors, both during development and in real-time). Because of these requirements, the team developed and evolved "software engineering" techniques for the development of the software, the testing of the software (which included six formal levels of testing) within a system-of-systems environment, and the management of evolving and daily releases that contained and documented everyone's most recent changes (and the reason for the changes) for each and every mission. Methods and tools evolved for these kinds of software-management techniques as well. A case in point is when I made a change one day within the systems software (which resided within both the LM and the CM software). Because of an error I made that day, everyone's tests using the new release crashed and there was a very long line in front of my door the next day. I immediately came up with the "new concept" of having everyone (including myself) test their new changes in an off-line version before putting them into the official version.

An invaluable position within the team was that of the "rope mothers." A rope mother was the designated caretaker of all of the on-board flight software code submitted for a particular Apollo mission for either the LM or the CM. For example, one rope mother was the caretaker for the CM software code for Apollo 8, another for the CM for Apollo 11, another for the LM for Apollo 11, etc. A rope mother would monitor, analyze, and eyeball all of the code in his designated area, throughout all of the official on-board flight software releases and their interim updates, from implementation through testing, looking for problems such as violations of coding rules or interface errors. Several rope mothers were in the on-board flight software group at a given time, because software for several missions was usually being developed concurrently. Rope mothers could have been male or female.

Regarding collaboration—whether it took place among coworkers or between the organizations involved, working with others, interaction with others, interfacing to others, learning from one another, working on things together, working out things together—it was more often than not an iterative effort, such as with RAD or spiral development, that was in play. That iteration also happened between developers or between the developers and users (both on a lower and higher level, such as between MIT and NASA). We all worked together amazingly well, no doubt partly because of the dedication of everyone involved (and, as you suggest, the fun we all had during the whole process). And, it was partly because of the evolution of the project based on lessons learned from the mistakes we made and what we did about them to prevent them from happening in the future (such as was the case with the introduction of off-line versions)!

BH: Of course, there’s also the gender question. I’ve read that you were the only woman in the AGC software group for several years, at a time when the group was growing from a few dozen workers to a few hundred. Your colleagues obviously appreciated your abilities, because they put you in charge, and yet no other women were hired. What was going on? I’d love to know what you thought of the situation then, and now.

MHH: During the days of Apollo, my colleagues—including the AGC on-board flight software engineering team, for which I became responsible, and those from other groups (e.g., the hardware people) with whom I interfaced and worked—were mostly male, all of whom I worked with side-by-side to solve challenging problems and meet critical deadlines. I was so involved in what we were doing, technically, that I was oblivious to the fact that I was working with men, or that most of the groups were men. We were all more likely to notice if someone was a “second-floor person,” a "hardware guy,” a “software guy,” a "DAP person,” an "equation guy," a “rope mother (also referred to as an assembly control supervisor),” or a “systems-software person.” If and when something did come up at work that seemed unfair—for example, a woman being compared to a male counterpart—I would find a way to fix it.

I do not remember it being "difficult" at that time, keeping in mind that some things that were acceptable then would not be acceptable now, and vice versa. It helps to consider the culture in the 1960s with its Mad Men–like existence. Women were expected and strongly encouraged to stay home, have kids and be the caregivers; men were expected to go to work and be the breadwinners. Women who did work, especially when they had children, were often criticized by both men and women. Banks required a woman to have her husband's permission to take out a loan, whereas a man was not required to have his wife's permission. An excuse often given for why a woman should make less money, for the same position as a man, was that her husband would support her.

Too often it is the symptoms of the problem that are being addressed by well-meaning efforts today, when the real problem has been, and still is, the culture. It is still not uncommon for an organization to pay women lower salaries than men for the same position, and to relegate women to the lower positions in an organization. And if not, women often have to work or fight harder than their male counterparts to be an exception. Most would agree that the field is still dominated by men, and that discrimination does exist. Indeed, some things seem to have gone backwards and are more difficult now than they were in the 1960s. Some ways in which discrimination manifests itself can be quite different today, especially now that we have the internet.

Unfortunately, various types of communication over the internet can serve as convenient places to "hide" in, encouraging "faceless," pervasive practices, making it harder than before to confront those intent on promoting and perpetuating disinformation that can be harmful to those on the receiving end. A case in point is the use of historical revisionism, in any form (or means) conceivable, to minimize (or ignore) the accomplishments of an individual or a group of individuals, a not uncommon practice when it comes to the effect it can have on women and minorities. Solving just this one part of the problem, itself, is indeed a challenge that can only be totally addressed in the large.

One seemingly small event can change everything for better or for worse, because everything is somehow related to everything else. Every event impacts our culture in one way or another, and therefore impacts women and minorities as to whether they can even do something or not; kids might think, well, I can't do that because I’m this or I’m that. Others could be adversely influenced with respect to their treatment of people who are not like them. Until we start making changes, and until our leaders stop admiring people who do things that encourage discriminatory practices, we have a problem.

When the most powerful and influential leaders and organizations in the world treat women and minorities as equals and make it possible for women to hold the highest positions (not "almost" the highest) in their organizations, equal (not "almost" equal) to what is available to men, we all benefit, including the leaders and organizations themselves. When large corporations refuse to conduct business with countries and corporations who do not allow women to have the same rights as men, we all benefit. The more all of us work to uncover, or better yet, prevent "before the fact" discriminatory practices, and the more those in power promote equality and put into effect nondiscriminatory practices, the more we all benefit.
