This Article From Issue

January-February 2004

Volume 92, Number 1

DOI: 10.1511/2004.45.0

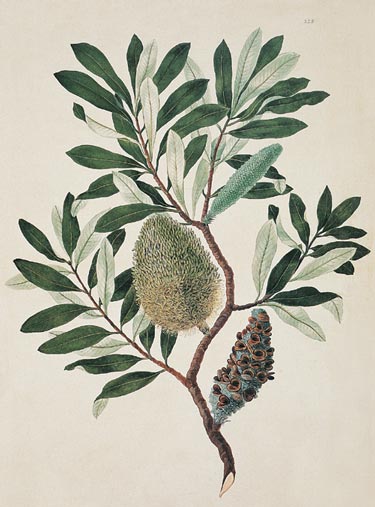

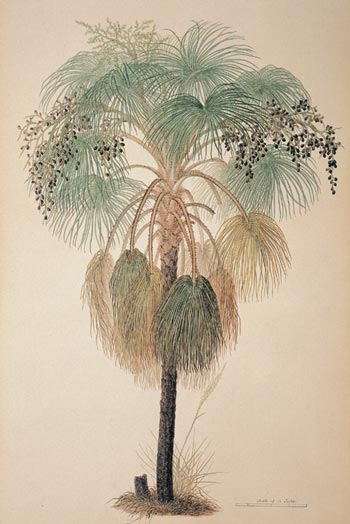

Botanist, taxonomist and plant collector Sandra Knapp has put together an eclectic coffee-table–sized history of plants, flowers and botanical exploration illustrated with glorious color reproductions of paintings from London's Natural History Museum. Plant Discoveries: A Botanist's Voyage Through Plant Exploration (Firefly Books, $60) profiles 20 plant families, including peonies, poppies, roses, irises, tulips, cacti, conifers and daffodils. The coast banksia (Banksia integrifolia) at right was collected at Botany Bay by Joseph Banks and Daniel Solander on Captain Cook's expedition in the 1770s. At left is a fan palm (Livistona humilis) sketched by Ferdinand Bauer in January 1803 at Blue Mud Bay in Australia's Northern Territory.


From Plant Discoveries: A Botanist's Voyage Through Plant Exploration.
