May-June 2009, Volume 97, Number 3, page 244

DOI: 10.1511/2009.78.244

WHAT IS INTELLIGENCE? Beyond the Flynn Effect. James R. Flynn. xii + 216 pp. Expanded edition. Cambridge University Press, 2009. $18.99 paper.

James Flynn is best known for having discovered a stubborn fact. In a series of papers culminating in the classic 1987 article “Massive IQ Gains in 14 Nations: What IQ Tests Really Measure,” he established that in every country where consistent IQ tests have been given to large numbers of people over time, scores have been rising as far back as the records go, in some cases to the early 20th century. What Is Intelligence? is Flynn’s attempt to explain this phenomenon, now known as the Flynn effect.

The makers of IQ tests conventionally proceed as follows: They take the scores of a reference sample of test takers, weight them, add them up and transform them to fit a Gaussian probability distribution (the bell-shaped curve), with a fixed mean IQ of 100 and standard deviation of 15. The reference sample is supposed to be representative of the population. The scores of later test takers are, in essence, computed by seeing where their raw scores fall in the distribution of the reference sample and reading off the corresponding Gaussian value.
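A minimal sketch of the last step of that procedure, assuming the raw scores have already been weighted and summed, and assuming a raw score's position is its mid-rank percentile in the reference sample (real publishers smooth their norm tables in more elaborate ways). `make_norm` is a hypothetical helper; `statistics.NormalDist.inv_cdf` is Python's standard Gaussian quantile function. The usage lines show the point made in the next paragraph: identical answers earn different IQs against different reference samples.

```python
from statistics import NormalDist

def make_norm(reference_raw_scores):
    """Hypothetical helper: return a function that maps a raw score to an IQ
    by locating it in the reference sample and reading off the Gaussian
    (mean 100, SD 15) value at that percentile."""
    ref = list(reference_raw_scores)
    n = len(ref)
    gaussian = NormalDist(mu=100, sigma=15)

    def to_iq(raw):
        below = sum(1 for r in ref if r < raw)
        ties = sum(1 for r in ref if r == raw)
        # Mid-rank percentile: tied reference scores count half below, half above.
        p = (below + 0.5 * ties) / n
        # Clamp so raw scores outside the reference range still map to finite IQs.
        p = min(max(p, 0.5 / n), 1 - 0.5 / n)
        return gaussian.inv_cdf(p)

    return to_iq

# Two reference samples with the same spread; the later one scores 10 raw points higher.
iq_old_norms = make_norm(range(1, 100))    # raw scores 1..99
iq_new_norms = make_norm(range(11, 110))   # raw scores 11..109

print(round(iq_old_norms(50), 1))  # 100.0, the median of the old sample
print(round(iq_new_norms(50), 1))  # lower: the same answers against newer norms
```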

Thus two test takers who give exactly the same answers can get different IQ scores if normed against different reference samples. Test makers periodically renorm their tests, keeping the mean at 100, but the same score can represent very different levels of absolute performance. Flynn examined the raw scores for tests that had kept the same questions over time. He found that scoring 100 today requires more right answers than scoring 100 did in 1950, which in turn required more right answers than doing so had in about 1900. The rate of gain has varied from country to country and from test to test. In some cases there has been a gain of only a few points over a half-century, but in others, scores have risen by 6 or 7 points per decade.

On average, measured IQ has been rising at roughly 3 points per decade across the industrialized world for as far back as the data go. This means that someone who got a score of 100 on an IQ test in 1900 would get a score of only 70 for the same answers in 2000. This is the Flynn effect.
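The 1900-to-2000 figure is simple arithmetic, sketched below under the assumption (implicit in the "on average" figure) of a constant gain of 3 points per decade and a purely linear drift in the norms. `rescore` is a hypothetical helper for illustration, not a procedure test publishers use.

```python
def rescore(iq, normed_year, renormed_year, gain_per_decade=3.0):
    """Hypothetical helper: the IQ the same answers would earn against norms
    from `renormed_year`, assuming the population gains a constant
    `gain_per_decade` points every ten years."""
    decades = (renormed_year - normed_year) / 10
    return iq - gain_per_decade * decades

print(rescore(100, 1900, 2000))  # 70.0
```

A faster national rate can be passed in directly, e.g. `rescore(100, 1950, 2000, gain_per_decade=6.5)`.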

Flynn easily swats down some proposed explanations for the effect. It is too large, too widespread and too steady to be due to improved nutrition, greater familiarity with IQ tests or hybrid vigor from mixing previously isolated populations. (Nobody seems to have suggested that modern societies have natural or sexual selection for higher IQ, but the numbers wouldn’t add up in any case.) So either our ancestors of a century ago were astonishingly stupid, or IQ tests measure intelligence badly.

Flynn contends that our ancestors were no dumber than we are; rather, most of them used their minds in different ways than we do, ways to which IQ tests are more or less insensitive. That is to say, we have become increasingly skilled at the uses of intelligence that IQ tests do catch. Although he doesn’t put it this way, Flynn thinks that IQ tests are massively culturally biased, and that the culture they favor has been imposed on the populations of the developed countries (and, increasingly, the rest of the world) through cultural imperialism and social engineering.

Flynn cites a hypothetical, but typical, test question: “How are rabbits and dogs alike?” Answers such as “both destroy gardens,” “both are dinner in some countries and pets in others,” or “you can use dogs to hunt rabbits” are true, but the response the IQ testers want is “both are mammals.” The question tests not knowledge of the world or of functional relationships but mastery of particular abstract concepts, which the test makers have themselves internalized as trained scientific professionals and literate intellectuals.

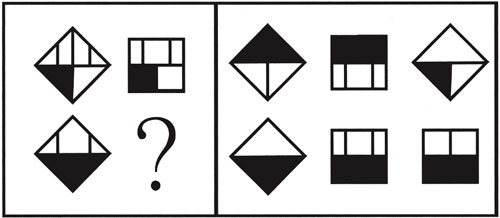

From What Is Intelligence?

IQ tests also reward certain problem-solving abilities—what Flynn calls “problems not solvable by mechanical application of a learned method.” He cites tests of similarities and analogies, and pattern-completion tests, such as Raven’s Progressive Matrices. In the latter, each question is a series of line drawings followed by a collection of drawings from which the test taker must pick the one that completes the sequence. When J. C. Raven developed the test in 1936, he claimed it measured the ability to discover patterns, which was for him the essence of intelligence. Raven’s test is often said (without good evidence) to suffer little or no cultural bias. Yet it is on tests of this type that the Flynn effect is strongest; gains in IQ scores of at least 5 points per decade have been seen. In the Netherlands, for decades all 18-year-old males drafted into the military were given the test, and those who took it in 1982 scored 20 points higher than those who had taken it in 1952.

Scores have risen because our way of thinking has shifted, Flynn says—we have been “liberated from the concrete” and have put on “scientific spectacles.” He claims the Flynn effect is a consequence of changes in the way people live and the skills they cultivate—changes brought about by the Industrial Revolution. We now keep dogs as pets, not to hunt with. At school we learn to read, and also to think abstractly and with a common set of abstractions. Flynn cites the work of A. R. Luria, who claimed, based on fieldwork among peasants and nomads in Uzbekistan in the 1930s, that abstract reasoning skills develop in tandem with literacy, schooling and participation in the modern economy. Luria’s work has flaws (an Uzbekistani peasant with abstract reasoning skills, on meeting a Communist official asking strange and leading questions, would have had good reasons to play dumb), but his findings are broadly consonant with later work on cross-cultural psychology.

There is a broader connection, which Flynn does not make, to work by historians and sociologists on the links between industrialization, nationalism and schooling. Public schools in the United States, for instance, were consciously used to make the country a melting pot, turning descendants of dozens of culturally heterogeneous immigrant groups into a more or less unified people. Similar processes took place across developed countries in the late 19th and early 20th centuries—and in much of the rest of the world later on. Cultural elites turned “peasants into Frenchmen” (in historian Eugen Weber’s phrase)—or Americans, or Uzbeks. Styles of thought previously limited to a small minority of literate specialists were made a part of everyone’s education.

As the sociologist Ernest Gellner emphasized, this was not just an exercise in cultural domination. In industrial societies, people routinely face strangers and novelties. Action cannot be guided by custom, nor can people rely on traditional skills. Workers must deal with machines and written communications, and many need some mastery of the abstract concepts that make technology comprehensible. Everyone encounters large formal organizations, and many people work in them. All of this moved us toward standardized, literate cultures that reward abstract thinking and the willingness and ability to follow general rules.

These transformations did not create new ways of thinking so much as spread ones that had existed for millennia in small pockets. If you had asked a medieval scholar such as Averroës or William of Ockham, “How is a rabbit like a dog?,” he would have replied that both are species of the genus “quadruped animals.” Intellectuals of that era were already “liberated from the concrete,” but their system of abstractions was somewhat different from ours.

Two consequences follow for IQ tests. First, schooling should increase IQ scores. Although Flynn does not address this, the best estimates (for example, those by Christopher Winship and Sanders Korenman) show that, at present in the United States, each additional year of secondary education increases IQ by between 2 and 4 points. (These estimates control for early-childhood IQ.) If this holds over time, then in order to account for the long-term IQ gains of the Flynn effect, U.S. educational attainment would have to have risen by one year per decade (slightly more than it actually did).
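The "one year per decade" requirement is a single division: the average Flynn-effect gain per decade over the estimated gain per year of schooling. Taking the figures above at face value, and assuming the per-year effect is constant and additive (the "if this holds over time" caveat):

```latex
\frac{3\ \text{IQ points per decade}}{4\ \text{IQ points per school year}} = 0.75\ \text{years per decade},
\qquad
\frac{3}{3} = 1\ \text{year per decade},
\qquad
\frac{3}{2} = 1.5\ \text{years per decade}
```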

Second, gains on IQ tests should vary depending on the content of the tests, being highest on those that rely most on mastering abstract taxonomies and solving problems on the spot. This is precisely what we find. Gains are lowest on items that test vocabulary, arithmetic and general information (for example, “On what continent is Argentina?”).

That such trivia-quiz questions appear on IQ tests at all brings us to the title of Flynn’s book. He begins well, correctly saying that the task is to take a pretheoretical notion of intelligence and shape it into something that is a part of a properly explanatory mechanism. His pretheoretical notion is that “intelligence” roughly means how well and how quickly someone learns—that is, intelligent people learn better and faster. This intuition is plausible but is not compelling. John Dewey, for instance, said intelligence was the “capacity to estimate the possibilities of a situation and to act in accordance with [that] estimate.” This is the intelligence of Odysseus, the man who is never at a loss, and it leads to a rather different theory. After all, “Those who learn best and fastest are the ones who always know what to do” is not a tautology!

Even granting Flynn’s definition, it doesn’t follow that intelligence is a single attribute. Learning better or faster depends on what’s being learned, on what’s already known, on how people try to learn, on how (or whether) others teach them, and so forth. Flynn knows this, and says intelligence consists of the combination of “mental acuity,” “habits of mind,” “attitudes,” “knowledge and information,” “speed of information processing” and “memory.” He also says that, in a narrow sense, intelligence is just mental acuity, “the ability to provide on-the-spot solutions to problems we have never encountered before.” There may actually be one such ability, completely independent of problem content; however, this is not obvious.

The trouble is that Flynn basically stops with this list, which only gets us from “Jack scored well and solved many problems because he is very intelligent” to “Jack solved many problems because he has lots of ability to provide solutions to problems.” As an explanation, this is no better than saying, with the doctors in Molière’s comedy Le malade imaginaire, that opium causes sleep because of its “dormitive power.” Such statements at most point to what needs explaining.

Flynn’s attempt to explicate intelligence is unsatisfactory, but it does suggest what is relevant to a good explanation. This is far superior to the long-standing tendency in IQ research to rely on statistical methods, especially factor analysis, to determine what matters. Factor analysis takes variables that are correlated with one another and constructs new, unobserved variables—“factors”—that can reproduce the observed correlations. The model supposes that the observed variables are directly correlated with the factors, and only indirectly correlated with one another. One can thus reduce many measured values to estimates of a few factors, without losing information about the correlations.
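A small simulation can make the one-factor model concrete. The sketch below assumes a single standard-normal factor `F` and observed variables `X_i = lam_i * F + sqrt(1 - lam_i**2) * e_i` with independent noise; the loadings 0.8, 0.6 and 0.5 are arbitrary choices for illustration. The model predicts that two observed variables correlate at the product of their loadings, and that once the factor is subtracted out, what remains is uncorrelated.

```python
import random

def pearson(x, y):
    """Sample Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def simulate_one_factor(loadings, n=50_000, seed=0):
    """Draw n cases of X_i = lam_i * F + sqrt(1 - lam_i**2) * e_i,
    with F and every e_i independent standard normals."""
    rng = random.Random(seed)
    factor = [rng.gauss(0, 1) for _ in range(n)]
    observed = []
    for lam in loadings:
        noise_sd = (1 - lam ** 2) ** 0.5
        observed.append([lam * f + noise_sd * rng.gauss(0, 1) for f in factor])
    return factor, observed

loadings = [0.8, 0.6, 0.5]
factor, observed = simulate_one_factor(loadings)

# The model predicts corr(X_0, X_1) = 0.8 * 0.6 = 0.48.
r01 = pearson(observed[0], observed[1])

# Subtracting each variable's share of the factor leaves independent noise.
resid0 = [x - loadings[0] * f for x, f in zip(observed[0], factor)]
resid1 = [x - loadings[1] * f for x, f in zip(observed[1], factor)]
r_resid = pearson(resid0, resid1)

print(round(r01, 3), round(r_resid, 3))
```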

The components of IQ tests are all positively correlated. Factor analysis produces a “general factor,” g, with subtests more or less positively correlated with it. To simplify slightly but not unfairly, what currently makes something acceptable as an IQ test or test question is that it correlates sufficiently strongly with things already accepted as IQ tests or test questions, and so with g.
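The acceptance rule described here can be sketched as an item-total correlation filter. Both the criterion and the 0.3 cutoff are assumptions for illustration (real item analysis is more involved), and `accept_items` and the toy scores for six test takers are hypothetical.

```python
def pearson(x, y):
    """Sample Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def accept_items(battery_totals, candidates, threshold=0.3):
    """Keep the candidate items whose scores correlate with the total on the
    already-accepted battery at or above `threshold`."""
    return [name for name, scores in candidates.items()
            if pearson(scores, battery_totals) >= threshold]

# Invented scores for six test takers.
battery_totals = [10, 12, 15, 18, 20, 25]
candidates = {
    "similarities": [1, 1, 2, 2, 3, 3],    # tracks the existing battery
    "unrelated_item": [3, 1, 2, 3, 1, 2],  # does not
}
print(accept_items(battery_totals, candidates))  # ['similarities']
```

Whatever survives this filter is, by construction, positively correlated with the battery, which is the circularity the next paragraphs take up.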

Factor analysis is harmless as data reduction, but it is tempting to “reify” the factors, to suppose that they are the hidden causes behind the observations. It’s a temptation that many IQ testers have failed to resist. Flynn protests the “conceptual imperialism” of g, correctly insisting that these techniques at most discern correlation arising from complicated mixtures of our current social arrangements and actual functional or causal relationships between mental abilities. Factor analysis cannot undo this mixing and gives no reason to expect that “factor loadings” will persist. Indeed, the pattern of Flynn-effect gains on different types of IQ test is basically unrelated to the results of factor analysis.

Ultimately the enterprise rests on circularities. It’s mathematically necessary that any group of positively correlated variables has a “positively loaded” general factor. But positive correlation does not imply common causation. Since IQ test questions are selected to be positively correlated, the appearance of g in factor analyses just means the calculations were done right. The only parts that are not mathematical tautologies or truths by construction are the facts that (1) it is possible to assemble large batteries of positively correlated questions, and (2) test scores correlate with nontest variables, although more weakly than one is often led to believe. Flynn, however, seems to attribute too much inferential power to factor analysis, even though he correctly says that it has contributed little to our understanding of the brain or cognition.
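The point can be illustrated under stated assumptions. Below, scores come from a sampling model in the spirit of the one Godfrey Thomson proposed as an alternative to Spearman's g: each test sums a random subset of many independent, equally weighted abilities, and no variable in the model stands in for g (the sizes are arbitrary). Every pair of tests still comes out positively correlated, and the first principal component, used here as a simpler stand-in for a fitted factor model, has all-positive loadings, as the Perron–Frobenius theorem guarantees for any matrix with positive entries.

```python
import random

def pearson(x, y):
    """Sample Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def sampling_model(n_people=2000, n_abilities=300, n_tests=6, subset_size=90, seed=1):
    """Each test score is the sum of a random subset of independent abilities;
    tests correlate only through the abilities their subsets happen to share."""
    rng = random.Random(seed)
    subsets = [rng.sample(range(n_abilities), subset_size) for _ in range(n_tests)]
    scores = [[] for _ in range(n_tests)]
    for _ in range(n_people):
        abilities = [rng.gauss(0, 1) for _ in range(n_abilities)]
        for t, subset in enumerate(subsets):
            scores[t].append(sum(abilities[a] for a in subset))
    return scores

def first_component_loadings(corr, iters=500):
    """Leading eigenvector of `corr` by power iteration, scaled by the square
    root of its eigenvalue so the entries read as loadings."""
    n = len(corr)
    v = [1.0] * n
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * corr[i][j] * v[j] for i in range(n) for j in range(n))
    return [x * eigenvalue ** 0.5 for x in v]

scores = sampling_model()
corr = [[pearson(a, b) for b in scores] for a in scores]
loadings = first_component_loadings(corr)

# Smallest between-test correlation, then the "general factor" loadings.
print(round(min(corr[i][j] for i in range(6) for j in range(6) if i != j), 2))
print([round(x, 2) for x in loadings])
```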

Given Flynn’s idea that intelligence is how well and quickly we learn, IQ tests are an odd way to measure it. They do not set learning tasks and measure performance within a fixed time. At best they gauge past learning, which can indirectly measure the capacity to learn quickly and well but would be confounded with things like executive function and current and past motivation. Experiments show that on Raven’s Progressive Matrices test, a quarter of an hour of motivational priming can be worth a decade or more of the Flynn effect.

The reader may protest that, surely, at least the mathematical questions on IQ tests are objective. This mistakes the issue. If asked to continue the sequence “1, 1, 2, 3, 5,” many people would recognize the Fibonacci sequence and say “8.” But there are infinitely many other sequences where the next number is 7 (for example, “pick the largest prime number less than or equal to the sum of the previous two numbers”), or even 11 (“pick the smallest prime number greater than or equal to the sum of the previous two numbers”). What’s tested by Raven’s Progressive Matrices is not how well you can find patterns, but how well you can find the patterns Raven liked. In either case, responding appropriately requires certain culturally transmitted cognitive tools—and the motivation to use them on command.
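The sequence example can be checked mechanically. The sketch implements the three rules named above (the function names are illustrative), confirms that each reproduces 1, 1, 2, 3, 5 from the seed 1, 1, and shows that they then disagree.

```python
def is_prime(n):
    """Trial-division primality test, adequate for small n."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def fibonacci_rule(a, b):
    return a + b

def largest_prime_at_most_sum(a, b):
    n = a + b
    while not is_prime(n):
        n -= 1
    return n

def smallest_prime_at_least_sum(a, b):
    n = a + b
    while not is_prime(n):
        n += 1
    return n

def extend(rule, seed, length):
    """Grow a sequence by applying `rule` to its last two terms."""
    seq = list(seed)
    while len(seq) < length:
        seq.append(rule(seq[-2], seq[-1]))
    return seq

print(extend(fibonacci_rule, [1, 1], 6))               # [1, 1, 2, 3, 5, 8]
print(extend(largest_prime_at_most_sum, [1, 1], 6))    # [1, 1, 2, 3, 5, 7]
print(extend(smallest_prime_at_least_sum, [1, 1], 6))  # [1, 1, 2, 3, 5, 11]
```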

My rephrasing of Flynn in terms of cultural bias and imperialism may have given the wrong impression. (I admit to some deliberate provocation.) I am committed to the kind of culture IQ tests favor, as I suspect are most of the readers of this review. Progress of many kinds is difficult or impossible without scientific knowledge and the habits of abstract thought that go with it. Spreading this kind of thinking is a Good Thing, and worth great efforts. Yet it’s also true that thinking this way presupposes a specific kind of culture, and it is a mistake to confuse our favorite mental exercises with intelligence as such.

That mistake is particularly tempting because of how we use IQ tests. Up through the 19th century, members of elites mostly viewed democracy with emotions that ranged from ambivalence to terror, even in France and the United States. They saw the masses as incapable of thinking, let alone leading. Meritocracy was a later compromise with democracy: There would still be elite leaders, but they would be chosen on the basis of talent rather than birth. This ideal helped institutionalize IQ testing and reliance on that modified IQ test, the SAT.

Flynn’s arguments suggest that these fears and hopes were at most half right. The masses were not bad at thinking, or at managing their own affairs; they were just bad at thinking like intellectuals. Meritocracy, as Flynn says, is an incoherent ideal—even if we agreed on “merit,” and allocated rewards on that basis, the winners would use some of their resources to give their children unfair advantages. But spreading educational opportunities and opening up positions of influence to broader peaceful competition has been widely beneficial.

If Flynn is right, knowing how many picture-puzzles different cohorts of Dutch teenagers could solve is actually a window through which we can see a momentous change, the “liberation from the concrete,” not just among a few clerics and scribes, but as the common condition of humanity. It would almost be damning this book with faint praise to say that it’s a valuable addition to the IQ debate (although it is); it’s an important take on what we have made of ourselves over the past few centuries and might yet make of ourselves in the future.

Cosma Shalizi is an assistant professor in the statistics department at Carnegie Mellon University and an external professor at the Santa Fe Institute. He is writing a book on the statistical analysis of complex systems models. His blog, Three-Toed Sloth, can be found at http://bactra.org/weblog/.
