This Article From Issue

July-August 2021

Volume 109, Number 4

Page 206

There is perhaps no more stereotypical image of science run amok than Frankenstein’s monster, created by a man who manipulates life without regard to the ethical consequences. And there is certainly no field of science more likely to evoke that stereotype—in news stories and in popular protests—than biotechnology. Researchers in biotech really do combine cells from human and nonhuman species (hybrids known as chimeras) and engineer stem cells that can transform into other cell types, potentially even into human embryos. Insoo Hyun, the director of research ethics at the Harvard Medical School Center for Bioethics and a professor of bioethics at Case Western Reserve University School of Medicine, works to dispel myths about biotechnology and to develop standards for doing research in a scientifically productive but responsible way. He spoke with special issue editor Corey S. Powell about lessons from past controversies and about the potentials and concerns that lie ahead. This interview has been edited for length and clarity.


What are the most pressing issues in bioethics today?

Bioethics is a broad field! In general, classic research ethics and institutional review boards deal with what we call natural kinds: typical living entities such as ourselves, animals, and embryos. In my little corner of bioethics, I deal with the impact of new biotechnologies, technologies surrounding entities that have no straightforward analogs in nature and therefore do not fit neatly into the existing categories of research ethics. Take, for example, research using self-organizing embryo-like models or the integration of human cells into pigs. How much leeway do researchers have with what they have created and what they can do with it? Research institutions need a system of oversight, and care must be taken to know what is going on inside them: what is allowed and what is not. This concern extends all the way to the general public, because the public will start to raise those ethical questions, too.

Some of the questions bioethicists such as myself ask are foundational ones of oversight, such as what committee should review the work in question, what kinds of questions should the committee ask, and what counts as approval or disapproval. There are also more philosophical questions revolving around, for instance, the moral status of new biological entities created through genetic engineering and biotechnology. Science needs public support, so an important element in bioethics is communication. Overreactions and misinformation could lead to restrictive policies that might prematurely truncate progress in the field.

There was a lot of public debate about the use of stem cells for medical research, but it has recently died down. Was the scientific hype justified? What about the ethical concerns?

Most of the initial stem cell research was aimed at understanding the basis of development and the causes of disease at the cellular level. What got the public’s attention was the idea that such technology could one day provide replacement cells for patients with, for example, diabetes. It offered hope. On the other side of the coin, because these cells originally came from human embryos, the technology became closely linked to pro-life and abortion debates. Because there was little funding or support for this research at the federal level, we ended up with a war in the trenches between the cheerleaders and those who demonized the use of these cells. How did it get resolved? By developing alternative technologies based on the original studies that used human embryonic stem cells.

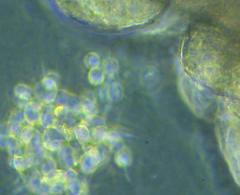

From a bioethical perspective, the introduction of induced pluripotent stem cells (iPSCs), embryonic-like stem cells generated from adult cells, resolved many of the controversies. We now have the technology to reprogram an adult skin cell into a stem cell and then manipulate a group of those cells into something that looks like, and starts to behave like, an embryo. Nonetheless, some research questions can still be answered only with human embryonic stem cells, such as those about very early human development. We could not have created iPSCs without the knowledge gained from human embryonic stem cells, so a lingering moral relationship exists between the two. But because we can now make stem cells that are not of embryonic origin, the focus is no longer on where the cells came from, but on what they can become and do.

Recently there have been ethical controversies over chimeras—engineered animals that contain cells from another species, even humans. What are the implications of chimera research?

These studies have a wide range of goals. Chimeras can be used to study the development and fate of newly introduced stem cells within the animal model, or to model diseases that are normally specific to humans and to do so in the context of a whole organism. These animal models can also serve more translational research, such as preclinical testing of stem cell–based therapeutic products (lab-grown pancreatic cells, for instance) before human trials, or, in a more pie-in-the-sky scenario, working out fully how to grow a variety of replacement tissues in vitro. The idea here is similar to 3D printing: If you understand how something is made from scratch, then you can write the instructions to make it happen. Further ahead, one goal is to grow transplantable organs from human stem cells in livestock animals for engraftment in patients. Chimeras, like iPSCs, will continue to be examined through the lens of bioethics, because their future uses, and the technologies that might be built on them, are subject to similar controversies.

What do you see as the biggest areas of ethical concern with human genetic engineering?

We can divide gene editing into three broad categories, which differ not only in the aims of the technology but also in the ethical issues they raise. The first is somatic cell gene editing, which changes the genetics of a living person in one particular part of the body, such as a genetically defective liver. A patient may receive a genetic change for their own benefit, such as to overcome sickle cell disease, but that change cannot be passed on to future generations. Then there are two forms of germline engineering: an in vitro–only method for research, in which embryos are genetically modified in a dish but not implanted in a womb, and the reproductive use of germline modification to make babies who can avoid inherited disorders.

Most people would rank their ethical concerns as increasing in the order listed here, but I would argue for reordering them. I think that somatic cell engineering deserves a lot more ethical scrutiny and discussion than it is currently given. In that realm, you have the possibility of unexpected outcomes in clinical trials and terrible things happening to real people like Jesse Gelsinger [see Treat Human Subjects with More Humanity]. Meanwhile, the third category, generating engineered offspring, is often considered the most controversial, but its practice is heavily restrained by funding agencies and is punishable by law in most countries.

You have been exploring the ethics surrounding another fairly new biotechnology: brain organoids, complexes of neural cells grown in the lab. What are the most immediate concerns with them?

There are a few. Organoids need to be validated as models of human development, and one way to do that is by comparing them with primary fetal tissue. Whether you generate the data directly from such tissue or rely solely on published data, organoid models need that validation. For brain organoid research to thrive, researchers must also be able to procure and use fetal tissue, and that access can be subject to legal restrictions influenced by, for example, the political administration in power.

Another issue is communicating organoid research to the public. The researchers who work with brain organoids are people too. Sometimes they have to interact with tissue donors and their families, for example when they need cells from a patient with a known or unknown neurological disorder to develop a brain organoid model. Researchers need to answer these families’ questions about the organoids and what they can do for others affected by similar disorders. There are a lot of emotions built into these interactions, and some researchers are not yet prepared for these discussions.

Lastly, there are questions about the motivations behind the wide array of things researchers can do with organoids. Some researchers want to study how brains develop; others want to use organoids for downstream applications, such as pairing them with devices to model human neural networks through computer engineering. When the research pushes more heavily into the engineering side, new ethical questions arise about the boundaries and limits on what can be made [see companion essay Minibrains: What's in a Name?].

How do you decide at what point a biological possibility becomes an issue that requires ethical regulation—for instance, in the case of human stem cell–based embryo modeling?

It is hard to know exactly when you have reached that point, because in this example the only way to answer definitively whether an embryo-like model is developmentally competent is to transfer it into a womb. But no institutional research committee would allow that, not even in nonhuman primates. Even if one did, the U.S. Food and Drug Administration would intervene, because this would be a highly manipulated construct aimed, in this case, at clinical purposes. At least in the United States, the most one can do is infer the potential from other data, from what you can analyze in the embryo model alone.

In addition, the National Institutes of Health follows the Dickey-Wicker Amendment, which prohibits federal funding for any experiments in which human embryos are created, harmed, or destroyed. The definition of a human embryo under this amendment is very broad and basically includes anything that could generate offspring, whether a product of fertilization, cloning, or other biotechnological approaches, or an entity made from human diploid cells. That last item would include an embryo model made from iPSCs. If the entity has developmental potential, then it falls under the current policies regulating embryo research using in vitro fertilization techniques. This approach is not limited to the United States; many countries are defining a human embryo not by how it was created, but by what it has the potential to become.

More fundamentally, how do you develop an ethical framework for truly new biomedical technologies?

When you do bioethics, you can do it either at the scholarly level or at the policy level. At the scholarly level, I can sit in my office, read the philosophical and scientific literature, come up with the best arguments for how to think about, say, these embryo models and their moral status, and generate my conclusions. All you are doing here is analyzing arguments and writing up solutions that put forward your own thinking on the particular issue. Then you publish your article and other people discuss it. That approach is fine, but the results do not always affect policy making. Another bioethical approach, one that usually does affect policy, is to work in teams with experts from different disciplines to arrive at practical guidelines or a broad ethical framework to guide the pursuit of science in a particular area. I have had the pleasure of working at both the scholarly and the policy levels. For example, the International Society for Stem Cell Research just released its 2021 research guidelines, which address chimeras, embryo models, and genome editing, among many other issues. I worked closely on them with a global team of science and ethics experts and even got to do some really interesting philosophizing in the process.

What’s an example of an ethical success story—ethics standards that helpfully guided a new biotechnology?

A good example revolves around the human cloning debate in 2005, when researchers hoped to use cloning techniques to generate stem cells genetically matched to particular patients. This would have involved transferring a patient’s DNA into an unfertilized human egg whose own nucleus had been removed. But back then, scientists did not have enough eggs for any kind of research, because we were not allowed to pay women for research participation (that is, for donating their eggs), and no one would do it for free. Women who had donated eggs at in vitro fertilization clinics knew that they would get a minimum of $5,000 from couples trying to conceive. These women knew they could be paid for their time, effort, and inconvenience, but only if they went through that very same egg procurement process for reproductive use. This seemed unfair to me. In 2006, I wrote a commentary in Nature in which I argued that there is good reason to pay healthy volunteers for research egg donation, just as you would pay anybody else who volunteers for an invasive procedure for basic research.

Many questioned my argument, because a few U.S. states had laws against compensating research egg donors and the National Academy of Sciences had deemed such payment unethical, but I wanted to at least air the rationale for what I believed. The result? The State of New York used my commentary to help develop its own policy, which allowed research egg donors to be compensated for their time, effort, and inconvenience. That ended up having an impact on science, because some people were persuaded, and that helped move forward a variety of basic research that depends on the procurement and use of human eggs.

In your work, you have to confront both personal morality and institutional morality. Do you find it difficult to navigate between the two?

I do not sense a disconnect between my academic work and how I feel about the limitations set in policy. There is, however, one notable exception: the 14-day limit on embryo research, which prohibits scientists from culturing human embryos in a dish beyond two weeks of continuous development. Some colleagues and I recently published an article advocating that some researchers be granted an exception to the rule to explore just a little beyond that limit, in slow increments. The 14-day limit was established in the early 1980s, when in vitro fertilization was just beginning and human stem cell technology simply was not there. Now, some 40 years later, the limit merits reevaluation. We recommend keeping the 14-day limit as the default policy while, for example, allowing a few qualified teams under close monitoring to explore a bit beyond it, report back the results, and then decide whether it is worth going further. I do not know whether anyone is going to adopt that as policy, but I do think that science policy makers have to think about it.

An important takeaway, especially for scientists, is that good guidelines and ethical standards do not get in the way of science. They help pave the way. The 14-day limit is a great example. Back in the early ’80s, at the beginning of in vitro fertilization and human embryo research, such boundaries were key because they carved out a playing field. Without those guidelines and the 14-day limit, we would not have had human embryonic stem cell research in the ’90s and the knowledge that came from it. As our latest article on revisiting the 14-day limit argues, this is also an area where people might now consider revising existing policies. When done right, policy can help pave the way for more good science.

When you try to anticipate new bioethical concerns, how far into the future do you look?

I typically like to look ahead in increments of five years. Although science moves quickly, one can get too far ahead, overreacting to possible but improbable scenarios in ways that can hinder science. For example, when Dolly the sheep, the first mammal cloned from an adult cell, was unveiled in 1997, people freaked out and wrongly imagined that going from sheep to human cloning would take only a short time. What nobody realized was that it is not that simple to go from the sheep model to human cloning. In fact, developing a cloning procedure for nonhuman primates took well over a decade. Yet some countries moved immediately to outlaw any type of human cloning with restrictive, ill-defined policies. Those regulations were so broad that they also affected cloning for basic research purposes.

At the International Society for Stem Cell Research, we are looking only five years out because if we try to determine guidelines based on what could happen—without knowing whether those scenarios are even possible—we might truncate research by restricting freedom of scientific exploration. For rapidly moving biotechnologies like the ones we are discussing here, it is best to proceed with guidelines that are flexible and responsive to the science, ones that can be fine-tuned in real time.

