This Article From Issue

July-August 2014

Volume 102, Number 4

Page 249

DOI: 10.1511/2014.109.249

In this roundup, associate editor Katie L. Burke summarizes notable recent developments in scientific research, selected from reports compiled in the free electronic newsletter Sigma Xi SmartBrief. Online: https://www.smartbrief.com/sigmaxi/index.jsp

How Eyes Sense Motion

Neuroscientists have known that the retina can detect motion, but no one knew how. Unable to map the neuronal circuitry on their own, neuroscientists at the Massachusetts Institute of Technology created a citizen-science, brain-mapping game called EyeWire. Players traced the paths of nerve cells through electron microscope images of a mouse retina, mapping how the neurons are wired together. The resulting map showed that a cell that relays information to the optic nerve is connected to two types of intermediary nerve cells, which link photoreceptors to these relaying cells. One type connects near the relaying cell's body and the other farther from it. The first type transmits signals with a time lag. Thus, even though two nearby photoreceptors detect an object moving across the visual field at slightly different times, the two signals reach the relaying cell at the same instant because of the delay. The authors suggest that the relaying cell fires when it receives these simultaneous signals, telling the brain that something is moving in the direction running from the near connection toward the far one. This study paves the way for experiments testing this model.

Kim, J. S., et al. Space–time wiring specificity supports direction selectivity in the retina. Nature 509:331–336 (May 15)
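The proposed mechanism is a delay-and-coincidence detector, and its direction preference can be checked with a few lines of arithmetic. The sketch below is a toy illustration, not the authors' model. It assumes the near-body input lags by a fixed `delay`, the far input has no lag, and the cell "fires" when both signals arrive within a tolerance `window`. All function names, units (milliseconds), and numbers are hypothetical.

```python
# Toy delay-and-coincidence detector. All names, units (ms), and values are
# hypothetical illustrations, not parameters from Kim et al.


def arrival_times(direction, travel_time, delay):
    """Return (near_arrival, far_arrival) at the relaying cell.

    direction: "outward" if the object reaches the near-body input first,
               "inward" if it reaches the far input first.
    travel_time: time the object takes to move between the two photoreceptors.
    delay: extra lag carried only by the near-body input.
    """
    if direction == "outward":
        near_hit, far_hit = 0.0, travel_time
    elif direction == "inward":
        near_hit, far_hit = travel_time, 0.0
    else:
        raise ValueError("direction must be 'outward' or 'inward'")
    # Only the near-body input is delayed; the far input arrives as soon as it is hit.
    return near_hit + delay, far_hit


def fires(direction, travel_time, delay, window):
    """Fire only if the two signals arrive within `window` of each other."""
    near_arrival, far_arrival = arrival_times(direction, travel_time, delay)
    return abs(near_arrival - far_arrival) <= window


if __name__ == "__main__":
    for direction in ("outward", "inward"):
        print(direction, fires(direction, travel_time=50.0, delay=50.0, window=10.0))
```

With the travel time matched to the delay, the outward-moving object produces simultaneous arrivals and the cell fires. Reversing the direction separates the two signals by twice the delay, so the cell stays silent. The same wiring responds only to motion in one direction.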

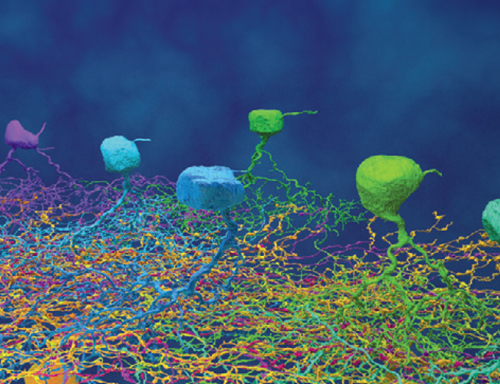

Cause of the Permian Extinction

The largest mass extinction, which occurred 252 million years ago at the end of the Permian Period, before dinosaurs walked the Earth, wiped out approximately 95 percent of all life. Its cause has long been debated. The extinctions coincided with increased volcanic activity in Siberia, but the evidence has not supported volcanism as the sole cause. MIT's Daniel Rothman and colleagues presented compelling evidence that an unprecedented boom in methane-producing microbes was responsible. They showed that methanogenic archaea acquired the ability to break down acetate, which had built up in sediments, through gene transfer from a bacterium about 250 million years ago, just before the mass extinction. Using a mathematical model, they showed that methane and other gases could have increased exponentially during this time frame, a pattern pointing to microbial activity rather than volcanoes as the source. Volcanism may still have played a supporting role: the eruptions released nickel, a limiting nutrient for methanogens, into sediment deposits. The unrestrained microbial growth could have made the oceans anoxic and acidic and raised atmospheric hydrogen sulfide to toxic levels, causing the die-offs.

Rothman, D. H., et al. Methanogenic burst in the end-Permian carbon cycle. Proceedings of the National Academy of Sciences of the U.S.A. doi:10.1073/pnas.1318106111 (Published online March 31)
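Why would exponential growth point to microbes rather than volcanoes? A source that emits at a steady rate adds a fixed amount per interval. A self-reproducing population multiplies its output by a fixed factor per interval. The toy sketch below contrasts the two signatures. It is only an assumption-laden illustration of that distinction and does not reproduce the study's actual carbon-cycle model. Treating steady outgassing as a constant-rate source is a simplification, and the function names and parameter values are hypothetical.

```python
import math

# Toy comparison, not the authors' model: a constant-rate source versus a
# self-reproducing source. Names and parameter values are hypothetical.


def steady_source(rate, times):
    """Cumulative output of a constant-rate source (a stand-in for steady outgassing)."""
    return [rate * t for t in times]


def self_reproducing_source(initial, growth_rate, times):
    """Output of a population growing as initial * e^(growth_rate * t)."""
    return [initial * math.exp(growth_rate * t) for t in times]


def step_ratios(series):
    """Ratio of each value to the previous one; a constant ratio signals exponential growth."""
    return [later / earlier for earlier, later in zip(series, series[1:])]


if __name__ == "__main__":
    times = range(1, 7)
    print("steady:", [round(r, 3) for r in step_ratios(steady_source(2.0, times))])
    print("microbial:", [round(r, 3) for r in step_ratios(self_reproducing_source(1.0, 0.5, times))])
```

The steady source's step-to-step ratios shrink toward 1 (2, 1.5, 1.333, and so on). The self-reproducing source keeps the same ratio, e^0.5, at every step. That constant multiplication factor is the hallmark of exponential growth.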

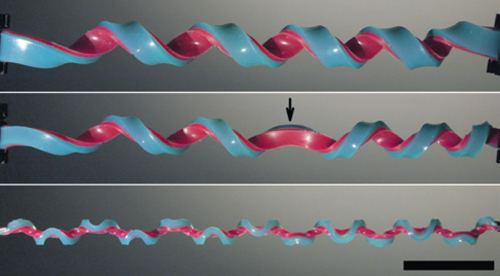

A New Hemihelix Shape

While studying how to make two-dimensional springs, Harvard University researchers were surprised to generate a shape they had never seen before: hemihelices exhibiting multiple reversals in spiral direction. A hemihelix can be formed by holding one end of a helical telephone cord in place while twisting the other end against the direction of its spiral. In nature, plant tendrils and wool fibers can form such shapes. To understand them, the researchers stretched, joined, and released strips of a stretchy polymer. They found that the height-to-width ratio of a strip's cross-section predicts both the transition from a helix to a hemihelix and the number of reversals in spiral direction; a wider strip produces a helix. The researchers note that hemihelices with multiple reversals have not been observed before because most human-made materials break before reaching the strains needed to induce these shapes.

Liu, J., J. Huang, T. Su, K. Bertoldi, and D. R. Clarke. Structural transition from helices to hemihelices. PLoS ONE doi:10.1371/journal.pone.0093183 (April 23)

Krypton in Ancient Ice

Air bubbles trapped in ice provide many clues to Earth's climate record. The oldest ice cores recovered so far are 800,000 years old. Oregon State University's Christo Buizert and colleagues used a new radiometric dating technique to accurately date ice samples from near the surface of Taylor Glacier in Antarctica at 120,000 years old. The technique relies on krypton-81, which has a much longer half-life than the commonly used carbon-14, giving it the potential to date ice as old as 1.5 million years. Krypton is a noble gas found in trace amounts in the atmosphere, and krypton-81 forms when cosmic rays collide with krypton isotopes. Until 2011, radiokrypton dating was impractical because of the sheer amount of ice required to detect such trace amounts. Advances in detector technology reduced the amount needed to a feasible, though still large, 350 kilograms. The method opens the possibility of studying the transition from shorter to longer glacial cycles.

Buizert, C., et al. Radiometric ⁸¹Kr dating identifies 120,000-year-old ice at Taylor Glacier, Antarctica. Proceedings of the National Academy of Sciences of the U.S.A. doi:10.1073/pnas.1320329111 (Published online April 21)
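The advantage of a long half-life follows from the exponential decay law: after time t, a fraction (1/2)^(t / T½) of the isotope remains, so the age is t = (T½ / ln 2) · ln(1 / fraction). The sketch below applies this law using the well-established half-lives of krypton-81 (about 229,000 years) and carbon-14 (about 5,730 years). It is a simplification that assumes the ice started with the modern atmospheric krypton-81 abundance. It ignores the measurement and calibration steps a real study requires, and the function names are hypothetical.

```python
import math

# Approximate half-lives in years (rounded, well-established values).
KR81_HALF_LIFE = 229_000
C14_HALF_LIFE = 5_730


def fraction_remaining(age_years, half_life):
    """Fraction of the original radioactive isotope left after age_years."""
    return 0.5 ** (age_years / half_life)


def age_from_fraction(fraction, half_life):
    """Invert the decay law: t = (half_life / ln 2) * ln(1 / fraction).

    Assumes (for simplicity) that the sample started with the modern
    atmospheric abundance, so `fraction` is measured relative to that.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return half_life / math.log(2) * math.log(1.0 / fraction)


if __name__ == "__main__":
    age = 120_000
    for name, half_life in (("krypton-81", KR81_HALF_LIFE), ("carbon-14", C14_HALF_LIFE)):
        print(f"{name}: {fraction_remaining(age, half_life):.3g} of the original remains")
```

For 120,000-year-old ice, roughly 70 percent of the krypton-81 survives, while less than a millionth of the carbon-14 does. Carbon-14 is therefore useless at this age, and krypton-81 still leaves a measurable signal.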

Minke Whales Say “Quack”

Seafarers have been puzzled for decades by a sound picked up in the oceans around Antarctica, fondly called the "bio-duck." The noises are prevalent throughout the Southern Ocean and also off western Australia. Marine biologist Denise Risch of the NOAA Northeast Fisheries Science Center unwittingly discovered that the sound came from Antarctic minke whales (Balaenoptera bonaerensis) when she and her colleagues tracked two of the whales with tags that included underwater microphones. The discovery opens up new ways to track these whales and to study their past behavior retroactively from existing recordings.

Risch, D., et al. Mysterious bio-duck sound attributed to the Antarctic minke whale (Balaenoptera bonaerensis). Biology Letters 10:20140175 (April 23)
