Searching for Dark Matter

By Daniel Bauer

Dark matter makes up the majority of material in the universe


Matter that does not produce or absorb light—dark matter—is everywhere in the universe. In the early 1930s, astronomer Fritz Zwicky of the California Institute of Technology noticed gravitational effects—unexpected velocities of galaxies in clusters—that could not be explained by the amount of normal matter, the stuff that makes up planets, stars, and us. Zwicky proposed that there was a large amount of dark matter in these clusters causing the unusual velocities. The idea wasn’t given much credence in the scientific community until the 1970s, when astronomers led by Vera Rubin at the Carnegie Institution began to notice similar effects in the velocities of stars on the outskirts of individual galaxies.

Today, most scientists accept the hypothesis that the majority—probably about 85 percent—of the matter in the universe is dark matter. Still, we don’t know anything about the makeup of dark matter, except that it is not the same as normal matter. It doesn’t emit or absorb light. It only interacts with normal matter through gravity and possibly another, very weak type of interaction. Consequently, particle theorists have come up with many hypotheses that might explain dark matter, all of which require the existence of new, stable, electrically neutral particles beyond those in the so-called Standard Model of particle physics.

Greg Stewart/SLAC National Accelerator Laboratory

The most accepted dark-matter model relies on what are called Weakly Interacting Massive Particles (WIMPs), although another top modeling prospect includes dark-matter particles of lower masses than WIMPs. But no matter their mass, if such dark-matter particles were created in the Big Bang, they would have provided the gravitational seeds that permitted the formation of galaxies. Simulations of dark matter produce a universe with clusters and superclusters of galaxies that is very much like what we see in our own.

The combination of astronomical evidence and theoretical models for dark matter has led particle physicists to conduct experiments that have attempted to directly detect dark-matter particles. The most likely way to detect a WIMP is to investigate whether it scatters elastically—like a billiard ball—when it hits a nucleus in an atom. Thus, if one could measure the energy of a single recoiling nucleus in a detector, without interference from any other background particles that might have caused such a recoil, one could claim evidence for detection of a dark-matter particle.
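To get a feel for the energies involved, the billiard-ball picture can be turned into a rough estimate. The sketch below is not taken from any experiment's analysis. It uses the standard nonrelativistic result for the largest energy that can be transferred in an elastic collision, E_max = 2μ²v²/m_N, where μ is the reduced mass of the dark-matter particle and the nucleus. The germanium target, the assumed typical speed of 220 kilometers per second, and the function name are illustrative choices.

```python
C_KM_S = 299_792.458   # speed of light in km/s
AMU_GEV = 0.931494     # atomic mass unit in GeV/c^2


def max_recoil_energy_kev(m_dm_gev, target_mass_amu=72.63, speed_km_s=220.0):
    """Maximum nuclear recoil energy (keV) for a head-on elastic collision.

    m_dm_gev        -- dark-matter particle mass in GeV/c^2
    target_mass_amu -- nuclear mass in atomic mass units (default: germanium)
    speed_km_s      -- assumed dark-matter speed relative to the detector
    """
    m_nucleus = target_mass_amu * AMU_GEV
    reduced_mass = m_dm_gev * m_nucleus / (m_dm_gev + m_nucleus)
    beta = speed_km_s / C_KM_S                    # speed as a fraction of c
    energy_gev = 2.0 * reduced_mass**2 * beta**2 / m_nucleus
    return energy_gev * 1e6                       # convert GeV to keV


if __name__ == "__main__":
    for mass in (1.0, 10.0, 100.0):
        print(f"{mass:6.1f} GeV/c^2 -> at most {max_recoil_energy_kev(mass):.4f} keV")
```

Under these assumptions, a particle of about 100 proton masses deposits at most a few tens of kiloelectron volts, whereas one of about one proton mass deposits only on the order of 10 electron volts, which is why lighter candidates demand detectors with much lower energy thresholds.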

In practice, detecting dark matter is very challenging. For example, many sources of background particles—such as radioactive decay of normal matter and cosmic-ray interactions in or near the detectors—can mimic a nucleus recoiling from being hit by a particle of dark matter. So, direct-detection experiments have to be located in deep underground laboratories to avoid the cosmic rays; the experiments must be shielded from the byproducts of radioactive decay; and investigators must be able to distinguish nuclear recoils from the much more common electronic recoils produced by background interactions. Scientists started creating the required facilities in the 1990s.

The Cryogenic Dark Matter Search (CDMS), on which I work, was one of the first series of experiments to meet these challenges. It uses ultrapure germanium and silicon detectors cooled to temperatures near absolute zero to detect the heat, or phonons, and ionization—charge—liberated by single-particle interactions in crystals that are roughly the size and shape of hockey pucks. An interaction with the nucleus does not produce as much ionization as does an interaction of the same energy with atomic electrons. This provides an experimental technique for distinguishing the signature of WIMPs, as opposed to background particles, hitting the detectors. The initial experiment was carried out at a shallow site on the campus of Stanford University and ran between 1996 and 2002, but ultimately it was limited by cosmic-ray background interference.
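The discrimination technique described above can be sketched in a few lines. This is an illustration only, not the CDMS analysis: the event fields, the function names, and the yield band used to flag nuclear-recoil candidates are hypothetical, chosen to show how the ratio of ionization to recoil energy separates the two populations.

```python
from dataclasses import dataclass


@dataclass
class Event:
    recoil_kev: float       # recoil energy inferred from the phonon (heat) signal
    ionization_kev: float   # ionization signal, calibrated so electron recoils give a ratio near 1


def ionization_yield(event):
    """Ratio of ionization energy to recoil energy for one event."""
    if event.recoil_kev <= 0:
        raise ValueError("recoil energy must be positive")
    return event.ionization_kev / event.recoil_kev


def classify(event, nuclear_band=(0.15, 0.45)):
    """Label an event by its yield; the band limits are illustrative assumptions."""
    low, high = nuclear_band
    y = ionization_yield(event)
    if y > high:
        return "electron-recoil-like"
    if y >= low:
        return "nuclear-recoil candidate"
    return "low-yield (unclassified)"


if __name__ == "__main__":
    for ev in (Event(20.0, 19.5), Event(20.0, 6.0)):
        print(ev, "->", classify(ev))
```

In a real experiment the band would be measured with calibration sources and would vary with energy, so a fixed pair of limits is only a stand-in for that calibration.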

A second-generation experiment, CDMS-II, started operation in 2002 in the Soudan Underground Laboratory, located in a retired iron mine in northern Minnesota. At 713 meters below ground, the depth filtered out most of the cosmic rays. CDMS-II had improved shielding against radioactivity and featured continuous improvement in detector technologies for rejecting residual backgrounds. By the end of CDMS-II in 2015, no unambiguous dark-matter signature had been found, but the rate of WIMP interactions with normal matter was pinned down for much of the possible WIMP mass range.

During the time CDMS-II was operating, other direct-detection experiments were coming online. Many of these used noble liquids, primarily xenon and argon, that provided much larger target mass and self-shielding: The outer parts of the liquid absorb background radiation, leaving a quiet inner region in which to detect WIMPs. Some of these noble-liquid experiments surpassed the ability of CDMS-II to detect WIMPs with masses greater than about 10 times that of a proton. Progress will continue in this high-mass region with three experiments: the Italy-based XENON1T detector (currently operational); LZ, a merger of the LUX (Large Underground Xenon) and ZEPLIN (ZonEd Proportional scintillation in LIquid Noble gases) experiments, in South Dakota (scheduled for operation by 2020); and DARWIN (DARk matter WImp search with liquid xenoN, scheduled for operation by 2024). (See "First Person: Elena Aprile.")


The main problem for dark-matter experiments is that we don’t know what we’re looking for, whether it’s WIMPs or something else. While CDMS-II was operating, in fact, theorists conjectured that there might be an entire dark sector of particles, analogous to the Standard Model of normal-matter particles. Some of these suspected dark-matter particles are not very massive, which adds to the challenges, because the interaction of lightweight dark-matter particles with normal matter produces very small energy depositions in detectors.

An updated detector methodology, dubbed CDMSlite (“lite” stands for low ionization threshold experiment), was developed in 2013 to meet the challenge of searching for lightweight dark-matter particles. In this mode of detector operation, charges liberated by a particle interaction are accelerated by a voltage across the crystal, which leads to a much larger phonon signal. This amplification provides a considerably lower energy threshold and sensitivity to low-energy nuclear (and electron) recoil signatures of interacting lightweight dark matter. In principle, this technique allows detection of a single electron liberated by the interaction of a dark-matter particle, and we have recently realized that possibility in a small prototype detector.
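The size of this amplification can be estimated with simple bookkeeping: Each liberated charge pair that drifts across the full bias voltage adds an amount of phonon energy equal to that voltage, expressed in electron volts. The sketch below assumes about 3 electron volts per electron-hole pair in germanium and an illustrative bias of 70 volts. Both numbers, and the function names, are assumptions for the example rather than values taken from this article.

```python
EV_PER_PAIR_GE = 3.0   # assumed average energy (eV) to create one electron-hole pair in germanium


def total_phonon_energy_ev(recoil_ev, bias_volts, yield_fraction=1.0,
                           ev_per_pair=EV_PER_PAIR_GE):
    """Recoil energy plus the extra phonon energy from drifting charges.

    yield_fraction is the share of recoil energy that goes into ionization
    (about 1 for electron recoils, smaller for nuclear recoils).
    """
    n_pairs = yield_fraction * recoil_ev / ev_per_pair
    return recoil_ev + n_pairs * bias_volts   # each pair contributes bias_volts eV


def amplification(bias_volts, yield_fraction=1.0, ev_per_pair=EV_PER_PAIR_GE):
    """Ratio of the total phonon signal to the original recoil energy."""
    return 1.0 + yield_fraction * bias_volts / ev_per_pair


if __name__ == "__main__":
    print("gain at 70 V (electron recoil):", amplification(70.0))
    print("signal from 300 eV at 70 V:", total_phonon_energy_ev(300.0, 70.0), "eV")
```

In this picture a single liberated charge pair drifting across 70 volts would produce about 70 electron volts of phonon energy, which suggests why a sufficiently low-noise detector could register individual electrons.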

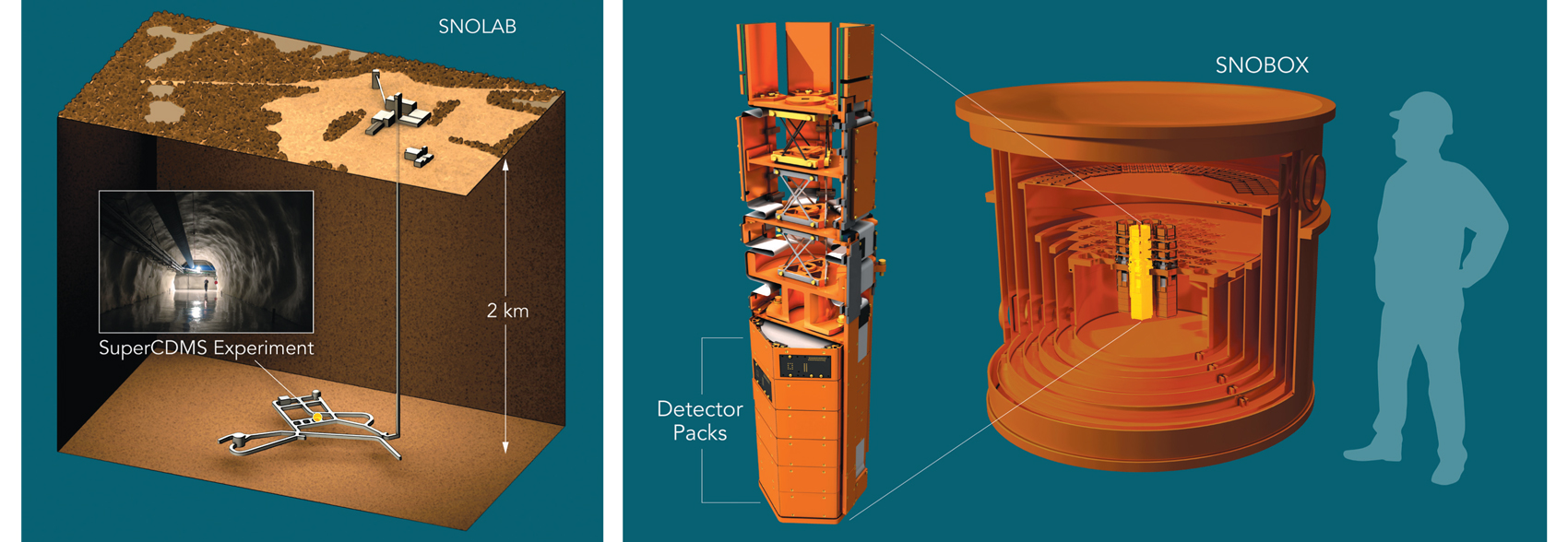

We are now designing and building a third-generation experiment, called SuperCDMS. It will be located 2,070 meters below the surface in a clean-room facility called SNOLAB (Sudbury Neutrino Observatory Laboratory), near Sudbury in Ontario, Canada. This experiment will focus on searches for very light dark-matter particles using the detector techniques pioneered at Soudan. It will also have improved shielding and control of residual radioactivity. An elaborate cryogenics system allows operation of the detectors at temperatures as low as 0.015 kelvins, minimizing thermal interference with the heat signatures from particle interactions.

Greg Stewart/SLAC National Accelerator Laboratory; inset: SNOLAB

A low-noise electronics and data-acquisition system records the tiny signals and makes the data available for offline processing and reconstruction of the particle interactions. Calibration using radioactive sources and neutron beams allows us to understand the detector performance in great detail and compare it with simulations. Using these techniques, we expect to be sensitive to dark-matter interactions at the rate of just a few events per year.
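A rate of a few events per year means that any result is governed by counting statistics. The sketch below assumes an idealized, background-free experiment in which events follow a Poisson distribution. The function names are illustrative, and a real analysis would also have to model backgrounds and detection efficiency.

```python
import math


def prob_at_least_one(expected_events):
    """Chance of recording one or more events when the Poisson mean is expected_events."""
    return 1.0 - math.exp(-expected_events)


def upper_limit_if_none_seen(confidence=0.90):
    """Largest Poisson mean still compatible with seeing zero events at the given confidence."""
    return -math.log(1.0 - confidence)


if __name__ == "__main__":
    for mean in (1.0, 3.0, 5.0):
        print(f"expected {mean:.0f} events -> P(at least one) = {prob_at_least_one(mean):.3f}")
    print(f"zero events seen -> mean below {upper_limit_if_none_seen():.2f} at 90% confidence")
```

The second function illustrates how a search that sees nothing still yields information: It rules out any interaction rate that would have been expected to produce more than about 2.3 events during the exposure.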

Compared with CDMSlite, SuperCDMS SNOLAB will be an improvement of many orders of magnitude in sensitivity to dark-matter interactions with normal matter and an improvement of about one order of magnitude in the lowest mass dark-matter particle it can detect. Much of this improvement in sensitivity comes directly from reducing background signals and noise.

So far, every search for dark matter has come up empty, and more challenges lie ahead. For instance, dark-matter experiments will eventually run into a background that cannot be reduced. Neutrinos emitted by the Sun and other stars are all around us, but rarely interact with normal matter—most pass directly through the entire Earth. However, the experiments are becoming so sensitive that they will begin to detect the very rare interactions of neutrinos with atomic nuclei, and these are essentially indistinguishable from dark-matter interactions. Some very ingenious experimental techniques will be required to separate dark-matter interactions from this neutrino “fog.”

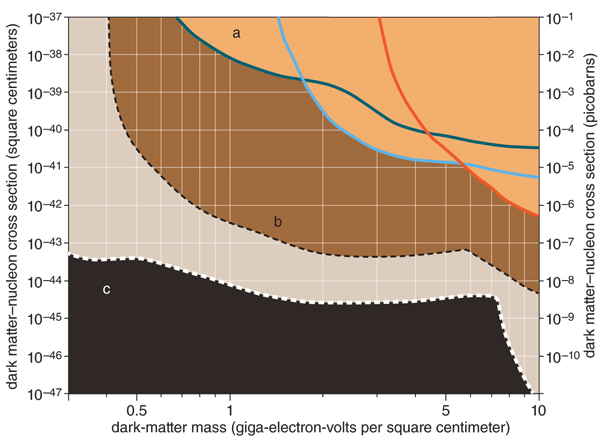

The search for dark matter explores the rate of its interaction with normal matter (y-axis) versus dark-matter particle mass (x-axis). Some experiments have already ruled out certain areas of this graph (a), and the SuperCDMS SNOLAB experiment will search for less massive particles of dark matter that interact even less frequently with normal matter (b). As detectors get even more sensitive, solar neutrinos will create large levels of background (c); if that’s where dark-matter particles exist, scientists will need to develop new instrumentation and data analysis to find them.

Data from SuperCDMS collaboration.

And so, the search goes on, because detection of dark-matter particles would exceed the excitement of anything that has emerged from particle physics in the past 20 years, including the discovery of the Higgs particle. The main purpose is to gain an understanding of the material that makes up about 85 percent of the matter in the universe. Once we directly determine how dark matter interacts with normal matter in a nongravitational way, we will also gain an understanding of the larger-scale structures that we see in the universe.

Finally, a discovery of particle dark matter would certainly change our conceptions of particle physics, especially if there is a whole sector of dark-matter particles. There could be an entire dark universe composed of particles that are currently unknown. Those of us working on the next-generation experiments fervently hope that this new frontier will become accessible to us within the next decade.
